When was the last time you audited the security of your Kubernetes clusters? Kubernetes has become the backbone of modern cloud-native infrastructure, but with that great power comes a sprawling attack surface. Misconfigurations, unpatched CVEs, and insecure defaults can lurk in the shadows of your cluster – each a potential breach waiting to happen. In fact, according to Red Hat’s State of Kubernetes Security 2023 report, 67% of teams had to delay deployments due to security concerns, and a whopping 90% experienced at least one security incident in their K8s environments over the previous year. These stats underscore a simple truth: Kubernetes security is both urgent and non-negotiable.

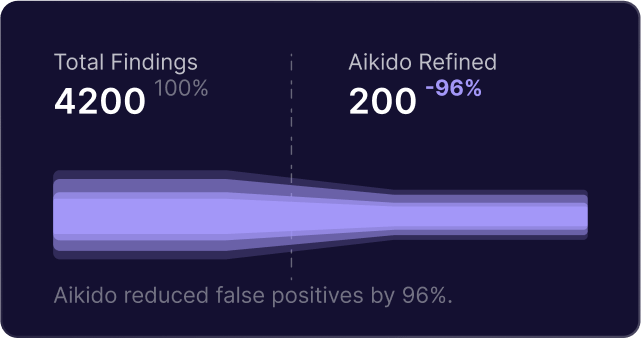

In this article, we’ll break down some of the top Kubernetes security vulnerabilities plaguing DevOps and cloud-native teams today. From recent high-profile CVEs to common configuration pitfalls, we’ll explore what they are, why they’re dangerous, and how you can mitigate them. (Along the way, we’ll also highlight how tools like Aikido can help detect or defend against these issues.) Let’s dive in.

1. Exposed Kubernetes Dashboards and APIs

The Vulnerability: A surprisingly common Kubernetes pitfall is leaving the cluster dashboard or API endpoint exposed to the internet without proper authentication. This is akin to leaving your data center’s front door wide open. Attackers constantly scan for open Kubernetes ports – and if they find an unsecured entry, it’s game over.

Why It’s Dangerous: The infamous Tesla cloud breach is a cautionary tale. In 2018, Tesla’s Kubernetes console was compromised because it wasn’t password-protected. Attackers who found the open dashboard were able to deploy crypto-mining containers in Tesla’s cloud, throttle usage to avoid detection, and even route traffic through Cloudflare to hide their tracks. Similarly, in another incident, an exposed Kubernetes cluster at Weight Watchers leaked AWS keys and internal endpoints, potentially exposing millions of users’ data. These real-world breaches show how a basic oversight (not securing your K8s UI/API) can lead to severe consequences.

Mitigation: Always lock down Kubernetes admin interfaces. Never run the Kubernetes dashboard on a public IP without authentication (in fact, avoid running the legacy dashboard in production at all). Require authentication for every request to the API server, for example through OAuth/OIDC integration, and use role-based access control (RBAC) to limit what each authenticated identity can do. Network-wise, restrict API access to trusted IPs or VPNs. Consider using Kubernetes API server audit logs and threat detection to catch unauthorized attempts.
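To make the “trusted IPs” rule concrete, here is a minimal Python sketch that checks an allow-list of CIDRs for the API endpoint and flags any entry that is not contained in a private range. It assumes you have already exported that list (for example from your cloud provider’s authorized-networks setting or a firewall rule); the function name and the decision to treat only the RFC 1918 ranges as trusted are illustrative assumptions, not part of any Kubernetes API.

```python
"""Hypothetical audit helper: flag API-server allow-list entries outside trusted ranges."""
from __future__ import annotations

import ipaddress

# Assumption: "trusted" means the RFC 1918 private IPv4 ranges.
# Add your VPN or office egress ranges here if they use public addresses.
TRUSTED_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def untrusted_cidrs(allowed: list[str]) -> list[str]:
    """Return every CIDR in `allowed` that is not fully contained in a trusted range."""
    flagged = []
    for cidr in allowed:
        net = ipaddress.ip_network(cidr, strict=False)
        # subnet_of() raises TypeError when IP versions differ, so compare versions first.
        contained = any(
            net.version == trusted.version and net.subnet_of(trusted)
            for trusted in TRUSTED_RANGES
        )
        if not contained:
            flagged.append(cidr)
    return flagged


if __name__ == "__main__":
    allow_list = ["10.20.0.0/16", "0.0.0.0/0", "203.0.113.7/32", "::/0"]
    for cidr in untrusted_cidrs(allow_list):
        print(f"API endpoint reachable from untrusted range: {cidr}")
```

Anything flagged, such as 0.0.0.0/0, means the API server is reachable from the whole internet and relies on authentication alone. If your VPN or office egress uses public addresses, add those ranges to TRUSTED_RANGES rather than ignoring the findings.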

Aikido’s cloud security posture management (CSPM) can help flag if your Kubernetes control plane endpoints are publicly accessible or lacking authentication, so you can remediate before attackers find them.

2. Over-Privileged Access and RBAC Misconfigurations

The Vulnerability: Kubernetes’ RBAC (Role-Based Access Control) system is meant to enforce least privilege, but it only helps if you configure it properly. A common mistake is granting overly broad permissions to users, service accounts, or pods. For example, binding a service account to the cluster-admin role (or using the default service account with high privileges) can turn a single container compromise into a cluster-wide takeover.

Why It’s Dangerous: Assigning broad Kubernetes RBAC roles increases risk. If an attacker gains a foothold in a pod or obtains stolen credentials, an over-privileged account can let them escalate to cluster-wide access. For example, a compromised application pod whose service account token is bound to a permissive role could let an attacker create new pods, read Secrets, or even delete resources. Essentially, a misconfigured RBAC policy acts as a force multiplier for any breach. By default, Kubernetes also mounts a service account token into every pod, which, if that account’s permissions are not limited, can grant unintended API access. Attackers know to look for these tokens in compromised containers (they are mounted under /var/run/secrets/kubernetes.io/serviceaccount/).

Mitigation: Embrace the principle of least privilege for all Kubernetes accounts. Audit your Roles and ClusterRoles: are you granting wildcard (“*”) permissions where you shouldn’t? Define fine-grained roles per application or team, and restrict sensitive actions (like creating pods or reading secrets) to only those who truly need them. Disable automounting of service account tokens in pods that don’t need API access (set automountServiceAccountToken: false on the pod or on its service account). Admission policy engines such as Open Policy Agent (OPA) Gatekeeper can reject workloads that run under the default service account or bindings that grant excessive rights. Note that Kubernetes Pod Security Standards govern pod security settings, not RBAC or service account permissions, so they won’t catch these issues on their own.
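A wildcard audit is easy to script. The sketch below assumes you have saved the output of kubectl get roles,clusterroles,rolebindings,clusterrolebindings -A -o json to a file; the helper names are hypothetical, and the checks cover only the two patterns discussed above (wildcard rules and bindings to cluster-admin), not a full least-privilege analysis.

```python
"""Hypothetical RBAC audit: flag wildcard rules and bindings to cluster-admin."""
from __future__ import annotations

import json
import sys
from typing import Any

WILDCARD_FIELDS = ("verbs", "resources", "apiGroups")


def has_wildcard(role: dict[str, Any]) -> bool:
    """True if any rule in a Role/ClusterRole uses '*' for verbs, resources or apiGroups."""
    return any(
        "*" in (rule.get(field) or [])
        for rule in role.get("rules") or []
        for field in WILDCARD_FIELDS
    )


def grants_cluster_admin(binding: dict[str, Any]) -> bool:
    """True if a RoleBinding/ClusterRoleBinding references the built-in cluster-admin ClusterRole."""
    ref = binding.get("roleRef") or {}
    return ref.get("kind") == "ClusterRole" and ref.get("name") == "cluster-admin"


def audit(items: list[dict[str, Any]]) -> list[str]:
    """Return human-readable findings for a list of RBAC objects."""
    findings = []
    for obj in items:
        kind = obj.get("kind", "")
        meta = obj.get("metadata") or {}
        name = f"{meta.get('namespace', '(cluster)')}/{meta.get('name', '?')}"
        if kind in ("Role", "ClusterRole") and has_wildcard(obj):
            findings.append(f"{kind} {name} uses wildcard permissions")
        if kind in ("RoleBinding", "ClusterRoleBinding") and grants_cluster_admin(obj):
            subjects = ", ".join(s.get("name", "?") for s in obj.get("subjects") or [])
            findings.append(f"{kind} {name} grants cluster-admin to: {subjects}")
    return findings


if __name__ == "__main__":
    # Usage: python rbac_audit.py rbac.json
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as fh:
            for finding in audit(json.load(fh).get("items", [])):
                print(finding)
```

Built-in entries such as the cluster-admin ClusterRole and its default binding to the system:masters group will show up too; the findings worth chasing are custom roles and bindings that hand those rights to application service accounts.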

Aikido’s K8s security scanner can identify over-permissioned service accounts and RBAC policies. It helps teams spot roles that violate least privilege, so you can tighten them before an attacker exploits the weakness.

3. Running Pods as Root & Privileged Containers

The Vulnerability: Too often, containers in Kubernetes are run with root user privileges or even with the privileged flag enabled, meaning they have essentially full access to the host system. Additionally, some deployments mount host directories (via hostPath volumes) or fail to restrict Linux capabilities. These configurations create a fertile ground for container escape exploits.

Why It’s Dangerous: As one security expert put it, “every privileged container is a potential gateway to your entire cluster.” If a container is running as root and gets compromised, the attacker can attempt to break out of the container isolation. Real-world Linux kernel vulnerabilities demonstrate this risk. For instance, the Dirty Pipe flaw (CVE-2022-0847) allowed an unprivileged process to overwrite the contents of read-only files, such as setuid binaries like /usr/bin/sudo, and escalate to root; because containers share the host kernel, researchers used the same primitive to build container escapes. Another flaw, CVE-2022-0492, showed that a root process in a container without seccomp, AppArmor, or SELinux protection could abuse the cgroup v1 release_agent mechanism to escape to the host. In Kubernetes, if you run pods without a restrictive security context (no seccomp, no AppArmor, running as UID 0), you’re essentially relying on the Linux kernel alone for isolation, and any kernel bug can shatter it.

Mitigation: Never run application containers as root unless absolutely necessary. Enforce a securityContext in your pod specs: set runAsNonRoot: true, drop all Linux capabilities by default, and avoid privileged: true except for trusted low-level infrastructure pods. Use seccomp and AppArmor profiles (or SELinux in OpenShift) to sandbox what system calls and resources a container can access. Kubernetes enforces the Pod Security Standards through its built-in Pod Security Admission controller: label namespaces with pod-security.kubernetes.io/enforce=restricted to reject risky configurations. Also beware of dangerous volume mounts: do not mount the Docker socket or host file system into containers (common in DIY “Docker-in-Docker” scenarios).
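The same securityContext rules can be checked before a manifest ever reaches the cluster. This is a minimal sketch, assuming the pod spec has already been parsed into a Python dict (for example the spec from kubectl get pod NAME -o json, or a Deployment’s spec.template.spec); the function name is hypothetical, it checks only the fields named above, and it is not a substitute for Pod Security Admission or a full policy engine.

```python
"""Hypothetical pod-spec audit mirroring the securityContext guidance above."""
from __future__ import annotations

from typing import Any

SAFE_SECCOMP_TYPES = {"RuntimeDefault", "Localhost"}


def risky_settings(pod_spec: dict[str, Any]) -> list[str]:
    """Return findings for privileged, root, capability, seccomp and hostPath issues."""
    findings = []
    pod_ctx = pod_spec.get("securityContext") or {}
    containers = (pod_spec.get("initContainers") or []) + (pod_spec.get("containers") or [])
    for c in containers:
        name = c.get("name", "?")
        ctx = c.get("securityContext") or {}
        if ctx.get("privileged"):
            findings.append(f"{name}: privileged: true")
        # Container-level fields override the pod-level securityContext.
        if ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot")) is not True:
            findings.append(f"{name}: runAsNonRoot is not true")
        if ctx.get("runAsUser", pod_ctx.get("runAsUser")) == 0:
            findings.append(f"{name}: runs as UID 0")
        if "ALL" not in ((ctx.get("capabilities") or {}).get("drop") or []):
            findings.append(f"{name}: capabilities not dropped (drop: [ALL] missing)")
        seccomp = ctx.get("seccompProfile") or pod_ctx.get("seccompProfile") or {}
        if seccomp.get("type") not in SAFE_SECCOMP_TYPES:
            findings.append(f"{name}: no RuntimeDefault/Localhost seccomp profile")
    for vol in pod_spec.get("volumes") or []:
        host_path = vol.get("hostPath")
        if host_path is not None:
            path = host_path.get("path", "")
            hint = " (host socket, e.g. Docker or containerd)" if path.endswith(".sock") else ""
            findings.append(f"volume {vol.get('name', '?')}: hostPath {path}{hint}")
    return findings


if __name__ == "__main__":
    example = {"containers": [{"name": "web", "image": "nginx:1.25"}]}
    for finding in risky_settings(example):
        print(finding)
```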

Aikido’s container scanner checks image and deployment settings – it will flag if a pod spec is running as root or with privileged mode. Aikido can even provide guided fixes, ensuring your deployments adhere to least privilege.

4. CVE-2023-5528 – Windows Node Privilege Escalation

Not all Kubernetes vulnerabilities are Linux-based; Windows nodes have had their share of critical flaws. CVE-2023-5528 is a recent high-severity example. It’s a security issue in Kubernetes where a user with permissions to create pods and PersistentVolumes on a Windows node could escalate to administrator privileges on that node. In plain terms, an attacker who can deploy a pod to a Windows worker could break out and gain control of the Windows host, if the cluster is vulnerable.

This vulnerability specifically involved an “in-tree” storage plugin on Windows. Kubernetes clusters using in-tree volume plugins for Windows nodes (as opposed to CSI drivers) were affected. By crafting a malicious pod and PersistentVolume combination, an attacker could exploit insufficient input sanitization in the volume plugin to execute code with administrator privileges on the Windows node.

Why It’s Dangerous: If an attacker gets admin on a node (Windows or Linux), they effectively compromise all pods on that node and can often move laterally in the cluster (for example, by intercepting service account tokens or manipulating the kubelet). Windows nodes may be less common, but they’re often less monitored in mixed clusters, making a successful exploit a silent killer. CVE-2023-5528 and related bugs (CVE-2023-3676, CVE-2023-3893, and CVE-2023-3955) showed that Windows-specific issues can lurk under the radar.

Mitigation: Patch, patch, patch. The fix for CVE-2023-5528 is included in the latest Kubernetes patch releases – upgrade your control plane and kubelets to a version that resolves this issue (check the official CVE bulletin for patched versions). Where possible, migrate from deprecated in-tree storage plugins to CSI drivers, which receive more scrutiny and updates. Also, limit who can create pods and PersistentVolumes in the first place (tie back to RBAC best practices).

Aikido’s vulnerability management feed tracks Kubernetes CVEs – using Aikido, you would be alerted if your cluster version is affected by CVE-2023-5528 or similar issues. Its Kubernetes scanner can also detect outdated components or risky configurations on Windows nodes, prompting you to update before attackers strike.

5. CVE-2024-10220 – Host Execution via gitRepo Volumes

Kubernetes is deprecating some legacy features for a reason. A case in point: the gitRepo volume type. CVE-2024-10220 is a critical vulnerability in Kubernetes’ deprecated gitRepo volume mechanism. It allows an attacker with rights to create a pod using a gitRepo volume to execute arbitrary commands on the host (node) with root privileges. In essence, by deploying a cunningly crafted pod that uses a gitRepo volume, an attacker could break out of the container and run code on the host – achieving full system compromise.

Why It’s Dangerous: The gitRepo volume feature clones a Git repository into a pod at startup. The kubelet performs that clone by running git on the node itself, and CVE-2024-10220 arises because it did not guard against Git hooks shipped inside the target repository. An attacker could place malicious hooks in a repository so that when the kubelet pulls it, those hooks execute on the node, not inside the pod. This means that with a single kubectl apply, any user allowed to create pods might turn a gitRepo volume into a backdoor on the node. What’s scarier is that gitRepo volumes, though officially deprecated, may still be enabled on older clusters: a ticking time bomb if not addressed.

Mitigation: If you haven’t already, disable the gitRepo volume type on your clusters, or upgrade to Kubernetes versions where it’s removed or patched (the fix for CVE-2024-10220 was included in Kubernetes v1.28.12, v1.29.7, v1.30.3, and v1.31.0 per advisories). Use more secure alternatives: for example, clone Git repos in an init container and mount the result, instead of letting the kubelet do it via gitRepo. And again, restrict who can create pods with such volume types – if untrusted users can create workloads, consider a policy (OPA or admission controller) to deny use of deprecated or dangerous volume plugins.
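Both halves of that advice can be checked with a short script. The sketch below compares kubelet versions against the fixed releases listed above and finds pods that still declare gitRepo volumes. The helper names are hypothetical, and treating every release older than 1.28 as vulnerable is an assumption (the advisory lists no fix for those lines). Kubelet versions are reported per node in status.nodeInfo.kubeletVersion, for example via kubectl get nodes -o jsonpath='{.items[*].status.nodeInfo.kubeletVersion}'.

```python
"""Hypothetical checks for CVE-2024-10220 exposure (fixed: v1.28.12, v1.29.7, v1.30.3, v1.31.0)."""
from __future__ import annotations

import re
from typing import Any

# Minor version -> first patch release containing the fix.
FIRST_FIXED_PATCH = {28: 12, 29: 7, 30: 3}


def parse_version(version: str) -> tuple[int, int, int]:
    """Parse 'v1.29.7' or vendor builds like 'v1.29.7-eks-1234' into (1, 29, 7)."""
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def kubelet_patched(version: str) -> bool:
    """True if this kubelet version includes the CVE-2024-10220 fix."""
    major, minor, patch = parse_version(version)
    if major != 1:
        raise ValueError(f"unexpected major version in {version!r}")
    if minor >= 31:
        return True
    if minor in FIRST_FIXED_PATCH:
        return patch >= FIRST_FIXED_PATCH[minor]
    # Assumption: minors older than 1.28 received no fix and are treated as vulnerable.
    return False


def gitrepo_volumes(pod_spec: dict[str, Any]) -> list[str]:
    """Names of volumes in a pod spec that use the deprecated gitRepo type."""
    return [vol.get("name", "?") for vol in pod_spec.get("volumes") or [] if "gitRepo" in vol]
```

Because vendor builds append suffixes (such as -eks-… or +k3s1), the parser only looks at the leading major.minor.patch triple.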

Aikido’s platform keeps an eye on deprecated or risky configurations. It would flag if any deployment is using the gitRepo volume type, and guide you to safer patterns. By continuously scanning your IaC and cluster configs, Aikido helps ensure features like gitRepo don’t slip through the cracks.

6. Vulnerabilities in Third-Party Add-ons (Ingress, CSI & More)

One of Kubernetes’ strengths is its extensible ecosystem: ingress controllers, CNI plugins, CSI drivers, operators, and so on. But each added component can introduce new vulnerabilities. One analysis found that the vast majority of Kubernetes-related CVEs stem from ecosystem tools rather than Kubernetes core: between 2018 and 2023, about 59 of 66 known K8s-related vulnerabilities were in external add-ons, not the Kubernetes project itself. In other words, your cluster is only as secure as its weakest plugin.

Examples: Several critical flaws have been discovered in widely used components:

- Ingress Controllers: In 2023, two serious flaws were disclosed in the popular ingress-nginx controller. CVE-2023-5044 allowed code injection via the nginx.ingress.kubernetes.io/permanent-redirect annotation on an Ingress object, and the related CVE-2023-5043 allowed annotation injection leading to arbitrary command execution. An attacker with the ability to create or edit Ingress resources could leverage these bugs to compromise the controller pod and, by extension, potentially the cluster. (Ingress controllers often run with elevated privileges or access to all cluster namespaces.)

- CSI & Storage Plugins: Storage drivers have had issues too. For instance, a vulnerability in the Azure File CSI driver (CVE-2024-3744) caused service account tokens to be written to its logs. Bugs in other drivers or tools (like cross-account role handling in cloud controllers) can similarly expose sensitive info or allow escalation.

- Helm Charts / Operators: Misconfigured Helm charts or operator permissions can create insecure defaults. While not CVEs in the Kubernetes code, they are security gaps in how we extend K8s (for example, an operator running with cluster-admin rights can be a single point of failure if compromised).

Mitigation: Treat your cluster add-ons as part of your attack surface. Keep your ingress controllers, CSI drivers, CNI plugins, etc. up-to-date with security patches. Subscribe to their security advisories. Wherever possible, run these components with least privilege as well – e.g., if an ingress controller only needs to watch certain namespaces, scope its RBAC accordingly. Use Namespace restrictions or admission controllers to ensure that only trusted sources can install high-privilege operators. It’s also wise to periodically scan your cluster for known vulnerable images: for example, ensure you’re not running a version of ingress-nginx known to be exploitable.
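Checking running add-on versions against advisories can be automated once you have the advisory data. In this sketch the advisory table is deliberately a placeholder (example.com/ingress-controller is not a real image and 2.0.0 is not a real fixed release); you would fill it from each project’s security announcements. Pod specs can come from kubectl get pods -A -o json, and the image-reference parsing assumes the usual repo:tag@digest form.

```python
"""Hypothetical check: flag add-on images older than the first fixed release."""
from __future__ import annotations

import re
from typing import Any, Iterable, Optional

# Placeholder advisory data: image repository -> first release containing the fix.
MIN_SAFE_VERSION = {
    "example.com/ingress-controller": (2, 0, 0),
}


def split_image(ref: str) -> tuple[str, Optional[str]]:
    """Split 'repo[:tag][@digest]' into (repo, tag); colons before the last '/' are registry ports."""
    ref = ref.split("@", 1)[0]
    last_slash, last_colon = ref.rfind("/"), ref.rfind(":")
    if last_colon > last_slash:
        return ref[:last_colon], ref[last_colon + 1:]
    return ref, None


def outdated_addon_images(pod_specs: Iterable[dict[str, Any]]) -> list[str]:
    """Return images of tracked add-ons whose version is below the minimum safe release."""
    flagged = []
    for spec in pod_specs:
        for container in (spec.get("initContainers") or []) + (spec.get("containers") or []):
            image = container.get("image", "")
            repo, tag = split_image(image)
            minimum = MIN_SAFE_VERSION.get(repo)
            if minimum is None:
                continue  # not an add-on we track
            match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", tag or "")
            # Missing or non-semver tags (e.g. "latest") are flagged for manual review.
            if match is None or tuple(int(p) for p in match.groups()) < minimum:
                flagged.append(image)
    return flagged
```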

7. Exposed Secrets and Poor Secrets Management

The Vulnerability: Secrets are the crown jewels in any environment – API keys, credentials, certificates, etc. Kubernetes provides a built-in Secrets object, but using it incorrectly (or storing secrets elsewhere insecurely) can lead to leakage. Common mistakes include hard-coding secrets in container images or config files, failing to encrypt secrets at rest, or overly broad access to secrets in the cluster. Even when using Kubernetes Secrets, teams sometimes expose them by mounting in pods where not needed or by not restricting who can list or read them.

Why It’s Dangerous: If an attacker obtains a secret, they may not need any other exploit to harm you; they can directly access sensitive resources. One report (IBM’s 2025 Cost of a Data Breach) noted that breaches involving stolen or leaked credentials are among the costliest and hardest to detect. In Kubernetes, Secret values are only base64-encoded in manifests and API responses, and by default they are stored in etcd without encryption. As a community post put it, “relying on base64 encoding for secrets… remember, encoding isn’t encryption!” This means that if an attacker gets access to your etcd data or snapshots (or even overly verbose logs), they can read all your “Secrets” with trivial effort. There have also been Kubernetes bugs in this domain: for example, CVE-2023-2728 allowed bypassing the mountable secrets policy on service accounts, and CVE-2023-2878 caused the Secrets Store CSI driver to leak service account tokens in its logs. All of these scenarios end the same way: secrets in plain text, in the wrong hands.
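To see why “encoding isn’t encryption,” this short round trip encodes a value the way it appears in a Secret’s data field and decodes it again with no key at all. The password is a made-up example; in a live cluster, anyone with read access to a Secret can do the same with kubectl get secret NAME -o jsonpath='{.data.password}' | base64 -d (assuming the Secret has a password key).

```python
"""Illustration: base64 in a Secret manifest is encoding, not encryption."""
import base64


def encode_secret_value(plaintext: str) -> str:
    """Encode a value the way it appears under a Secret's `data` field."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Recover the plaintext: no key, password or privilege is involved."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    stored = encode_secret_value("hunter2")  # hypothetical password
    print("value in the manifest:", stored)  # aHVudGVyMg==
    print("anyone can decode it:", decode_secret_value(stored))
```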

Mitigation: Use robust secret management practices. Enable encryption at rest for Kubernetes Secrets (configured by passing an EncryptionConfiguration file to the API server with --encryption-provider-config, so Secrets are encrypted in etcd with a key). Limit which applications or pods actually get access to each secret; avoid mounting secrets in every pod in a namespace just because it’s easy. Use external secret stores or operators (like HashiCorp Vault or the Kubernetes Secrets Store CSI Driver) to integrate more secure secret storage. Scan your container images and code for any hard-coded credentials or tokens before they reach production. Mark Secrets that should never change as immutable (immutable: true, stable since Kubernetes v1.21) to guard against accidental or malicious updates, and keep log verbosity low on components that handle secrets so tokens don’t end up in logs or debug endpoints.
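For the encryption-at-rest step, here is a minimal sketch that writes an EncryptionConfiguration for Secrets using the secretbox provider and a freshly generated 32-byte key. The provider choice, key name, and output path are assumptions; for production clusters the Kubernetes documentation recommends a KMS provider, since a key file on the control-plane host does not protect against compromise of that host. JSON is valid YAML, so the output file can be passed to the API server with --encryption-provider-config.

```python
"""Sketch: generate an EncryptionConfiguration that encrypts Secrets in etcd."""
from __future__ import annotations

import base64
import json
import os
import secrets
import sys


def encryption_config(key_name: str = "key1") -> dict:
    """Build the config dict with a new random key (key_name is an arbitrary label)."""
    # secretbox expects a base64-encoded 32-byte key.
    key = base64.b64encode(secrets.token_bytes(32)).decode("ascii")
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    # The first provider is used to encrypt new writes.
                    {"secretbox": {"keys": [{"name": key_name, "secret": key}]}},
                    # identity lets the API server still read Secrets stored before encryption.
                    {"identity": {}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Usage: python make_encryption_config.py /path/to/encryption-config.json
    if len(sys.argv) > 1:
        # New files are created readable and writable by the owner only (mode 0600).
        fd = os.open(sys.argv[1], os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
        with os.fdopen(fd, "w", encoding="utf-8") as fh:
            json.dump(encryption_config(), fh, indent=2)
```

Secrets that already exist stay unencrypted until they are rewritten; the Kubernetes documentation suggests kubectl get secrets --all-namespaces -o json | kubectl replace -f - to force that rewrite once the new configuration is active.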

Aikido provides live secret detection features – it can scan your code, config, and even container layers for API keys, passwords, and other sensitive strings. This helps catch accidentally exposed secrets early. Additionally, Aikido can monitor your environments so that if a secret is leaked (say, committed to an image), you’re immediately alerted to rotate it.

8. Untrusted and Vulnerable Container Images

The Vulnerability: The software inside your containers can be just as big a risk as misconfigurations in Kubernetes. Running a vulnerable container image – for example, one with outdated libraries or known CVEs – means your application is a target for exploitation. Moreover, pulling images from untrusted sources (public registries or random Docker Hub images) can introduce malware or backdoors. In Kubernetes, developers often use base images and third-party images liberally; without scanning, this supply chain can smuggle in severe vulnerabilities.

Why It’s Dangerous: Recent studies indicate that a significant percentage of container images contain vulnerabilities – some reports show over 50% of Docker images carry at least one critical flaw. That means if you’re not scanning your images, odds are you’re deploying known exploitable issues. For example, consider a critical CVE in a popular open-source library (think Log4j in late 2021). Attackers will automatically scan the internet for any service using that library. If your container has it, and it’s reachable, they will attempt to exploit it. Kubernetes doesn’t magically shield you from that – if anything, the ease of deployment might lead to running many instances of vulnerable apps. Additionally, there have been instances of malicious images (typosquatting official ones, or images that promise a useful tool but actually include a cryptominer). If such an image is pulled into your cluster, your entire environment’s integrity is at risk.

Mitigation: The remedy here is two-fold: use trusted images and keep them updated, and continuously scan for vulnerabilities. Avoid using the :latest tag for images, as it can lead to indeterminate, unpatched versions being used. Instead, pin to specific versions or digests that you’ve vetted. As Aikido’s experts say, point to specific, trusted versions (e.g., FROM ubuntu:20.04-<date>) instead of using tags like latest, and remember to consistently scan using tools like Aikido to detect CVEs and apply fixes. Adopt an image vulnerability scanning tool in your CI/CD pipeline to catch known issues before deployment. Kubernetes itself can help with admission controllers that reject images failing policy (e.g., not scanned or with high-sev vulns). Also, periodically review running workloads – if an image hasn’t been rebuilt in a while, it likely has accumulated known CVEs; rebuild it with updated packages. Finally, enforce image provenance: use signed images (Docker Content Trust or Sigstore Cosign) so you don’t accidentally run tampered images.
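Pinning is easy to lint for. This hypothetical helper classifies image references and lists the unpinned ones; it assumes standard name[:tag][@digest] references and treats a missing tag as :latest, which is how the image reference resolves. Note that a version tag is still mutable in the registry; only a digest guarantees the exact image you scanned.

```python
"""Hypothetical lint: is a container image reference pinned?"""
from __future__ import annotations


def pin_status(image: str) -> str:
    """Classify an image reference as 'digest', 'tag', 'latest' or 'untagged'."""
    if "@" in image:
        return "digest"
    # A colon after the last '/' separates the tag; earlier colons are registry ports.
    last_slash, last_colon = image.rfind("/"), image.rfind(":")
    if last_colon > last_slash:
        return "latest" if image[last_colon + 1:] == "latest" else "tag"
    # No tag at all resolves to :latest, so it is just as unpredictable.
    return "untagged"


def unpinned(images: list[str]) -> list[str]:
    """Return references that float to whatever :latest points at today."""
    return [img for img in images if pin_status(img) in ("latest", "untagged")]
```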

Aikido’s container image scanning integrates into CI and registries to automatically find vulnerabilities in your images. It leverages a rich vulnerability database (including the latest CVEs from 2023–2024) and even offers AI AutoFix suggestions for some issues. By using Aikido, DevOps teams can ensure no image is deployed without being scanned for known flaws – and even better, get guidance on updating or patching those images.

9. Insufficient Network Segmentation (Lateral Movement)

The Vulnerability: Out of the box, Kubernetes allows pods to communicate with each other freely within a cluster. Every pod can reach every other pod (and service) by default. While this makes for a flexible microservices architecture, it also means that if an attacker compromises one pod, they can scan and pivot to other pods easily – this is called lateral movement. The lack of internal network segmentation (unless you configure NetworkPolicies) is a security risk. Even if you do configure NetworkPolicies, they must be done correctly cluster-wide; any gap can be an attack pathway.

Why It’s Dangerous: Think of a Kubernetes cluster with no network restrictions as an open-plan office with no walls: great for collaboration, terrible for security. “Pods and services communicate freely, making lateral movement a breeze for attackers,” as one engineer noted. An intruder who gets a foothold in one container might start probing the entire cluster: accessing an open database here, hitting an internal admin service there, or exploiting a vulnerable service deep in the cluster that was never meant to be exposed. We’ve also seen specific vulnerabilities that worsen this problem. For example, a recent Kubernetes bug, CVE-2024-7598, allowed a malicious or compromised pod to bypass network policy restrictions through a race condition during namespace termination. In other words, even if you thought you had locked down inter-pod traffic, a clever attacker could slip through during a corner-case scenario. This underscores that relying on defaults (or even a few policies) is not enough; you need a defense-in-depth approach for network security.

Mitigation: Implement Network Policies to segment your cluster network, and confirm that your CNI plugin actually enforces them: NetworkPolicy objects have no effect unless the network plugin supports them. At minimum, adopt a default deny policy for inter-namespace traffic and then explicitly allow required flows (e.g., front-end namespace can talk to backend namespace on port X, but nothing else). For clusters handling multiple tenants or teams, use stronger isolation like separate network segments or even separate clusters for sensitive workloads. Consider using a service mesh or zero-trust networking model where every service-to-service call is authenticated and authorized. Monitor network traffic for anomalies; unusual connections could mean an attacker is mapping out your cluster. And of course, keep your Kubernetes version updated; when network-related CVEs like the above are disclosed, apply patches promptly so attackers can’t exploit them.
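The default-deny pattern can be generated as manifests. This is a minimal sketch, assuming a backend namespace that should accept traffic only from a frontend namespace on TCP 8080 (all three are hypothetical values); it relies on the kubernetes.io/metadata.name label that current Kubernetes releases set on every namespace. Run it as python netpol.py | kubectl apply -f - (kubectl accepts JSON, including a List of objects).

```python
"""Sketch: default-deny ingress for a namespace plus one explicit allow rule."""
from __future__ import annotations

import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # Empty podSelector selects all pods; Ingress with no rules denies all inbound traffic.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_from_namespace(namespace: str, source_namespace: str, port: int) -> dict:
    """Allow TCP traffic on one port from pods in `source_namespace` only."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-from-{source_namespace}", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {"namespaceSelector": {
                            "matchLabels": {"kubernetes.io/metadata.name": source_namespace}}}
                    ],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            default_deny_ingress("backend"),
            allow_from_namespace("backend", "frontend", 8080),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

Keep in mind that default-deny ingress also blocks traffic between pods inside the backend namespace itself, and this example leaves egress unrestricted; extend both deliberately rather than discovering the gaps in production.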

Aikido’s runtime defense capabilities include monitoring of network activity between pods. It learns normal communication patterns and can raise alerts or block connections when an abnormal, potentially malicious communication occurs. This kind of monitoring is an excellent backstop in case a NetworkPolicy is misconfigured or a new attack vector (like a policy bypass) is discovered.

Conclusion: Strengthening Your Kubernetes Posture

Kubernetes security is a wide-ranging challenge. As we’ve seen, it spans everything from configuration mistakes to CVEs in Kubernetes itself, its add-ons, and the host kernel. The key takeaway is that awareness and proactive measures go hand in hand. By understanding these top vulnerabilities (and, importantly, how attackers exploit them), you can prioritize securing those weak links in your cluster before they’re hit.

Start by addressing misconfigurations: enforce least privilege (no more overly broad RBAC roles or root containers), lock down access points (dashboards, APIs, etc.), encrypt and manage your secrets properly, and segment your network. In parallel, keep up with patches for Kubernetes itself and its ecosystem components; when new CVEs (like those from 2023–2024 we discussed) come out, evaluate your exposure and update swiftly. Integrate security into your CI/CD: scan images for vulnerabilities and secrets, and don’t deploy what you haven’t vetted.

Finally, equip your team with the right tools. Kubernetes may be complex, but you don’t have to secure it alone. Platforms like Aikido can act as your security sidekick – from scanning infrastructure-as-code for misconfigurations, to monitoring running clusters for threats, to suggesting fixes with AI assistance. The threats to K8s are real and evolving, but with vigilance and smart tooling, you can stay ahead. Secure your Kubernetes clusters now, and you’ll keep that agility and power that K8s provides without losing sleep at night over what might be lurking in your pods.
