The rise of AI in cybersecurity is impossible to ignore. Large language models (LLMs) like GPT-4 have taken center stage, showing up in everything from code assistants to security tools. Penetration testing – traditionally a slow, manual process – is now seeing an AI-driven revolution. In fact, in a recent study 9 out of 10 security professionals said they believe AI will eventually take over penetration testing. The promise is alluring: imagine the thoroughness of a seasoned pentester combined with the speed and scale of a machine. This is where the concept of “Pentest GPT” comes in, and it’s changing how we think about offensive security.

But what exactly is Pentest GPT? And just as importantly, what isn’t it? Before you picture a glorified ChatGPT script magically hacking systems, let’s clarify the term and explore how LLMs are being woven into the pentesting process. We’ll also look at the hard limits of GPT-powered pentests – from AI hallucinations to context gaps – and why human expertise remains vital. Finally, we’ll see how Aikido Security’s approach (our continuous AI-driven pentest platform) tackles these challenges differently with human validation, developer-friendly outputs, and CI/CD integration. Let’s dive in with a calm, pragmatic look at this new era of AI-assisted penetration testing.

What Does “Pentest GPT” Really Mean (and Not Mean)?

“Pentest GPT” refers to the application of GPT-style language models to penetration testing workflows. In simple terms, it’s about using an AI brain to emulate parts of a pentester’s job – from mapping out attack paths to interpreting scan results. However, it’s not as simple as taking ChatGPT, slapping on a hacker hoodie, and expecting a full penetration test from one prompt. The distinction matters.

General models like ChatGPT are trained on broad internet text and can certainly explain security concepts or brainstorm attacks, but they lack specialized offensive security knowledge by default. They weren’t built with intimate understanding of exploit frameworks, CVE databases, or the step-by-step workflow a real pentester follows. In contrast, a true Pentest GPT system is typically fine-tuned for security. It’s trained on curated data like vulnerability write-ups, red-team playbooks, exploit code, and real pentest reports. This specialization means it “speaks” the language of hacking tools and techniques.

Another key difference is integration. Pentest GPT isn’t just an isolated chatbot – it’s usually wired into actual security tools and data sources. For example, a well-designed Pentest GPT might plug into scanners and frameworks (Nmap, Burp Suite, Metasploit, etc.) so it can interpret their output and recommend next steps. It serves as an intelligent layer between tools, not replacing them outright. A helpful analogy from one commentary: ChatGPT might give you a nice summary of what SQL injection is, whereas Pentest GPT could walk you through finding a live SQL injection on a site, generate a tailored payload, validate the exploit, and even suggest a fix afterward. In short, Pentest GPT is more than just “ChatGPT + a prompt = pentest.” It implies a purpose-built AI assistant that understands hacking context and can act on it.

It’s also worth noting what Pentest GPT is not. It’s not a magic one-click hacker that renders all other tools obsolete. Under the hood, it still relies on the usual arsenal – scanners, scripts, and exploits – but uses the LLM to tie everything together. Think of it as an amplifier for automation: it adds reasoning and context to the raw results that traditional automated tools output. And despite the catchy name, “Pentest GPT” as used here isn’t a single product or AI model, but a growing category of approaches. Early prototypes such as the open-source research project PentestGPT and AutoPentest-GPT have demonstrated multi-step testing guided by GPT-4, and established security platforms (like Aikido) are now embedding GPT-powered reasoning into their pentest engines. The field is evolving fast, but the core idea remains: use LLMs to make automated pentesting smarter and more human-like in its thinking.

How LLMs (Like GPT-4) Are Used in Penetration Testing

Modern penetration testing involves more than running a scanner and dumping a report. Skilled testers chain together multiple steps – from reconnaissance to exploitation to post-exploitation – often improvising based on what they find. LLMs are proving adept at assisting (or even autonomously performing) several of these phases. Here are some of the key roles GPT-driven AI plays in the pentesting process:

1. Path Reasoning: Connecting the Dots Between Vulnerabilities

One of the most powerful abilities of an AI like GPT-4 is to plan attacks across multiple steps, almost like a human strategist. For example, a typical vulnerability scanner might tell you “Server X is running an outdated service” and separately “User database has weak default credentials.” It’s up to a human pentester to realize these two findings could be combined – log into the database with default creds and pivot to exploit the outdated server for deeper access. LLMs excel at this kind of reasoning. A Pentest GPT can automatically connect the dots into an attack path, identifying that a chain of lower-severity issues, when used together, could lead to a major compromise (e.g. domain admin rights or full application takeover). This “big picture” synthesis is something rule-based tools rarely do, but a GPT model can, by virtue of its contextual understanding. In practice, this means AI-driven pentest tools can provide attack narratives, not just isolated findings. They explain how a minor misconfiguration plus a leaked API key could be escalated into a critical breach, giving developers and security teams much clearer insight into risks.
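To make the "connecting the dots" idea concrete, here is a minimal sketch in Python. It is not how any particular product works: it assumes each finding can be described by the capabilities it requires and the capabilities it grants, and it uses plain forward chaining where a real system would rely on an LLM's judgment. The `Finding` class, the capability labels, and `find_attack_path` are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """One isolated scanner finding, modeled as a step an attacker could take."""
    name: str
    requires: frozenset  # capabilities the attacker must already have
    grants: frozenset    # capabilities gained by using this finding


def find_attack_path(findings, start, goal):
    """Forward-chain usable findings until `goal` is reached.

    Returns the ordered list of finding names, or None if the goal is
    unreachable. The chain is not guaranteed to be the shortest one.
    """
    have = set(start)
    remaining = list(findings)
    path = []
    progressed = True
    while goal not in have and progressed:
        progressed = False
        for f in remaining:
            # Usable now, and it would give the attacker something new.
            if f.requires <= have and not f.grants <= have:
                have |= f.grants
                path.append(f.name)
                remaining.remove(f)
                progressed = True
                break
    return path if goal in have else None


# The two findings from the example above, with made-up capability labels.
findings = [
    Finding("outdated service on Server X",
            frozenset({"database access"}), frozenset({"server shell"})),
    Finding("weak default database credentials",
            frozenset({"network reach"}), frozenset({"database access"})),
]

path = find_attack_path(findings, {"network reach"}, "server shell")
print(path)
```

Neither finding is alarming on its own in this toy model, but chained in the right order they reach the goal. That ordering is the "attack narrative" a report can then explain.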

2. Attack Simulation: Crafting and Executing Exploits

LLMs like GPT-4 are also used to simulate attacker actions during a pentest. This goes beyond just pointing out a vulnerability – the AI can help execute the exploitation steps (in a controlled manner). For instance, if the system suspects an SQL injection in a web form, an AI agent can generate a tailored payload for that specific form and attempt to retrieve data. If it finds a command injection, it can attempt to spawn a shell or extract sensitive info, just as a human would. The model can draw on its training (which includes tons of exploit examples) to craft input strings or HTTP requests on the fly. This ability to adapt and create attack payloads saves a lot of manual scripting. It essentially lets the AI act as an exploit developer and operator. Just as importantly, a good Pentest GPT will validate the exploit’s effect – for example, confirming that the SQLi actually dumped the database or that the command injection gives remote code execution – rather than blindly trusting its first attempt. In Aikido’s platform, for example, once an agent discovers a potential issue, it automatically runs additional checks and payloads to prove the finding is exploitable, ensuring the result isn’t a false alarm. This kind of AI-driven attack simulation brings automated testing much closer to what a creative human attacker would do: try something, see the response, adjust tactics, and pivot to the next step.
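The validation step described above can be sketched as a simple gate: a suspected issue is reported only if an independent re-check reproduces it every time. This is an illustrative sketch, not Aikido's implementation. The `Candidate` class, the `recheck` callable, and the choice of three attempts are assumptions, and the re-checks here are stubs rather than real requests against a target.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Candidate:
    """A suspected issue plus a callable that re-tests it."""
    title: str
    recheck: Callable[[], bool]  # hypothetical: True if the effect was observed again
    evidence: List[str] = field(default_factory=list)


def validate(candidate: Candidate, attempts: int = 3) -> bool:
    """Confirm a finding only if every re-check reproduces it."""
    for i in range(attempts):
        ok = candidate.recheck()
        outcome = "reproduced" if ok else "not reproduced"
        candidate.evidence.append(f"attempt {i + 1}: {outcome}")
        if not ok:
            return False  # one failed reproduction is enough to drop it
    return True


# Stubbed re-checks: one stable finding, one that fails on the second try.
stable = Candidate("SQL injection in search form", recheck=lambda: True)
responses = iter([True, False, True])
flaky = Candidate("suspected command injection", recheck=lambda: next(responses))

confirmed = [c.title for c in (stable, flaky) if validate(c)]
print(confirmed)
print(flaky.evidence)
```

Keeping the per-attempt evidence alongside the verdict is a deliberate choice: it lets a human reviewer see why a candidate was dropped instead of having it silently disappear.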

3. Chaining Steps: Adaptive Multi-Stage Attacks

Pentesting is rarely a single-step affair; it’s a chain of actions and reactions. LLMs are being used to orchestrate multi-stage attack chains in an adaptive way. Consider a scenario: an AI agent starts with reconnaissance, finds some open ports and a leaked credential, then uses GPT-powered logic to decide the next move – maybe use the credential to log in, then run a privilege escalation exploit on the target system, and so on. Unlike traditional tools that follow a fixed script, an LLM-guided system can make decisions on the fly. If one avenue is blocked (say a login fails or a service isn’t exploitable), it can dynamically change course and try a different path, much like a human would. Researchers describe this as an “agentic” approach: multiple AI agents handle different tasks (recon, vuln scanning, exploitation, etc.) and pass information to each other, coordinated by the LLM’s reasoning. The result is an automated pentest that maintains context across steps and learns as it goes. For example, early-stage findings (like a list of user roles or an API schema) can inform later attacks (like testing role-based access control). GPT-4’s natural language reasoning helps here by interpreting unstructured data (docs, error messages) and incorporating that knowledge into subsequent exploits. This chaining ability was traditionally a human-only domain. Now, AI agents can handle many of these logical transitions: recon → exploit → post-exploit → cleanup, chaining multiple techniques to achieve a goal. It’s not foolproof, of course – complex business logic or novel attack paths can still trip up an AI – but it’s a big leap in capability. Notably, this is how Aikido’s AI pentest operates: dozens or hundreds of agents swarm the target in parallel, each focusing on different angles, and the system uses an LLM-driven brain to coordinate their progress through a kill-chain (discovery, exploitation, privilege escalation, etc.). The outcome is a far more thorough exercise where the AI can escalate findings step by step, instead of stopping at a laundry list of separate issues.
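The "change course when a path is blocked" behavior can be illustrated with a small orchestration loop. This is a sketch under heavy simplification: the stages and their fallback options are hard-coded here, whereas in an LLM-guided system the model would pick the next option based on what it has seen. All function names are hypothetical, and every action is a stub that only edits a shared context dictionary.

```python
from typing import Callable, List, Tuple

# An action reads and updates the shared context and returns True on success.
Action = Callable[[dict], bool]
Stage = Tuple[str, List[Tuple[str, Action]]]


def run_chain(stages: List[Stage], context: dict) -> List[str]:
    """Walk stages in order; within a stage, try options until one succeeds."""
    log = []
    for stage, options in stages:
        for label, action in options:
            if action(context):
                log.append(f"{stage}: {label} succeeded")
                break
            log.append(f"{stage}: {label} failed")
        else:
            # Every option in this stage failed, so the chain ends here.
            log.append(f"{stage}: no option worked, stopping")
            break
    return log


def discover(ctx):
    ctx["leaked_credential"] = True  # pretend recon turned one up
    return True


def login_with_credential(ctx):
    return False  # simulate a blocked path


def use_open_endpoint(ctx):
    ctx["session"] = "low-privilege"
    return True


def escalate(ctx):
    # Context from an earlier stage informs this later one.
    return ctx.get("session") == "low-privilege"


stages = [
    ("discovery", [("recon", discover)]),
    ("exploitation", [("login with leaked credential", login_with_credential),
                      ("unauthenticated endpoint", use_open_endpoint)]),
    ("privilege escalation", [("escalate from session", escalate)]),
]

log = run_chain(stages, {})
print("\n".join(log))
```

The shared `context` dictionary is what lets a later stage build on an earlier one, which is the same role the text assigns to the LLM maintaining context across steps.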

Limits of GPT-Powered Pentesting: Hallucinations, Context Gaps, and the Human Factor

With all the excitement around AI pentesting, it’s important to address the limits and why humans aren’t out of the loop yet. LLMs are powerful, but they have well-documented weaknesses that matter in a security context. Here are some key limitations of “GPT-powered” pentests and why seasoned human experts still play a crucial role:

- Hallucinations and False Positives: GPT models sometimes produce information that seems plausible but is incorrect – a phenomenon known as hallucination. In pentesting, this could mean an AI incorrectly flags a vulnerability that isn’t actually there or misinterprets benign behavior as malicious. For example, a GPT might concoct a fictitious “CVE-2025-9999” based on patterns it’s seen, or mistakenly conclude a system is vulnerable because it expects a certain response. These false positives can waste time and erode trust in the tool. Rigorous validation is needed to counter this. (In Aikido’s system, for instance, no finding is reported until it’s validated by a real exploit or check – the platform will rerun the attack or test payload automatically to make sure the issue is reproducible.) This kind of safeguard is essential because an LLM, left unchecked, might otherwise talk itself into finding ghosts.

- Lack of Deep Context or Fresh Knowledge: An LLM’s knowledge is bounded by its training data. If the model hasn’t been updated recently, it might miss newly disclosed vulnerabilities or techniques – e.g., an exploit published last month won’t be known to a model trained last year. Moreover, GPTs don’t inherently know your specific application’s context. They don’t have the intuition or familiarity a human tester might develop after manually exploring an app for days. If not provided with sufficient context (like source code, documentation, or authentication credentials), an AI agent might overlook subtle logic flaws or misunderstand the importance of certain findings. Essentially, GPT has breadth of security knowledge but not innate depth about your environment. Providing the AI with more context (like connecting repository code or user flow descriptions) can mitigate this, but there’s still a gap between knowing a lot of general info and truly understanding a bespoke target system. This is one reason human judgment is still crucial – a skilled tester can spot oddities or higher-level business logic issues that an AI not tuned into the business might not grasp. (That said, interestingly, when Aikido’s AI is given code and context, it has even uncovered complex logic vulnerabilities like multi-step workflow bypasses that human testers missed. Context is king for both AI and humans.)

- Over-Reliance on Patterns: AI pentest tools tend to lean on known attack patterns and playbooks. If something falls outside those patterns, the AI could struggle. For instance, a novel security mechanism or an unusual cryptographic implementation might confuse the model, whereas a human might investigate it creatively. GPT-4 can certainly generalize and even be creative, but at the end of the day it follows the statistical patterns in its training. This means edge-case vulnerabilities or highly application-specific flaws (think abuse of an application feature in a way no one has written about publicly) are harder for it to find. Humans, with their intuition and ability to handle ambiguity, still shine in uncovering those weird, one-off issues.

- Ethical and Scope Constraints: A practical consideration – GPT models won’t inherently know the ethical boundaries or scope limitations of a pentest unless explicitly controlled. A human pentester is cognizant of not disrupting production, avoiding data destruction, etc. An autonomous agent might need strict guardrails (and indeed, good platforms provide safe-mode settings and scope definitions to keep AI agents on target and non-destructive). While this is more a platform design issue than a flaw in GPT itself, it underscores that human oversight is needed to ensure the AI operates safely and within agreed rules of engagement.

- The Need for Human Interpretation and Guidance: Finally, even when the AI does everything right, you often need a human to interpret results for strategic decisions. For example, deciding which vulnerabilities truly matter for the business or brainstorming how an attacker might exploit a finding beyond what was automatically done might require a human’s touch. There’s also the aspect of trust – many organizations want a human security expert to review an AI-generated pentest report, both to double-check it and to translate it into business terms where needed. AI can generate a lot of data; human expertise is needed to prioritize and plan remediation in a broader security program context.
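The hallucinated-CVE example from the list above suggests one cheap, deterministic guardrail: before a model-cited CVE ID reaches a report, check that it is well-formed and that it appears in a feed you trust. The sketch below assumes you have already loaded such a feed into a set (for example from an NVD export). The `known_ids` contents and the function name are illustrative only, and the tiny set here stands in for a real feed.

```python
import re

# CVE IDs look like CVE-<4-digit year>-<4 or more digits>.
CVE_ID = re.compile(r"^CVE-\d{4}-\d{4,}$")


def check_cve_reference(cve_id: str, known_ids: set) -> str:
    """Classify a CVE ID cited by the model before it is reported."""
    if not CVE_ID.match(cve_id):
        return "malformed"
    if cve_id not in known_ids:
        return "unverified"  # well-formed, but absent from the trusted feed
    return "known"


# Stand-in for a trusted feed; a real one would hold far more entries.
known_ids = {"CVE-2021-44228"}

for cited in ("CVE-2021-44228", "CVE-2025-9999", "CVE-99-1"):
    print(cited, "->", check_cve_reference(cited, known_ids))
```

An "unverified" result does not prove the ID is invented, since the feed may simply be stale. It is a signal to route the finding to validation or human review rather than publish it as-is.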

In summary, GPT-powered pentesting is a force multiplier rather than a replacement for humans. It can handle the heavy lifting of routine attacks, cover more ground, and do it continuously. But humans still set the goals, handle the novel cases, and provide critical judgment on risk. As one observation put it, the best results come when GPT is paired with deterministic tools and human oversight – the AI does reasoning and reporting, while tools and people ensure reliable validation. Most teams adopting AI pentesting use it as a foundational layer and then add human review for the final mile. That way, you get the efficiency of AI and the wisdom of human experts working in tandem.

Aikido’s Approach: Continuous AI Pentesting with a Human Touch

At Aikido Security, we’ve embraced AI in pentesting through our platform (called Aikido “Attack”), but we’ve done so with careful attention to the above limits. The goal is to leverage LLMs for what they do best – speed, scale, and reasoning – while mitigating their weaknesses. Here’s how Aikido’s AI-driven pentests differ from a basic “Pentest GPT” script or traditional automated tools:

- Continuous, On-Demand Testing (CI/CD Integration): Aikido enables you to run penetration tests whenever you need – even on every code change. Instead of a yearly big-bang pentest, you can integrate AI-driven security testing into your CI/CD pipeline or staging deployments. This means new features or fixes get tested immediately, and security becomes a continuous process rather than a one-off event. Our platform is built for developers’ workflows, so you can trigger a pentest on a pull request or schedule nightly runs. By the time your app goes to production, it’s already been through an AI-powered gauntlet of tests. This continuous approach addresses the speed gap where code often changes daily but manual pentests happen rarely. With AI agents, the testing keeps up with development.

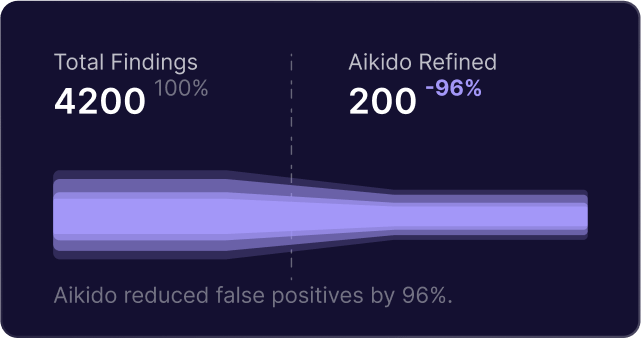

- Validated, Noise-Free Results: We recognize that an AI’s output needs verification. Aikido’s pentest engine has built-in validation at every step. When the AI suspects a vulnerability, it doesn’t report it immediately – it launches a secondary validation agent to reproduce the exploit in a clean way and confirm the impact. Only proven, exploitable issues make it into the final report. This design means you get virtually zero false positives (if an issue isn’t confirmed, it’s not reported) and our system actively guards against AI hallucinations about vulnerabilities. The result is that developers aren’t bombarded with “possible issue” alerts or speculative findings – they see actual confirmed security holes with evidence. This approach marries the creativity of GPT with the caution of a human tester: every finding is essentially double-checked, so you can trust the output.

- Full Visibility and Developer-Friendly Output: Aikido’s AI pentest doesn’t operate as a black box. We give you full visibility into what the AI agents are doing – every request, payload, and attack attempt can be observed live in our dashboard. This is crucial for developer trust and learning. You can see why a vulnerability was flagged and how it was exploited, down to request/response traces and even screenshots of the attack in progress. The final results come in an audit-ready report that includes all the technical details (affected endpoints, reproduction steps, timestamps) as well as plain-language risk descriptions and remediation guidance. We aim to make the output developer-friendly: instead of a vague “Vulnerability in module X” you get a clear explanation of the issue, how to reproduce it, and how to fix it. We even go a step further – our platform includes an AutoFix feature that can take certain findings (like a detected SQL injection or command injection) and automatically generate a Git pull request with the proposed code changes to fix the issue. Developers can review this AI-generated fix, merge it, and then have Aikido retest the application immediately to verify the vulnerability is resolved. This tight find→fix→retest loop means faster remediation and less back-and-forth. All of this is done in a way that developers can easily digest, avoiding security jargon or endless raw scanner output. It’s about making the pentest results actionable.

- Human Expertise in the Loop: While our pentesting agents operate autonomously, we haven’t removed the human element – we’ve augmented it. First, the system itself was trained and fine-tuned with input from senior penetration testers, encoding their workflows and knowledge. But beyond that, we encourage and support human validation where it counts. Many Aikido customers use the AI findings as a baseline and then have their security team or an Aikido analyst do a quick review, especially for critical applications. Our experience has shown that the AI will catch the vast majority of technical issues (and even many tricky logic flaws) on its own. Yet, we know security is ultimately about defense-in-depth – so a human sanity check can add assurance, and we make it easy to collaborate around the AI’s results. Moreover, if the AI run finds nothing critical (which is great news), organizations have peace of mind with our policy that “Zero Findings = Zero Cost” for certain engagements. This guarantee reflects our confidence in the AI’s thoroughness, but also ensures that if a human later finds something the AI missed, you haven’t paid for an incomplete test. In short, Aikido’s approach combines AI automation with human oversight options to deliver the best of both worlds.

- Safety and Scope Control: Aikido Attack was built with enterprise needs in mind, so we added robust controls to keep the AI on track. Before an AI pentest runs, you define the exact scope: which domains or IPs are allowed targets, which are off-limits (but may be accessed in read-only ways), authentication details, and even time windows for testing. The platform enforces these with an integrated proxy and “pre-flight” checks – if something’s misconfigured or out of scope, the test won’t proceed, preventing accidents. There’s also an instant “panic button” to halt testing immediately if needed. These measures ensure that an autonomous test never goes rogue and only performs safe, agreed-upon actions, much like a diligent human pentester would. For more on how we protect your environment, see Aikido’s security architecture.
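To show what a "pre-flight" scope check might look like, here is a minimal sketch. It is not Aikido's proxy or configuration format: the `Scope` fields, the `preflight` function, and the example hostnames are assumptions made up for illustration. It also simplifies the rules by treating off-limits hosts as fully blocked and by assuming the time window does not cross midnight.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Tuple
from urllib.parse import urlsplit


@dataclass(frozen=True)
class Scope:
    """Hypothetical rules of engagement for one test run."""
    allowed_hosts: frozenset
    blocked_hosts: frozenset
    window_start: time  # assumes start <= end on the same day
    window_end: time


def preflight(url: str, when: datetime, scope: Scope) -> Tuple[bool, str]:
    """Decide whether a request may be sent, and say why not if it may not."""
    host = urlsplit(url).hostname or ""
    if host in scope.blocked_hosts:
        return False, f"{host} is off-limits"
    if host not in scope.allowed_hosts:
        return False, f"{host} is not in scope"
    if not (scope.window_start <= when.time() <= scope.window_end):
        return False, "outside the agreed testing window"
    return True, "ok"


scope = Scope(
    allowed_hosts=frozenset({"staging.example.com"}),
    blocked_hosts=frozenset({"payments.example.com"}),
    window_start=time(1, 0),
    window_end=time(5, 0),
)

night = datetime(2024, 1, 10, 2, 30)
print(preflight("https://staging.example.com/login", night, scope))
print(preflight("https://payments.example.com/", night, scope))
print(preflight("https://unknown.example.org/", night, scope))
```

The check is default-deny: a host that appears in neither list is rejected. That is the safer failure mode for an autonomous agent that might follow a redirect or a link it discovered mid-test.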

Overall, Aikido’s AI-driven pentest solution addresses the promise of Pentest GPT while solving its pitfalls. You get continuous, intelligent pentesting that can pivot and reason through attacks like a human, without the typical wait or cost of manual testing. At the same time, you don’t get the usual noise of automation – every finding is real and comes with context. And you still have the option (and we encourage it) to involve security engineers for final validation or to handle the edge cases, ensuring nothing slips through. It’s a balanced, pragmatic application of AI: use the machine for what it does best (speed, scale, pattern recognition) and let humans do what they do best (creative thinking and big-picture judgment). The end result is a pentest process that is faster and more frequent, but also thorough and reliable.

From Hype to Reality: Try AI-Powered Pentesting Yourself

Explore external resources and tools referenced in this approach:

- Nmap – Open-source network mapper used for reconnaissance.

- Burp Suite – Web vulnerability scanner and proxy used in many AI-driven pentests.

- Metasploit – Penetration testing framework for exploit development and execution.

- CVE Database (NVD) – The National Vulnerability Database for tracking security flaws.

- PentestGPT GitHub – Open-source Pentest GPT research project.

- AutoPentest-GPT Project – Automated pentesting framework built on GPT technology.

To see how you can bring continuous and intelligent pentesting to your organization, get started with Aikido in 5 minutes or read customer success stories about organizations already reducing risk with AI.

For more educational content on secure development, DevSecOps, and AI in security, browse the Aikido Blog.

AI won’t replace human security experts – but it will make their work more efficient and help organizations secure software at a pace that matches modern development. LLMs are proving they can take on much of the grunt work of penetration testing, from scouring an app for weaknesses to writing exploits and compiling reports. As we’ve discussed, the term Pentest GPT signifies this new breed of tools that fuse AI reasoning with hacking know-how. It’s not just hype; it’s already reshaping how pentests are done, turning a once-yearly ordeal into a continuous, developer-friendly practice.

If you’re curious about seeing this in action, why not give Aikido’s AI Pentest a try? We offer a way to run a free, self-serve pentest on your own application to experience how autonomous agents work alongside your development cycle. Within minutes, you can configure a test and watch AI agents systematically probe your app – with full transparency and control. You’ll get a detailed report in hours (not weeks), and you can even integrate it into your CI pipeline so that each new release is tested automatically. It’s a chance to witness how Pentest GPT concepts – path reasoning, smart exploitation, result validation – come together in a real product.

Pro tip: You can start an AI-driven pentest on Aikido for free (no credit card required) or learn more on our website about how it works. We’re confident that once you see the AI find and fix vulnerabilities at machine speed, you’ll agree this is a calmer, smarter way to keep your software safe.

In summary, AI is here to stay in cybersecurity. The key is using it wisely. Pentest GPT, as a concept, is about augmenting human expertise with AI’s incredible capabilities – not replacing that expertise. Aikido’s mission is to deliver continuous AI-driven pentests with human validation built in, so you can catch issues early, often, and with confidence. As the industry evolves, those who combine the efficiency of AI with the ingenuity of human intelligence will be best positioned to secure their systems against the ever-changing threat landscape. The future of pentesting is being written now – and it’s being written in part by GPT-4.

(Interested in taking the next step? You can get started with an AI pentest in 5 minutes on Aikido’s platform or schedule a demo to see how it fits into your DevSecOps workflow. Security testing doesn’t have to be slow or siloed – with the right AI tools, it becomes a continuous part of development, empowering your team to build and ship software with peace of mind.)

