Cloud security isn’t new. In fact, it’s been around for a good number of years now.

However, with each passing year, the cloud becomes more complex, and with that complexity comes new risks.

Ten years ago, the cloud was just about storage and compute. But today, APIs, identities, AI workloads, and entire businesses are built in the cloud. This just shows that the attack surface is never shrinking; it just keeps growing.

Yet, despite this, many organizations still treat cloud security like a checklist. It goes something like this: Encrypt this. Add MFA. Run a scan once in a while.

In reality, you know as well as I do that all it takes is one misconfiguration slipping through. One case of permission sprawl. One instance of shadow IT creeping in. And that's all an attacker needs to find a gap.

One way to make sure you don’t fall into that trap is to follow cloud security best practices. Not just as a cliché, but as a foundational approach to building and maintaining a secure cloud environment.

In this article, you will learn 25 cloud security best practices every organization should follow to help you stay ahead of threats and protect your data, applications, and users.

But first, let’s understand why cloud security is both important and challenging.

Why is Cloud Security Important and Challenging?

The move from on-prem to the cloud changed everything. Suddenly, teams could get the hardware they needed in minutes instead of months, and with almost no upfront cost. Although that speed unlocked innovation, it also unlocked new risks.

Back in the on-prem days, security teams had tight control over physical servers, networks, and who got access. In the cloud, however, that control is shared.

Engineers can spin up resources with a few clicks, and workloads run across regions, accounts, and even providers. That kind of democratization is powerful, but it also means the attack surface is wider than ever. And remember: cloud security runs on the shared responsibility model.

The real challenge emerges from two things: scale and complexity. You’re no longer securing one fixed environment. At scale, you’re securing hundreds of ephemeral containers, serverless functions, and services, all spinning up and shutting down by the minute.

Now, when you layer in compliance requirements, multi-cloud setups, and the constant pressure to ship faster, it’s not hard to see why cloud security is both crucial and difficult to get right.

Cloud Security Governance & Responsibility Best Practices

When it comes to cloud security, one of your biggest enemies is confusion. If no one knows who owns what, gaps start to appear. That’s why governance and responsibility are the first best practices to get right.

1. Understand the Shared Responsibility Model Beyond the Cloud Provider

The shared responsibility model isn’t one-size-fits-all; it depends on the type of cloud environment you’re using. For example, an organization running an IaaS setup has far more responsibility than a six-month-old AI startup building on FaaS.

The image below, by the Center for Internet Security (CIS), illustrates the level of responsibility a cloud customer has.

Beyond the above image, it's important to remember that security incidents do not happen in isolation. Which means cloud security isn’t just “the security team’s job.” It’s a collective effort across engineering, operations, and leadership.

So with that in mind, how does one embrace this shared responsibility model without it turning into a blame game?

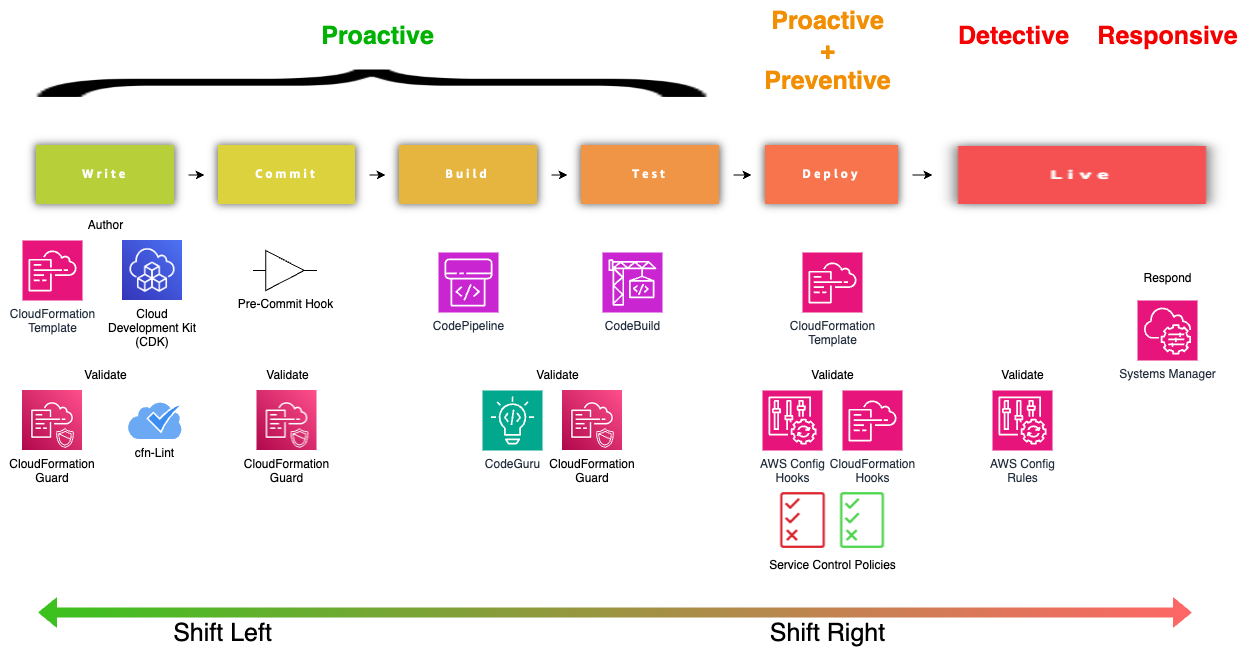

The answer is...drumrolls…“Shift Left”.

Maybe you guessed it, maybe you didn’t. Regardless, “Shift Left” is more than a buzzword. The code developers write, the infrastructure, and everything in between are all potential attack vectors, and a malicious actor makes no distinction between them.

So, instead of worrying about security only at runtime, you should start worrying from the first line of code.

When incidents do happen at any stage of the software development lifecycle (SDLC), the SRE practice of blameless postmortems helps you learn from the failure.

Best practices to adopt:

- Learn your cloud provider’s specific responsibilities, and understand what you own in your type of cloud environment.

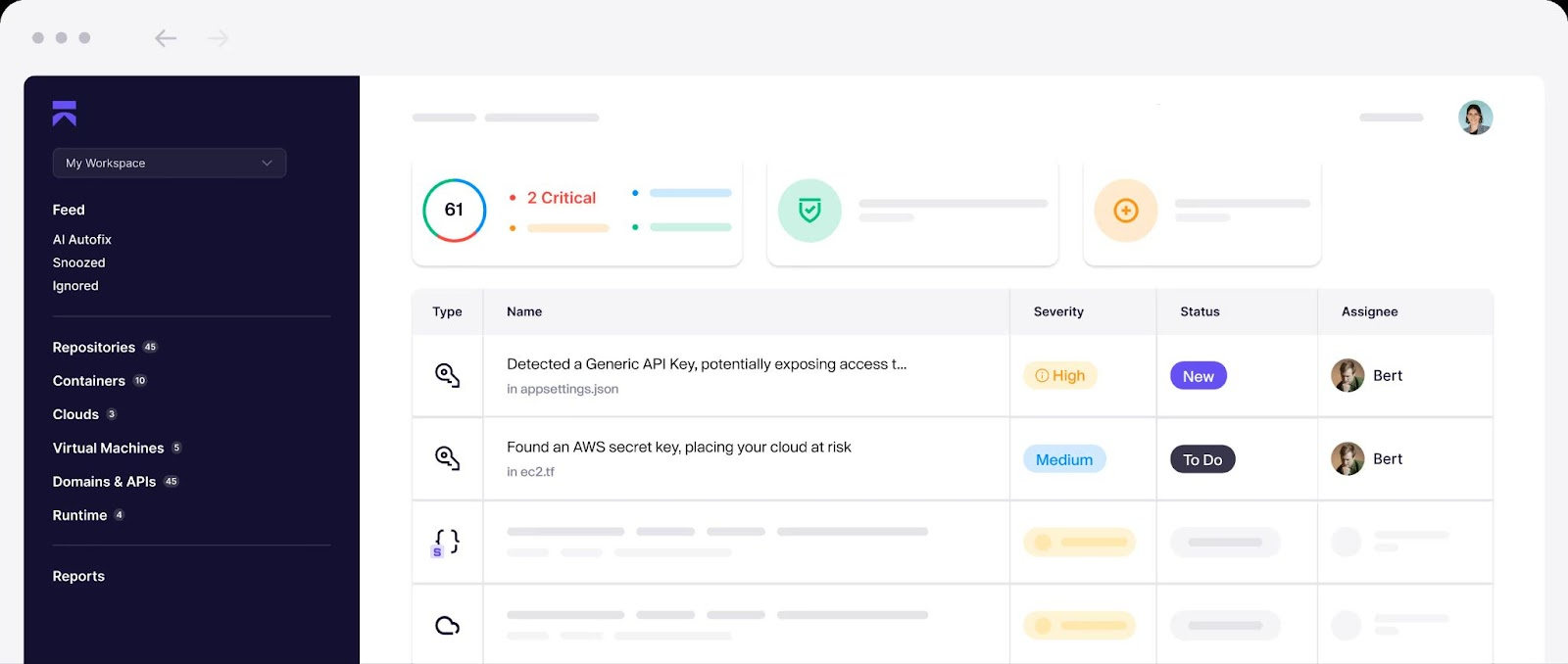

- Shift security left by equipping developers with developer-first security tooling that integrates directly into their workflow and protects against anti-patterns, vulnerable dependencies, and hardcoded secrets that slip into version control systems.

- Use the idea of blameless postmortems to learn from incidents without finger-pointing.

- Enforce guardrails with policy-as-code tools. Don’t worry, we will cover these recommendations in more depth later in this article.

2. Integrate Security with Compliance Mandates

You can’t mention governance without its compatriot: compliance. The two walk hand-in-hand. Governance sets the rules of the game, and compliance makes sure you’re playing by them.

But the problem here is that many organizations treat compliance as a paperwork exercise. Maybe yours does too.

Pass the audit, get the badge, and move on.

That mindset is dangerous. Compliance frameworks like GDPR or PCI DSS aren’t just hoops to jump through; they’re guardrails designed to protect sensitive data and reduce risk. When done right, they raise your baseline security posture. When done wrong, they’re wasted effort.

Best practices to adopt:

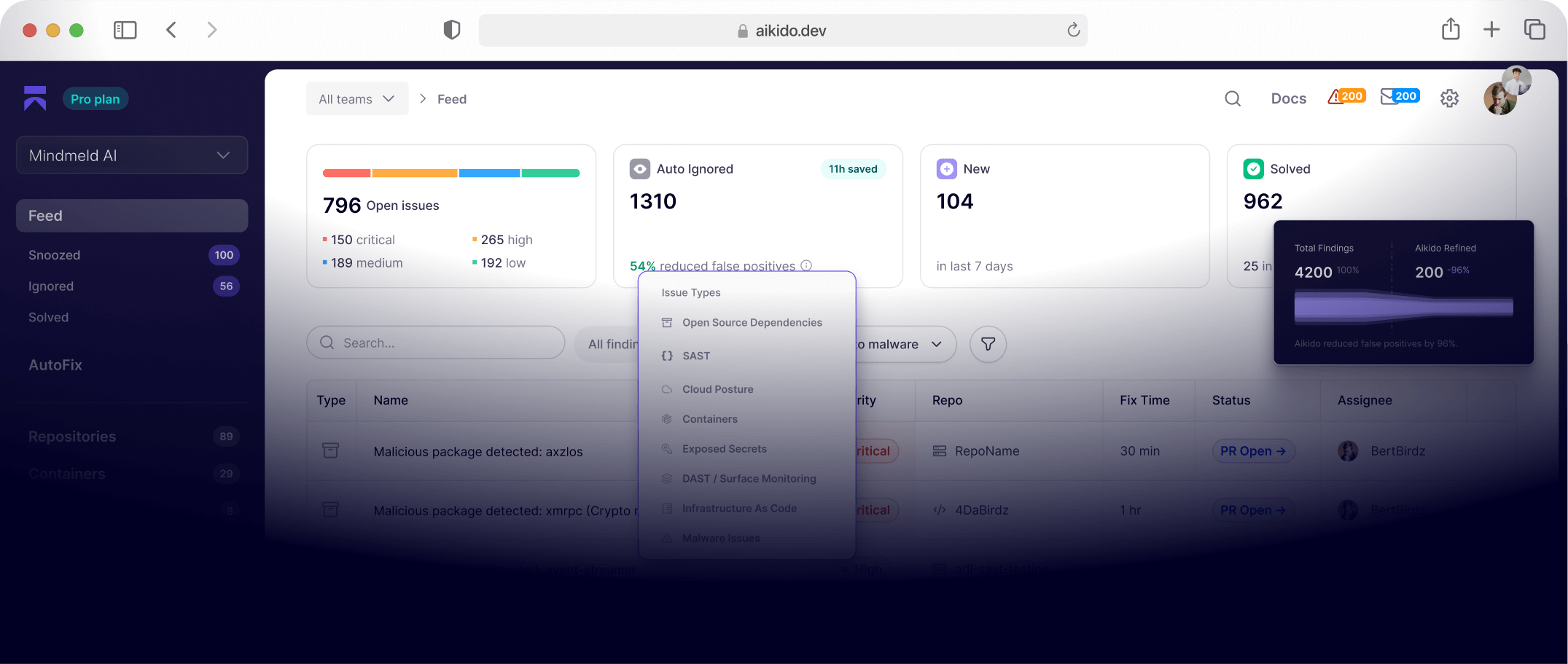

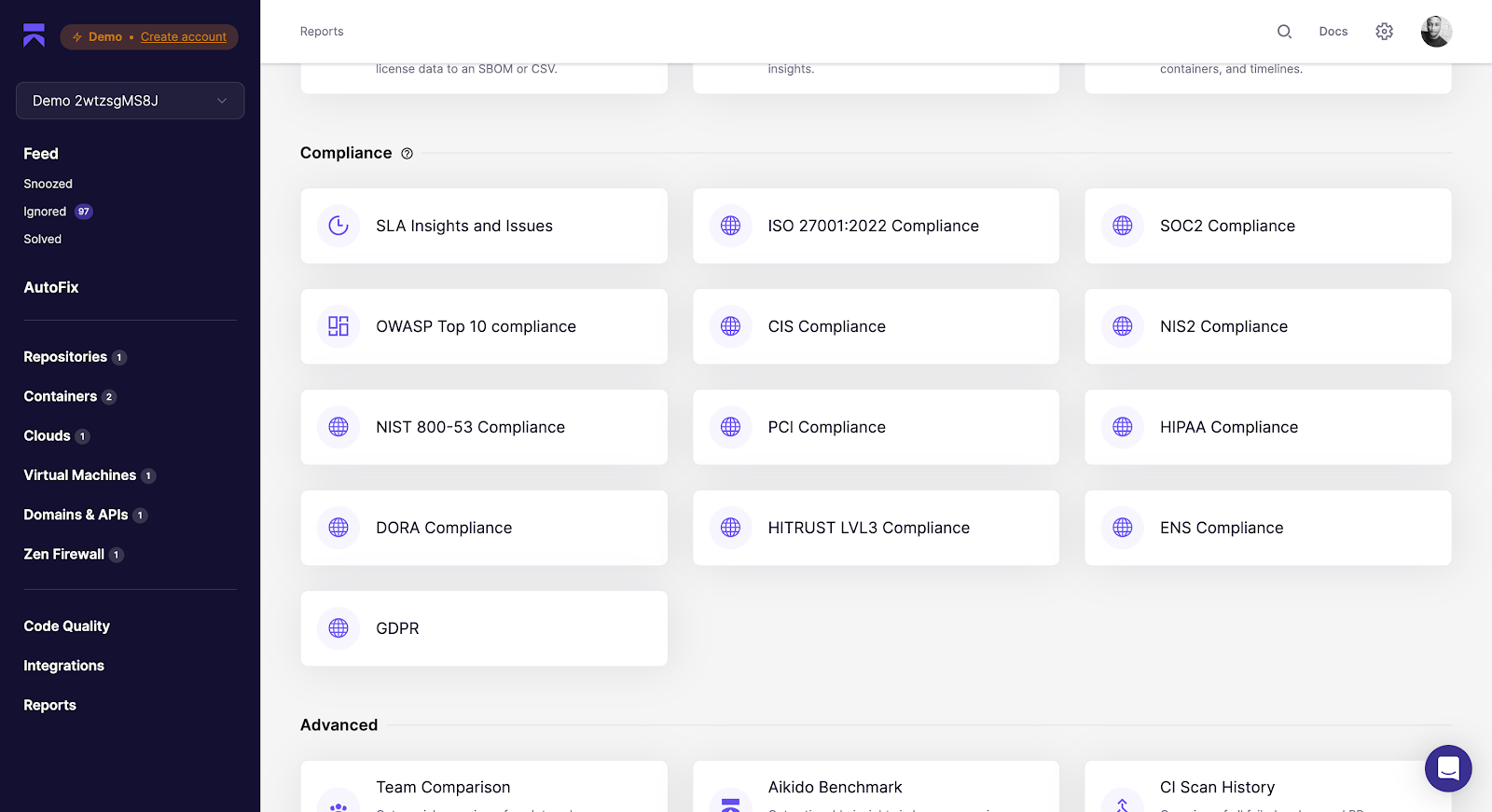

The key is to weave compliance into everyday workflows, using the same tools you already use to secure your infrastructure. Some solutions help by automating code and cloud security controls for ISO 27001, SOC 2 Type 2, PCI, DORA, NIS2, HIPAA, and more.

Cloud Security Identity & Access Management Best Practices

With all the governance best practices covered, let's move on to one of the most challenging aspects of cloud security, which is managing identities and access.

3. Follow the Principle of Least Privilege

The first step to effectively managing identity access is to make sure every identity has access to what they need and nothing more. That is what the Principle of Least Privilege (PoLP) encourages.

It’s important to note that PoLP should also apply to non-human identities such as APIs, service accounts, containers, and serverless functions, which often run with overly broad permissions for convenience.

If compromised, those permissions can be abused just as easily as a human admin account. By applying PoLP to both humans and workloads, you shrink the blast radius of any potential breach.

Best practices to adopt:

- Default to “deny all,” then grant only the minimum actions required.

- Replace wildcards (s3:*) with explicit actions (e.g., s3:GetObject, s3:PutObject).

- Regularly audit and strip out unused permissions from IAM roles and service accounts. For example, instead of giving a Lambda function AmazonS3FullAccess, attach a custom IAM policy like:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["s3:GetObject", "s3:PutObject"],
      "Resource": "arn:aws:s3:::my-app-bucket/*"
    }
  ]
}
```

This ensures the function can only read and write objects in the one bucket it actually needs, nothing else.
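To make the wildcard check above repeatable, here is a minimal sketch of an audit helper. The function name `find_wildcard_statements` and the two sample policies are hypothetical; it only inspects the `Action` field of `Allow` statements and is not a substitute for a full IAM analyzer.

```python
def find_wildcard_statements(policy: dict) -> list[dict]:
    """Return Allow statements whose Action list contains a wildcard."""
    flagged = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # IAM permits a single statement object
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):  # Action may be a string or a list
            actions = [actions]
        # "*" and "service:*" both grant more than an explicit action list
        if any(action == "*" or action.endswith(":*") for action in actions):
            flagged.append(statement)
    return flagged


# Hypothetical policies for illustration
broad = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": "s3:*", "Resource": "*"}],
}
scoped = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::my-app-bucket/*",
        }
    ],
}

print(len(find_wildcard_statements(broad)))   # 1
print(len(find_wildcard_statements(scoped)))  # 0
```

A check like this could run in CI against policy files before they are applied, so broad grants are caught in review rather than in production.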

4. Use Multi-Factor Authentication (MFA)

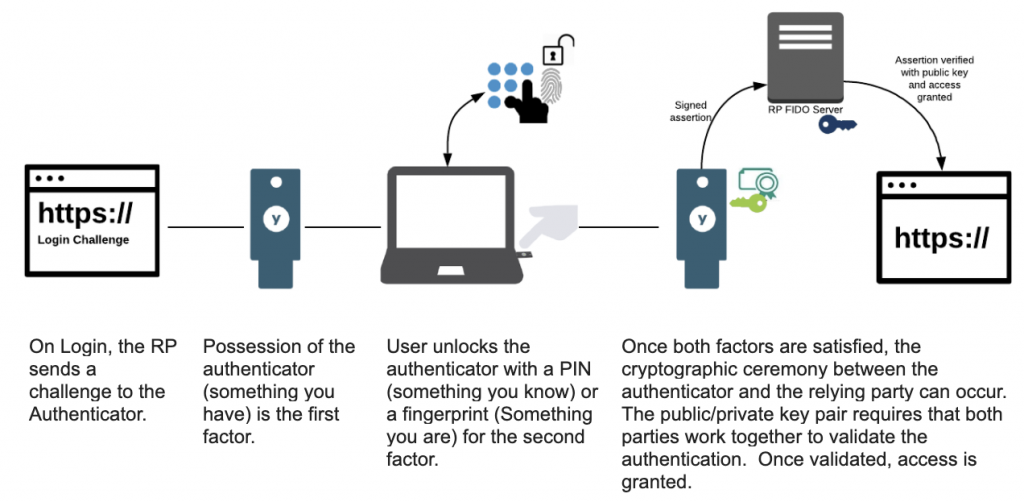

With every identity having only the access they need, it is still important to implement multi-factor authentication, especially for admin and critical accounts. MFA adds a second layer of protection beyond credentials.

Many MFA setups today still rely on codes sent by email. Although these add an extra layer of security as intended, they are prone to phishing.

Best practices to adopt:

- When implementing MFA, choose phishing-resistant options such as hardware security keys (for example, YubiKeys). Because the key is a physical device, an attacker would have to steal it to get in.

- Integrate hardware keys with your SSO provider (Okta, Microsoft Entra ID (formerly Azure AD), Google Workspace) for seamless adoption.

- Require multi-factor authentication for high-risk actions (e.g., accessing production environment, modifying IAM policies).

- Regularly audit MFA enrollment to ensure all accounts (including contractors) are covered, and update enrollment as the business context changes.

5. Go Beyond RBAC with Policies

RBAC and regular IAM tools alone don’t solve challenges with managing identities in cloud-native environments, whether in the cloud, cluster, container, or code layer, especially for non-human identities like service accounts, API keys, certificates, and secrets.

Good cloud-native security practices require you to use Policy as Code (PaC) to enforce dynamic, fine-grained permissions tailored to specific scenarios.

Together, they create a layered access strategy:

- RBAC defines the who and what.

- PaC defines the when, how, and under what specific conditions.

For example, an engineer with the Platform Admin role (RBAC) can deploy to staging. But a PaC rule blocks deployment if the image isn’t scanned, the branch isn’t signed, or it’s outside business hours.

This combination enforces least privilege at scale, prevents missteps, and makes security repeatable, testable, and auditable.
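The layering can be sketched in a few lines of Python. This is an illustration only, not how OPA, Kyverno, or any cloud provider evaluates policy: the `ROLE_PERMISSIONS` table, the `DeployRequest` fields, and the business-hours window (Monday to Friday, 09:00 to 16:59) are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical RBAC table: which actions each role may perform
ROLE_PERMISSIONS = {"platform-admin": {"deploy:staging"}}


@dataclass
class DeployRequest:
    role: str
    action: str
    image_scanned: bool
    branch_signed: bool
    requested_at: datetime


def evaluate(request: DeployRequest) -> tuple[bool, list[str]]:
    """RBAC decides who may act; the policy rules decide under which conditions."""
    if request.action not in ROLE_PERMISSIONS.get(request.role, set()):
        return False, ["role lacks permission"]
    violations = []
    if not request.image_scanned:
        violations.append("image not scanned")
    if not request.branch_signed:
        violations.append("branch not signed")
    # Assumed business hours: Monday to Friday, 09:00 to 16:59
    in_hours = request.requested_at.weekday() < 5 and 9 <= request.requested_at.hour < 17
    if not in_hours:
        violations.append("outside business hours")
    return not violations, violations


ok = DeployRequest("platform-admin", "deploy:staging", True, True, datetime(2025, 1, 6, 10, 0))
late = DeployRequest("platform-admin", "deploy:staging", False, True, datetime(2025, 1, 6, 22, 0))
print(evaluate(ok))    # (True, [])
print(evaluate(late))  # (False, ['image not scanned', 'outside business hours'])
```

Returning the list of violations, rather than a bare boolean, keeps the decision auditable: the engineer sees exactly which condition blocked the deployment.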

Best practices to adopt:

If you use the AWS cloud, its ecosystem provides tools for proactive, preventive, detective, and responsive policy implementation. You should read this practical guide to getting started with policy as code on AWS.

Other cloud service providers like Azure also offer Policy as Code tooling. You can also consider open source PaC tooling like Open Policy Agent (OPA), which is platform-agnostic, and Kyverno, which is Kubernetes-native.

6. Rotate Keys and Credentials Regularly

Many teams wait until there is a break-in before they change keys. You shouldn’t. You should rotate keys automatically and regularly.

How soon is “regularly”? Monthly, quarterly, or yearly? The CIS AWS Foundations Benchmark recommends every 90 days or less.

In complex environments, shorter rotation intervals are better, especially for non-human identities. Automating rotation and doing it more frequently significantly reduces the risk of a breach if a key is ever compromised because, in most cases, you have little to no visibility into how these identities are used.

Best practices to adopt:

- Replace static credentials with short-lived tokens (e.g., AWS STS, GCP Workload Identity, Azure Managed Identities).

- Store and rotate secrets using a central manager (AWS Secrets Manager, HashiCorp Vault, Azure Key Vault) instead of code or config files.

- Monitor logs (CloudTrail, Audit Logs, Azure Monitor) for unusual credential use after rotation.

- Audit for unused and exposed keys and revoke them immediately. Live secret detection tools help you find any active secrets and their potential risks.
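As a rough illustration of the 90-day rule, the sketch below flags keys that are overdue for rotation. The key names and creation dates are made up, and in practice the inventory would come from your cloud provider's API or a secrets manager rather than a hand-written dictionary.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)  # threshold from the CIS recommendation above


def keys_due_for_rotation(keys: dict[str, datetime], now: datetime) -> list[str]:
    """Return the IDs of keys created more than MAX_KEY_AGE before `now`."""
    return sorted(key_id for key_id, created in keys.items() if now - created > MAX_KEY_AGE)


# Hypothetical inventory of access keys and their creation times
inventory = {
    "ci-deployer": datetime(2025, 1, 15, tzinfo=timezone.utc),
    "billing-api": datetime(2025, 5, 1, tzinfo=timezone.utc),
}
today = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(keys_due_for_rotation(inventory, today))  # ['ci-deployer']
```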

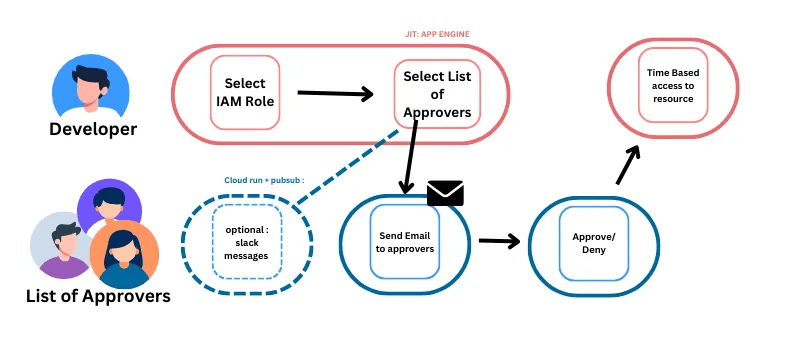

7. Leverage Just-in-Time (JIT) Access

There are times when a cloud user with minimal access would need elevated privileges to get their job done. Instead of elevating that user’s default access, you should implement Just-in-Time (JIT) access to grant temporary privilege elevation instead of standing permissions.

Best practices to adopt:

- Let developers request a role with only the privileges they require, using:

  - Okta Access Requests and AWS IAM Identity Center on AWS

  - Just-in-time machine access on Azure

  - Privileged Access Manager on Google Cloud or other external tooling

- Always set time limits (e.g., 30 minutes, 1 hour, 1 day) for elevated sessions; no open-ended approvals.

- Require MFA re-authentication before granting JIT access.

- Log and monitor all JIT requests and approvals for auditability.
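The mechanics behind these points are simple enough to sketch. The `Grant` class, the `approve` function, and the one-day ceiling below are hypothetical and only show the shape of the logic; real JIT tooling also handles approval workflows, audit logging, and revocation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

MAX_DURATION = timedelta(days=1)  # assumed ceiling; no open-ended approvals


@dataclass(frozen=True)
class Grant:
    user: str
    role: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        return now < self.expires_at


def approve(user: str, role: str, duration: timedelta, mfa_verified: bool, now: datetime) -> Grant:
    """Issue a time-boxed grant; refuse requests without fresh MFA or a bounded duration."""
    if not mfa_verified:
        raise PermissionError("MFA re-authentication required")
    if not timedelta(0) < duration <= MAX_DURATION:
        raise ValueError("duration must be positive and at most one day")
    return Grant(user, role, now + duration)


start = datetime(2025, 1, 6, 10, 0, tzinfo=timezone.utc)
grant = approve("dev-1", "prod-debug", timedelta(minutes=30), mfa_verified=True, now=start)
print(grant.is_active(start + timedelta(minutes=29)))  # True
print(grant.is_active(start + timedelta(minutes=30)))  # False
```

Because the expiry is part of the grant itself, access lapses without anyone remembering to revoke it.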

Cloud Security Data Protection Best Practices

Data is the lifeblood of any business. In fact, if you’re asked to choose between a breach in your servers or a breach in your database, you will choose your servers all day long. That's how valuable your data is to you.

So, how do you secure your data on the cloud?

8. Encrypt Data in Transit and at Rest

Whether in storage or in transit, you should encrypt your cloud data. At rest, use strong, modern encryption such as AES-256, including database features like Transparent Data Encryption (TDE). This ensures that even if an attacker gains access to the underlying storage, the data remains unreadable without the necessary encryption keys.

For data in transit, all communication, including API calls and inter-service traffic, should be secured using TLS (the successor to the now-deprecated SSL). In a zero-trust environment, you should implement mutual TLS (mTLS), as it ensures that the workloads/identities at each end of a network connection are who they claim to be by verifying that they both have the correct private key.

Cloud data encryption hinges on a strong Key Management System (KMS). Your KMS should be a secure, centralized service for generating, storing, managing, and rotating encryption keys.

Best practices to adopt:

- Encrypt all storage volumes, databases, and object storage (e.g., AWS S3 SSE, Azure Storage Service Encryption, GCP CMEK).

- Enforce TLS 1.2+ for all API endpoints and inter-service traffic.

- Implement mTLS for internal microservices to prevent impersonation.

- Centralize key handling with a managed KMS (AWS KMS, Azure Key Vault, GCP KMS, HashiCorp Vault).

- Automate key rotation and monitor for unauthorized key usage.
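At the application level, the TLS 1.2+ and mTLS requirements can be expressed with Python's standard `ssl` module. This is a minimal sketch: the two function names are hypothetical, and certificate loading is left out, so the server context is not ready to accept connections as written.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client-side context that verifies the server and refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()  # certificate and hostname checks are on by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def mtls_server_context() -> ssl.SSLContext:
    """Server-side context that requires every client to present a certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    context.verify_mode = ssl.CERT_REQUIRED
    # A real server would also call context.load_cert_chain(...) for its own
    # certificate and context.load_verify_locations(...) for the client CA.
    return context


client = strict_client_context()
print(client.minimum_version)                 # TLSVersion.TLSv1_2
print(client.verify_mode == ssl.CERT_REQUIRED)  # True
```

Setting `verify_mode` to `CERT_REQUIRED` on the server side is what turns ordinary TLS into mutual TLS: the handshake fails unless the client proves its identity too.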

The Kubernetes Ingress snippet below (written for the ingress-nginx controller) enforces mTLS by requiring clients to present a valid certificate from a trusted CA (client-ca) before they can access the service.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress-mtls
  annotations:
    nginx.ingress.kubernetes.io/auth-tls-secret: "prod/client-ca"  # namespace/name of the client CA secret
    nginx.ingress.kubernetes.io/auth-tls-verify-client: "on"       # require a client certificate
spec:
  ingressClassName: nginx  # replaces the deprecated kubernetes.io/ingress.class annotation
  tls:
    - hosts:
        - secure.example.com
      secretName: secure-app-tls
  rules:
    - host: secure.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app
                port:
                  number: 80
```

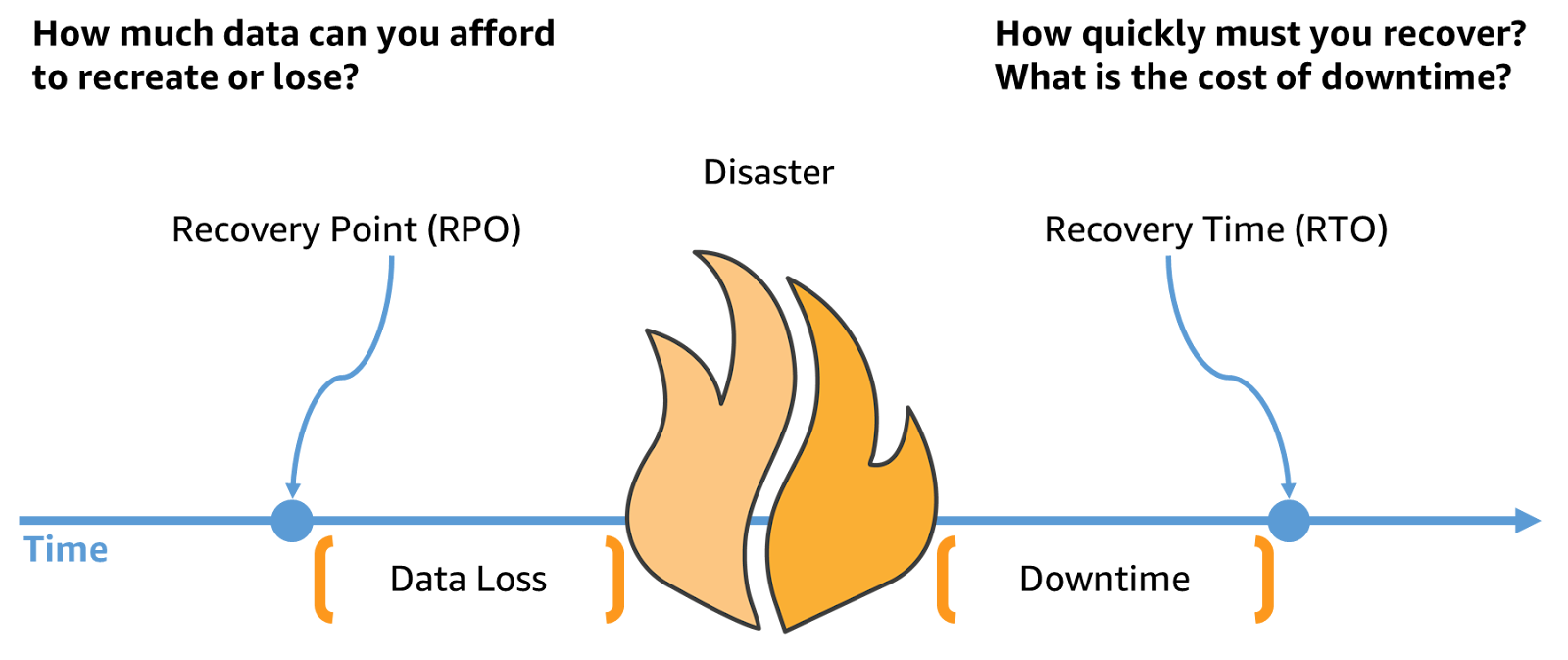

9. Back Up Data and Test Recovery

There are primarily two golden rules for taking a backup of data: the 3-2-1 rule and the 3-2-1-1-0 rule.

3-2-1 recommends:

- 3 = Maintain Three Copies of Your Data

- 2 = Use Two Different Types of Storage Media

- 1 = Store One Copy Offsite

3-2-1-1-0 builds on 3-2-1 to protect against modern threats and recommends you:

- 3 = Maintain Three Copies of Your Data

- 2 = Use Two Different Types of Storage Media

- 1 = Store One Copy Offsite

- 1 = Store One Copy Offline or Immutably

- 0 = Ensure Zero Backup Errors

The main question is not if you take backups, but whether you can recover with those backups. Many teams assume backups are safe until disaster strikes, only to discover corrupted files, missing data, or recovery processes that take days instead of hours.

Testing backups may feel unnecessary when everything’s running smoothly, but outages don’t happen on schedule.

Imagine the difference in your heart rate during a major outage when you are restoring from a tested backup versus an untested one.

Best practices to adopt:

- Encrypt backups at rest and in transit.

- Run regular recovery drills to establish and validate recovery point objectives (RPO) and recovery time objectives (RTO).

- Document recovery procedures so they can be executed under pressure.

- Rotate and clean up old backups to reduce attack surface and cost.
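One small, automatable piece of a recovery drill is checking that what you restored is what you backed up. The sketch below compares SHA-256 digests of an original file and its restored copy; the function names are hypothetical, and a real drill would also time the restore against your RTO.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large backups do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def restore_matches(original: Path, restored: Path) -> bool:
    """True when the restored file is byte-for-byte identical to the original."""
    return sha256_of(original) == sha256_of(restored)
```

In practice you would usually store the digest at backup time and compare against it later, since the original may no longer exist when you need the restore.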

You are good to go!!

10. Classify and Label Sensitive Data

You can’t protect what you don’t know you have. In most cloud environments, sensitive data is spread across S3 buckets, databases, message queues, and even logs. Without proper classification, it’s impossible to apply the right controls.

By tagging and labeling data based on sensitivity—public, internal, confidential, restricted—you create visibility and enforce guardrails that scale.

Many cloud providers support built-in classification tools (e.g., AWS Macie, Azure Information Protection, GCP DLP). Once data is labeled, you can enforce encryption, access restrictions, and monitoring automatically.

Best practices to adopt:

- Scan storage buckets and databases for PII, credentials, and financial data.

- Apply metadata tags or labels (e.g., sensitivity=confidential) to trigger policies.

- Automate classification with cloud-native tools or third-party scanners.

- Restrict access to “restricted” data classes to only approved roles.
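To give a feel for automated classification, here is a deliberately simplified scanner. The two regular expressions, the label names, and the `internal` default are assumptions for this example; managed services use far more robust detection than pattern matching on card-like digit runs and email addresses.

```python
import re

# Simplified, illustrative patterns; not production-grade PII detection
PATTERNS = {
    "restricted": re.compile(r"\b(?:\d[ -]?){13,16}\b"),          # card-like digit runs
    "confidential": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # email addresses
}


def classify(text: str) -> str:
    """Return the most sensitive label whose pattern matches, else 'internal'."""
    for label in ("restricted", "confidential"):
        if PATTERNS[label].search(text):
            return label
    return "internal"


print(classify("card 4111 1111 1111 1111 on file"))  # restricted
print(classify("contact alice@example.com"))         # confidential
print(classify("release notes v2"))                  # internal
```

The returned label could then be written as a metadata tag (for example, `sensitivity=confidential`) so that downstream policies can act on it.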

11. Apply Tokenization and Anonymization

Sometimes protecting data means transforming it so it’s useless if leaked. Tokenization replaces sensitive fields (like credit card numbers) with non-sensitive placeholders, while anonymization removes identifying information from datasets altogether. Both approaches reduce exposure without halting business workflows.

These techniques are especially critical in environments where developers, analysts, or third parties need access to datasets without seeing the raw sensitive values. Done right, tokenization and anonymization let you balance security with usability.

Best practices to adopt:

- Tokenize payment details before storing them. You can use a PCI-compliant vault.

- Apply anonymization for analytics datasets by masking PII (e.g., names, emails).

- Use format-preserving tokenization so systems still validate data formats.

- Automate transformations at ingestion points to ensure raw data never enters logs or non-secure systems.
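The sketch below shows the idea behind tokenization and masking. `TokenVault` is a hypothetical in-memory stand-in (a real vault is a hardened, audited service), it assumes card numbers are passed as digit-only strings, and the token preserves length and the last four digits but not checksum validity.

```python
import secrets


class TokenVault:
    """In-memory stand-in for a tokenization vault, for illustration only."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, card_number: str) -> str:
        """Swap all but the last four digits for random digits, keeping length and format."""
        while True:
            random_part = "".join(secrets.choice("0123456789") for _ in card_number[:-4])
            token = random_part + card_number[-4:]
            # Retry on the rare collision with the real number or an existing token
            if token != card_number and token not in self._token_to_value:
                self._token_to_value[token] = card_number
                return token

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


vault = TokenVault()
token = vault.tokenize("4111111111111111")
print(token[-4:])                       # 1111
print(mask_email("alice@example.com"))  # a***@example.com
```

Only the vault can map a token back to the original value, so systems that merely need a stable reference never handle the raw card number.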

Cloud Security Network & Infrastructure Best Practices

To build secure and reliable systems, organizations must strengthen the foundation of their cloud environments. This means adopting best practices for network design, connectivity, and infrastructure management.

This is how you’ll do it:

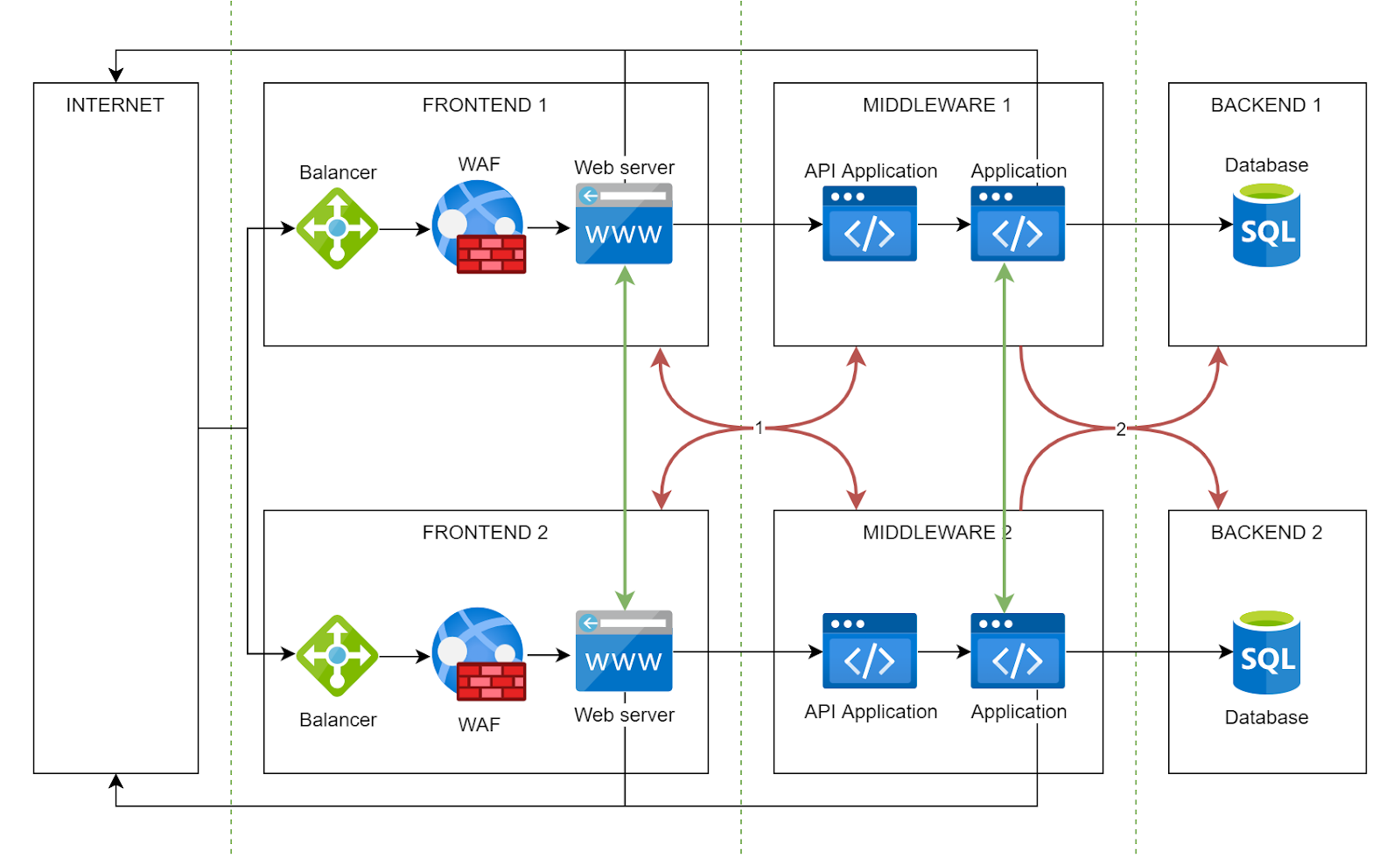

12. Implement Network Segmentation

Flat networks are fragile. If every resource in your cloud sits on the same network, a compromise in one resource will give attackers free rein across your cloud. This is why you should implement network segmentation for your cloud security strategy.

Best practices to adopt:

The goal is microsegmentation. Isolating workloads based on their sensitivity and function enforces zero-trust, where every connection is treated as a potential threat. You can achieve this using tools like network security groups, private VLANs, and internal firewalls.

In the above network segmentation illustration, there is internet access in the FRONTEND and MIDDLEWARE segments, but access between the FRONTEND and MIDDLEWARE segments of different information systems is prohibited.

This foundational layer ensures that even if a misconfiguration or vulnerability is exploited in MIDDLEWARE 1, the blast radius is minimal, protecting the other MIDDLEWARE segments.

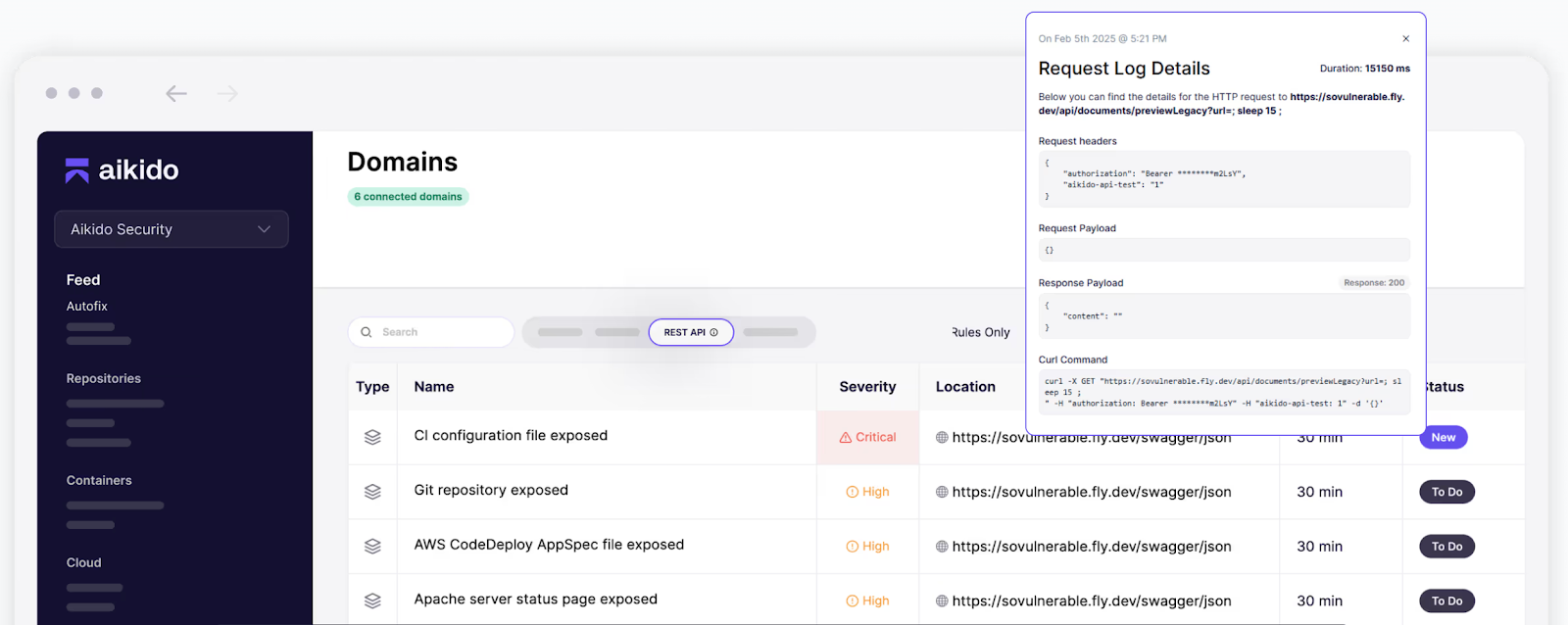

13. Secure API Endpoints

APIs are the nervous system of cloud applications. And the fastest way to kill an organism (applications in this case) is to attack its nervous system.

Your first priority is to ensure that all APIs in your environment have proper authentication and authorization mechanisms, so threat actors can't exploit them to gain unauthorized access or disrupt services.

Best practices to adopt:

- Put all external APIs behind a gateway (Kong/Apigee/AWS API Gateway) with per-route policies.

- Require OAuth2/OIDC; issue short-lived, least-privilege scopes (no wildcard claims).

- Apply rate limits/quotas per API key/client and stricter limits on high-cost routes.

- Enable TLS 1.2+ everywhere; use mTLS for internal microservice calls.

- Auto-discover and tag APIs; block or decommission undocumented and deprecated versions.

- Ship API logs to your SIEM; alert on 401/403 spikes, unusual data pulls, or enumeration patterns.

- And don’t forget to consistently scan your APIs for vulnerabilities and flaws.
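Rate limiting is usually configured in the gateway rather than hand-written, but the underlying idea is easy to show. Below is a minimal token-bucket sketch, one common way to implement per-client limits; the class and its parameters are hypothetical, and the caller supplies the clock (for example `time.monotonic()`) to keep the logic easy to test.

```python
class TokenBucket:
    """Allow `capacity` requests in a burst, refilled at `rate` tokens per second."""

    def __init__(self, capacity: int, rate: float, now: float = 0.0) -> None:
        self.capacity = capacity
        self.rate = rate
        self.tokens = float(capacity)
        self.updated = now

    def allow(self, now: float) -> bool:
        # Refill for the time elapsed since the last call, capped at capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


bucket = TokenBucket(capacity=2, rate=1.0)
print([bucket.allow(0.0) for _ in range(3)])  # [True, True, False]
print(bucket.allow(1.0))                      # True
```

Keeping one bucket per API key or client, with a smaller capacity on high-cost routes, gives you the stricter limits described in the list above.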

As mentioned, API security is core to your cloud security strategy. For more guidance and hands-on tutorials, check out our other blog posts:

- API Security Testing: Tools, Checklists & Assessments

- API Security Best Practices & Standards

- Top API Scanners in 2025

- The Future of API Security: Trends, AI & Automation

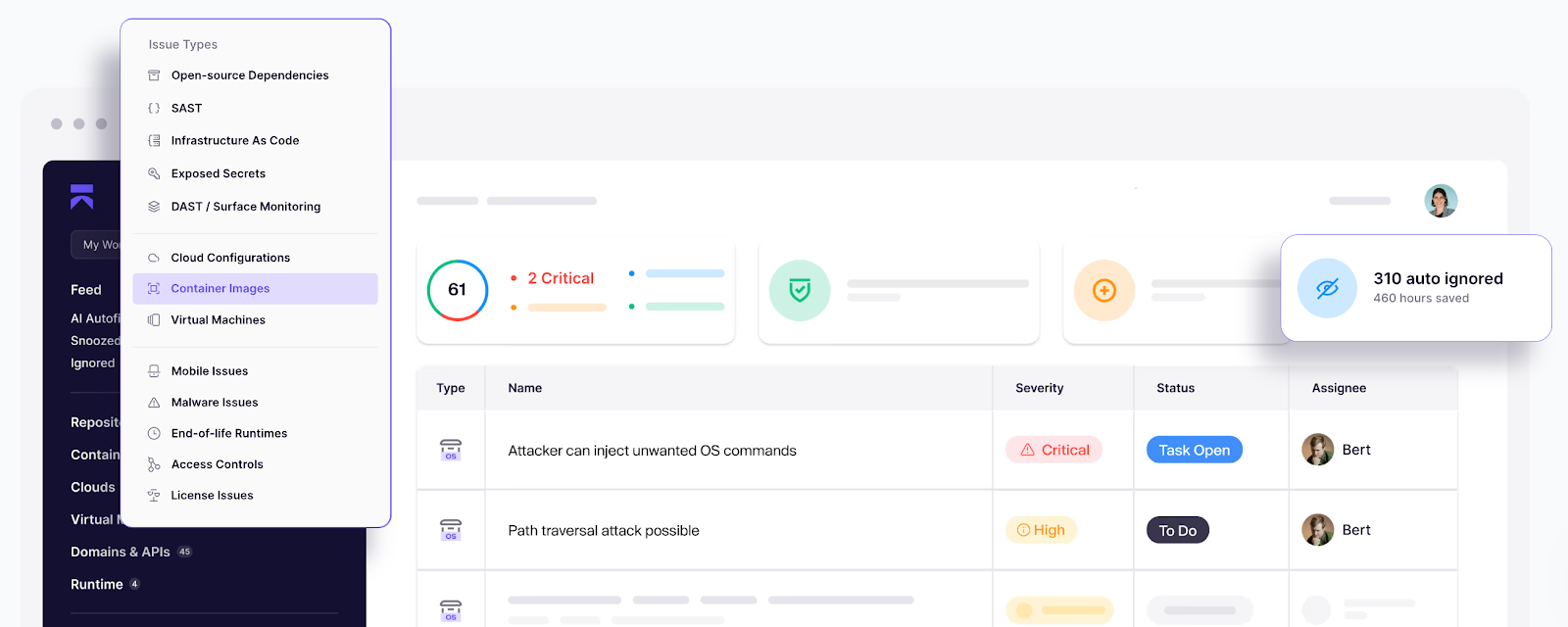

14. Secure Containers

Every organization wants the benefits of going cloud-native. But are they ready for its challenges too? Are you ready?

Containers are the backbone of cloud-native infrastructure, but they also bring unique risks. Misconfigurations, vulnerable base images, and excessive privileges can all open the door to attackers.

The good thing is that most container security risks and issues can be tackled by following the recommended best practices.

Best practices to adopt:

- Always use minimal, verified base images (e.g., distroless, Alpine) from trusted sources. Use specific image versions.

- Drop unnecessary Linux capabilities (CAP_SYS_ADMIN is almost always a red flag).

- Never run containers as root; if, in an edge case, a container requires root access, re-map the container UID to a less-privileged user on the host.

- Monitor runtime activity to detect and respond to abnormal behavior in real-time.

- Automatically scan images and their repositories to find and fix vulnerabilities in the open-source packages used in your base images and Dockerfiles.

If you use Kubernetes to orchestrate your containers, you can configure admission controllers to intercept requests to the API server (e.g., a deployment) and validate that certain security conditions are met before the workload is admitted.
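The kind of validation an admission controller performs can be sketched as a plain function over a pod spec. This is not a working webhook: `pod_violations` is a hypothetical helper, and it only looks at container-level `securityContext` fields (privileged mode, `runAsNonRoot`, and an added `SYS_ADMIN` capability), ignoring pod-level settings.

```python
def pod_violations(pod_spec: dict) -> list[str]:
    """Return reasons to reject a pod spec; an empty list means it passes these checks."""
    violations = []
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext", {})
        if security.get("privileged"):
            violations.append(f"{name}: privileged mode")
        if not security.get("runAsNonRoot"):
            violations.append(f"{name}: runAsNonRoot not set")
        # Kubernetes capability names omit the CAP_ prefix
        if "SYS_ADMIN" in security.get("capabilities", {}).get("add", []):
            violations.append(f"{name}: adds SYS_ADMIN capability")
    return violations


hardened = {
    "containers": [
        {"name": "app", "securityContext": {"runAsNonRoot": True, "capabilities": {"drop": ["ALL"]}}}
    ]
}
risky = {
    "containers": [
        {"name": "debug", "securityContext": {"privileged": True, "capabilities": {"add": ["SYS_ADMIN"]}}}
    ]
}
print(pod_violations(hardened))  # []
print(pod_violations(risky))
```

In a cluster, policy engines such as OPA Gatekeeper or Kyverno express the same rules declaratively and reject the request with the violation messages.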

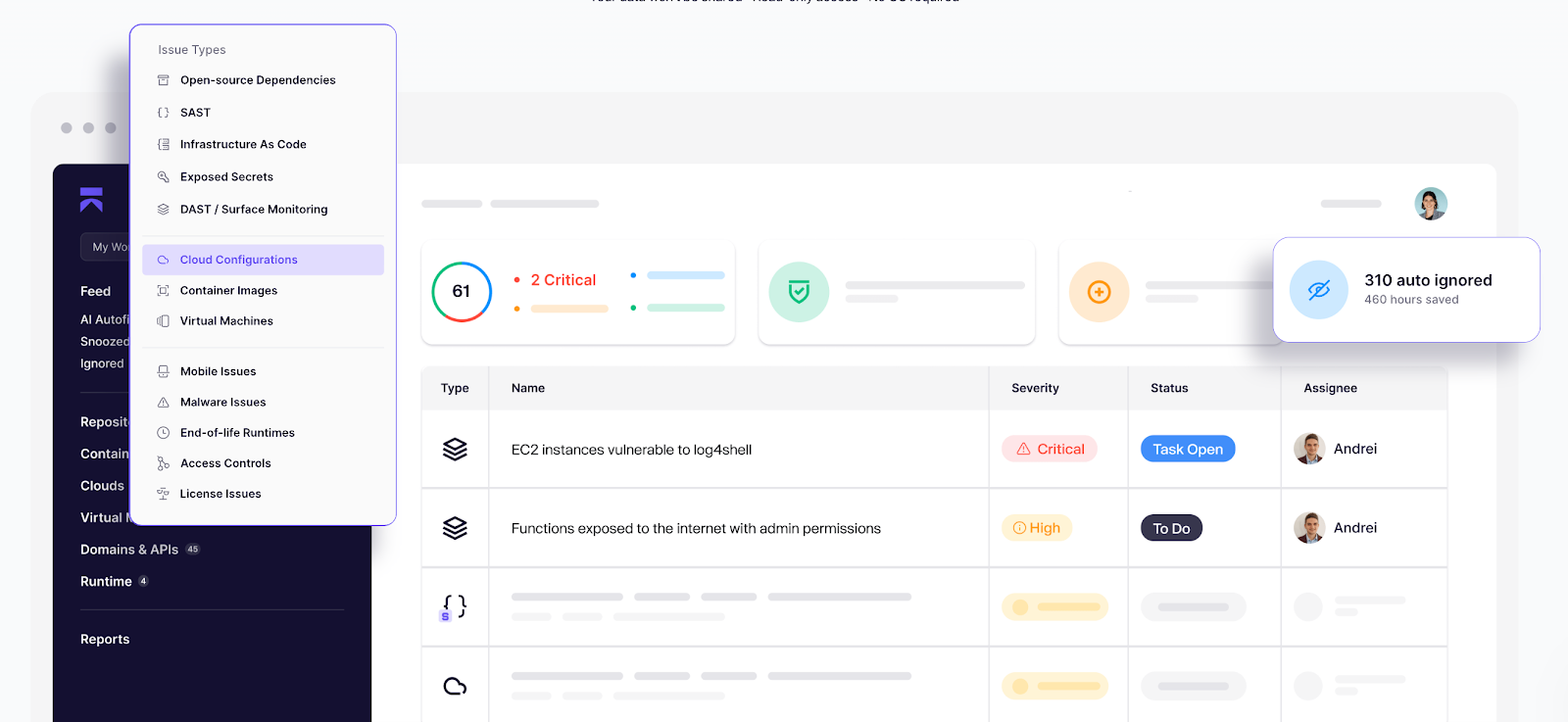

15. Adopt Secure Configuration Baselines

Default settings are built for convenience, not safety. To keep your infrastructure safe, lock down the OS, container runtime, and cloud services with opinionated baselines so you’re not reinventing hardening every sprint.

Best practices to adopt:

- Start from CIS Benchmarks (specific OS, Kubernetes, Docker, cloud providers) and treat them like code: versioned, reviewed, enforced via Infrastructure as Code (IaC).

- Enforce configs with policy-as-code as highlighted earlier (OPA, Kyverno, HashiCorp Sentinel, Azure/AWS/GCP Policy).

- Turn on secure defaults: SSH hardening, auditd, kernel params, no-root containers, read-only filesystems.

With all the above set, continuously detect misconfigs, exposures, and policy violations across all your clouds and fix them fast.

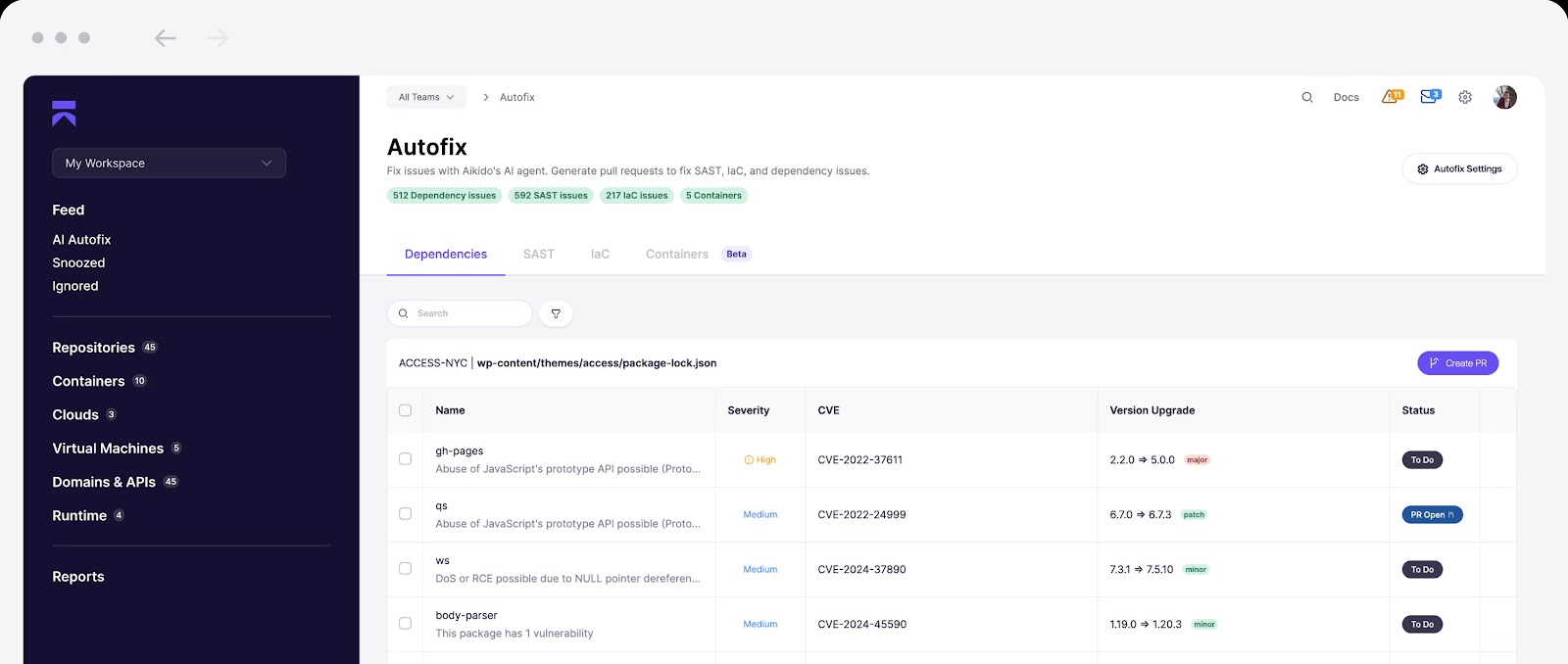

16. Regularly Apply Software Updates and Security Patches

Let’s face it: unpatched = vulnerable.

The general recommendation is for you to make sure every resource in your infrastructure is updated to a stable version and patched.

But how can you do that at scale with hundreds of services and tools?

This is where AI, with a human in the loop, shines: it helps you gain visibility into your infrastructure and auto-fix issues.

Best practices to adopt:

- Generate and track SBOMs with tools that can auto-create tickets for issues that require your intervention.

- Subscribe to CVE feeds that are verified by security researchers to stay up to date on the latest supply chain threats.

- Rebuild images weekly from patched base images; pin digests, not tags.

- Use maintenance windows + canary rollouts; measure error rates and roll back fast.

- Keep managed services on supported engine versions (DBs, runtimes, gateways).
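The "pin digests, not tags" advice is easy to check mechanically. Here is a small sketch, with a hypothetical `is_pinned` helper, that accepts only image references ending in a SHA-256 digest; the sample digest is a placeholder, not a real image.

```python
import re

DIGEST_SUFFIX = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned(image_ref: str) -> bool:
    """True when the image reference ends in an immutable sha256 digest."""
    return bool(DIGEST_SUFFIX.search(image_ref))


placeholder_digest = "a" * 64  # stands in for a real digest
print(is_pinned(f"nginx@sha256:{placeholder_digest}"))  # True
print(is_pinned("nginx:1.27"))                          # False
```

A tag can be moved to point at a different image at any time, while a digest identifies exactly one image, which is why the check rejects tag-only references.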

17. Use Web Application Firewalls (WAFs) and DDoS Protection

WAFs filter the junk, and DDoS shields keep you online when traffic turns hostile. Put them in front of APIs/apps to block SQLi/XSS and throttle L7 floods, then pair with rate limits and bot controls for the gray-area abuse that slips past signatures.

If you recall the network segmentation illustration above, there is a WAF between the frontend load balancer and the web server.

Best practices to adopt:

- Deploy a WAF (Aikido Zen/AWS WAF/Azure WAF/Cloud Armor) with a tuned rule set (OWASP CRS + custom).

- Turn on DDoS protection with automatic mitigation.

- Inspect JSON bodies (API mode), validate schemas, and log all blocks to your SIEM.

- Run chaos drills to simulate spikes and confirm that autoscaling and WAF/DDoS policies are effective.

Cloud Security Threat Detection & Monitoring Best Practices

Detecting and responding to threats quickly is essential for reducing the impact of security incidents in the cloud, which makes proactive monitoring and detection best practices a critical part of cloud security.

18. Implement Advanced Monitoring and Logging Tools

Logs are your early warning system. Only if you actually centralize and analyze them, though.

In the cloud, events scatter across services: API calls, VM activity, Kubernetes audit logs, network flow data.

Pull them together into a SIEM or data lake, then build dashboards with open source visualization tools like Grafana and alerts so nothing gets ignored.

Best practices to adopt:

- Enable cloud-native logging and threat detection with services like AWS CloudTrail, GuardDuty, VPC Flow Logs, Azure Monitor, and GCP Cloud Audit Logs.

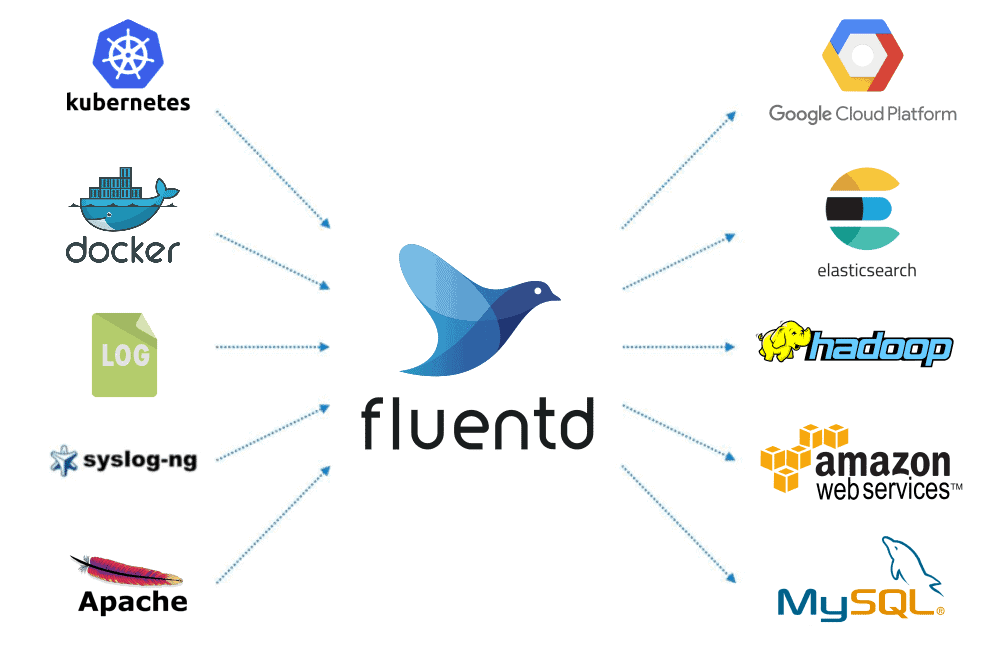

- Stream logs into a centralized location for correlation. You can and should use a data collector to build a unified logging layer. One of the most robust is Fluentd, which is open source and has 500+ plugins for connecting data sources and outputs while keeping its core simple.

- With that done, define alerts for high-risk actions (IAM changes, new public buckets, privilege escalations).

- Set log retention policies that meet compliance and forensic needs.
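As a sketch of what "define alerts for high-risk actions" can look like, the function below filters CloudTrail-style records by event name. The `HIGH_RISK_EVENTS` set is a small illustrative selection chosen for this example, not a complete or recommended list, and the sample records are invented.

```python
# Illustrative subset of CloudTrail event names worth alerting on
HIGH_RISK_EVENTS = {
    "AttachUserPolicy",  # IAM permission change
    "CreateAccessKey",   # new long-lived credential
    "PutBucketAcl",      # may expose a bucket publicly
    "PutBucketPolicy",   # may expose a bucket publicly
}


def high_risk_alerts(records: list[dict]) -> list[str]:
    """Return a short alert line for each record whose eventName is in the watch list."""
    alerts = []
    for record in records:
        event = record.get("eventName")
        if event in HIGH_RISK_EVENTS:
            actor = record.get("userIdentity", {}).get("arn", "unknown")
            alerts.append(f"{event} by {actor}")
    return alerts


# Invented sample records using CloudTrail's eventName and userIdentity.arn fields
sample = [
    {"eventName": "GetObject", "userIdentity": {"arn": "arn:aws:iam::111122223333:user/reader"}},
    {"eventName": "PutBucketAcl", "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev"}},
]
print(high_risk_alerts(sample))  # ['PutBucketAcl by arn:aws:iam::111122223333:user/dev']
```

In a real pipeline this logic usually lives in SIEM rules or a managed detection service, but the principle is the same: a short, explicit watch list beats paging on every event.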

19. Use a Dependency Graph for Vulnerability Assessments

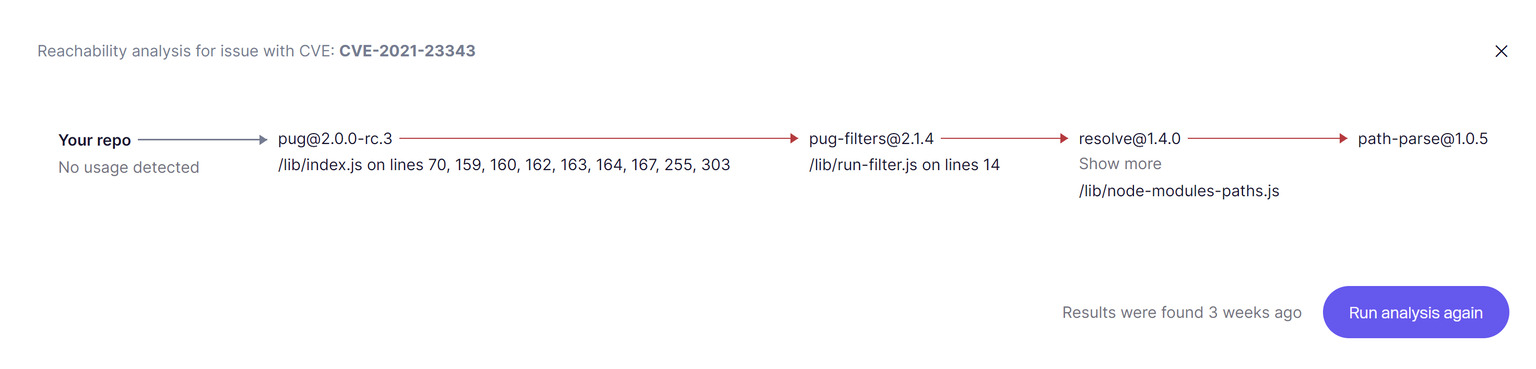

Since the beginning of the guide, we’ve mentioned automatically finding vulnerabilities. It is also important to know that not all vulnerabilities are worth fixing. What matters is knowing which ones actually pose a risk in your deployed environment.

That’s where a dependency graph becomes essential. Without it, you’re blind, drowning in CVEs that don’t matter, while missing the ones that do.

Best practices to adopt:

- Use a platform that offers reachability analysis to eliminate false alarms and flag only exploitable vulnerabilities.

- Ignore dev/test-only dependencies; focus on what ships to prod.

- Prioritize CVEs that are both reachable and internet-exposed.

- Correlate vulnerabilities across code, containers, and cloud configurations.
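A dependency graph makes the "which CVEs matter" question a graph traversal. The sketch below works at package level only: it walks from production entry points and keeps the vulnerable packages it can actually reach. Commercial reachability analysis goes deeper (down to function calls); the package names and graph here are invented.

```python
from collections import deque


def reachable_vulnerabilities(
    graph: dict[str, list[str]], entry_points: list[str], vulnerable: set[str]
) -> set[str]:
    """Breadth-first walk from the entry points; return vulnerable packages that are reached."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        package = queue.popleft()
        for dependency in graph.get(package, []):
            if dependency not in seen:
                seen.add(dependency)
                queue.append(dependency)
    return seen & vulnerable


# Invented graph: web-app ships to prod, test-runner is dev-only
dependencies = {
    "web-app": ["http-lib", "json-lib"],
    "http-lib": ["tls-lib"],
    "test-runner": ["mock-lib"],
}
flagged = {"tls-lib", "mock-lib"}
print(reachable_vulnerabilities(dependencies, ["web-app"], flagged))  # {'tls-lib'}
```

Here `mock-lib` has a known vulnerability but is only pulled in by the test runner, so it drops out of the production list, which is the prioritization the bullets above describe.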

20. Beware of Vibe Coding

Vibe coding is the shiny new thing.

Designers, marketers, sales folks, or anyone can now spin up apps or features without writing much code themselves. This often means without testing, reviewing, or considering security. It’s fast, frictionless, and feels magical. But magic without guardrails tends to burn.

To “vibe code” more safely, the best practices to adopt are:

- Treat AI-generated or non-engineer shipped code like a junior developer wrote it: always code review. Get “eyes” on it.

- Don’t roll your own auth, input validation, or secrets management; use well-reviewed, audited libraries or services.

- Keep secrets out of frontend and repos; use secure storage and environment management.

- Make sure you automate scans (dependency, SAST, DAST) against vibe-coded apps before deploying. Let pipelines catch low-hanging fruit.

Want more practical steps? Read our Vibe Coders’ Security Checklist.

21. Continuous Pentesting in CI/CD

Continuous pentesting flips periodic security checks on their head, embedding automated tests into your build and deployment pipeline so vulnerabilities get caught before they reach production. It’s about speed, context, and cleaner feedback loops.

Best practices to adopt:

- Set up SAST and secrets scanning on every pull request.

- Run IaC scans in your CI (Terraform, CloudFormation, and Helm) before deployment.

- Fail builds for high/critical severity vulnerabilities; flag medium findings in the dashboard for backlog.

- Have a team or individual “owner” for each class of finding (code, infra, cloud) with documented SLAs.

- Conduct regular “pentest retrospectives” to review findings, false positives, and tune tooling.
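The severity gate described above can be sketched as a small function a pipeline step might call on scanner output. The finding format (a dictionary with `id` and `severity` keys) is an assumption for this example; real scanners each have their own report schema.

```python
BLOCKING_SEVERITIES = {"critical", "high"}


def gate(findings: list[dict]) -> tuple[bool, list[dict]]:
    """Return (build_passes, backlog): block on high/critical, queue medium findings."""
    blocking = [f for f in findings if f["severity"].lower() in BLOCKING_SEVERITIES]
    backlog = [f for f in findings if f["severity"].lower() == "medium"]
    return not blocking, backlog


# Invented findings in an assumed format
report = [
    {"id": "F-1", "severity": "HIGH"},
    {"id": "F-2", "severity": "medium"},
    {"id": "F-3", "severity": "low"},
]
passes, backlog = gate(report)
print(passes)                      # False
print([f["id"] for f in backlog])  # ['F-2']
```

A CI job would exit non-zero when `passes` is false and push the backlog entries to the dashboard or ticketing system.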

Cloud Security Operational & Resilience Best Practices

Cloud security is not just about prevention; it also requires preparing for disruptions and ensuring business continuity, which is why operational excellence and resilience practices are key.

22. Establishing Incident Response Plans

You know the cliché “If you fail to plan, you plan to fail”? Well, the same is true in the world of security.

You see, it's not about whether incidents will occur, because they will occur; it's about how you respond when they happen and what you do after the fact.

What makes a solid incident response plan?

- You need to draw clear lines for what counts as an incident (data breach, service outage due to malware, etc.). Without that, there’s always confusion.

- Name who does what, be it developers, tech leads, communications, or legal. Also, include backup contacts.

- Define your communication channels, both internal and external. Who needs to know? When? And how?

- Lay out a step-by-step flow: detection → assessment → containment → eradication → recovery → lessons learned. Include criteria for how bad an incident must be before it’s escalated (severity tiers).

- Spell out how to preserve evidence: which logs to retain, which systems to quarantine, and when to bring in external help.

- Conduct tabletop or simulation drills at least annually to walk through the plan and identify any gaps.

23. Enforce a Zero Trust Security Model

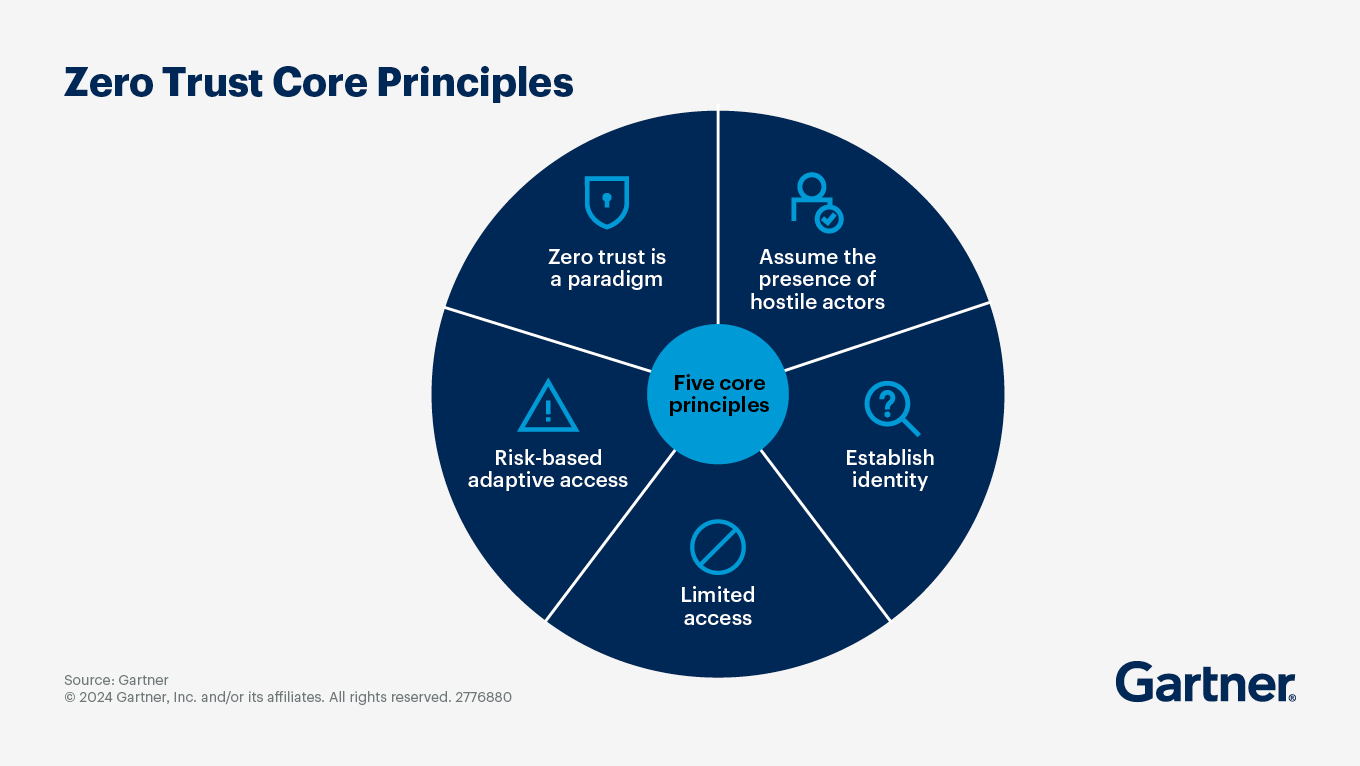

So far, we’ve mentioned zero-trust a few times in this guide. Zero-trust isn’t a product you buy; it’s a mindset: never trust, always verify. In the cloud, where networks are flat and identities are the new perimeter, this model matters more than ever.

Instead of assuming users or services inside your environment are safe, zero-trust forces every request, human or machine, to prove itself. That means strong authentication, least-privilege authorization, encrypted connections, and continuous validation. If an attacker does slip in, zero-trust stops them from moving laterally.

Best practices to adopt:

- Require MFA and strong identity checks for every user and workload.

- Enforce least privilege with granular RBAC/ABAC policies.

- Use network micro-segmentation and service identity, such as SPIFFE/SPIRE, to verify machine-to-machine traffic.

- Encrypt all traffic with TLS/mTLS, even inside “trusted” VPCs or clusters.

- Continuously monitor behavior and revoke sessions if anomalies appear.

24. Leverage Cloud Access Security Brokers (CASBs)

The average organization uses a ton of SaaS apps, usually for good reason. When you are trying to move quickly, you do not want to spend good engineering hours reinventing the wheel. The challenge is that many of them are adopted without security oversight (shadow IT), creating blind spots.

A Cloud Access Security Broker (CASB) gives you a central checkpoint: visibility into which apps are being used, what data flows through them, and whether usage complies with policy.

CASBs enforce controls across SaaS environments. Measures include preventing sensitive data from being uploaded to personal drives, requiring encryption for file sharing, and blocking logins from risky locations. They act as a security “glue” between your users, SaaS apps, and existing IAM and DLP policies.

Best practices to adopt:

- Deploy a CASB in proxy or API mode to monitor SaaS usage across your org.

- Identify shadow IT by discovering unauthorized apps and blocking risky ones.

- Enforce DLP policies that prevent sensitive data from leaving sanctioned apps.

- Require context-aware access (device health, geolocation, risk score) before granting SaaS access.

- Integrate CASBs with your SIEM/SOAR for incident detection and automated response.

25. Develop a Strong Culture of Security Awareness

All these best practices we covered in this article will be futile if the humans who are to implement and live by them neglect them. The saying “a chain is only as strong as its weakest link” extends to the individuals in your organization as well.

You might not want to bore your team with mandatory seminars that eat into time better spent on your goals. Still, you need to strike a balance: keep assessing your security posture, and make sure your teams understand the impact of being security-conscious.

Best practices to adopt:

- Run ongoing training and phishing simulations, and reward secure behavior.

- Make security part of code reviews; don't just check for bugs, but also for hardcoded secrets, overprivileged API calls, and exposed endpoints.

- Gamify security awareness by incorporating leaderboards for those who catch the most vulnerabilities and rewards for reporting security issues.

- Hold blameless postmortems for security incidents. If people get fired for honest mistakes, they'll just hide them better next time.

- Run threat modeling sessions to get developers thinking like attackers during the design phase, not after the code ships.

Shift Left, Stay Ahead, Ship With Confidence

With all this said, it is important to understand that security is more of a journey than a destination. A few short years ago, if you had told us at Aikido that “vibe coding” and “security” would appear in the same sentence, you would have gotten some strange looks. However, we adapt and take proactive steps to ensure you don’t end up on the front page for a security breach.

That’s essentially why we built Aikido: to help you shift left, catch misconfigurations early, and keep your developers moving fast without trading off safety.

Your question now might be: So, how do I start?

The answer is, you already have. By reading through these best practices and taking steps toward stronger cloud security. The next step is simple: book a demo with our team and see how Aikido can take the heavy lifting off your shoulders, so you can focus on what matters most: shipping with confidence!

Read more articles from our series about Cloud Security:

Cloud Security: The complete guide

Cloud Application Security: Securing SaaS and Custom Cloud Apps

Cloud Security Tools & Platforms: The 2025 Comparison