Generative AI and autonomous pentesting are transforming the industry. Unlike legacy 'automated pentesting,' these methods introduce a new way of working: continuously simulating real attack paths with AI agents. They promise to automate tedious tasks, create custom exploits, and simplify technical jargon. But here’s the truth: while AI can supercharge security testing, it’s not a cure-all. Organizations that use security AI and automation extensively save an average of $1.76 million per breach compared with those that don't, according to IBM's 2023 Cost of a Data Breach Report, which shows its real-world value. At the same time, AI-powered cyberattacks are rising, making smart, adaptive testing critical.

Think of AI as a skilled intern who’s read every security blog but still needs guidance. It’s great at spotting patterns and rapid analysis but struggles with creativity and business context.

This guide breaks down where generative AI adds value in penetration testing and where human expertise is still essential. For more details, check out our deep dive on Best AI Pentesting Tools, covering platforms pushing the limits of automated security.

TL;DR

Generative AI accelerates routine pentesting tasks like vulnerability analysis, payload creation, and report generation, making security assessments faster and more scalable. However, it struggles with complex business logic, creative attack chains, and nuanced risk assessment. The sweet spot lies in combining AI automation with human oversight for maximum effectiveness.

The AI Revolution in Security Testing

Imagine having a security analyst who never sleeps, processes thousands of vulnerabilities per minute, and can explain complex technical issues in simple terms. That's essentially what generative AI brings to penetration testing. According to Gartner's predictions, over 75% of enterprise security teams will incorporate AI-driven automation into their workflows by 2026.

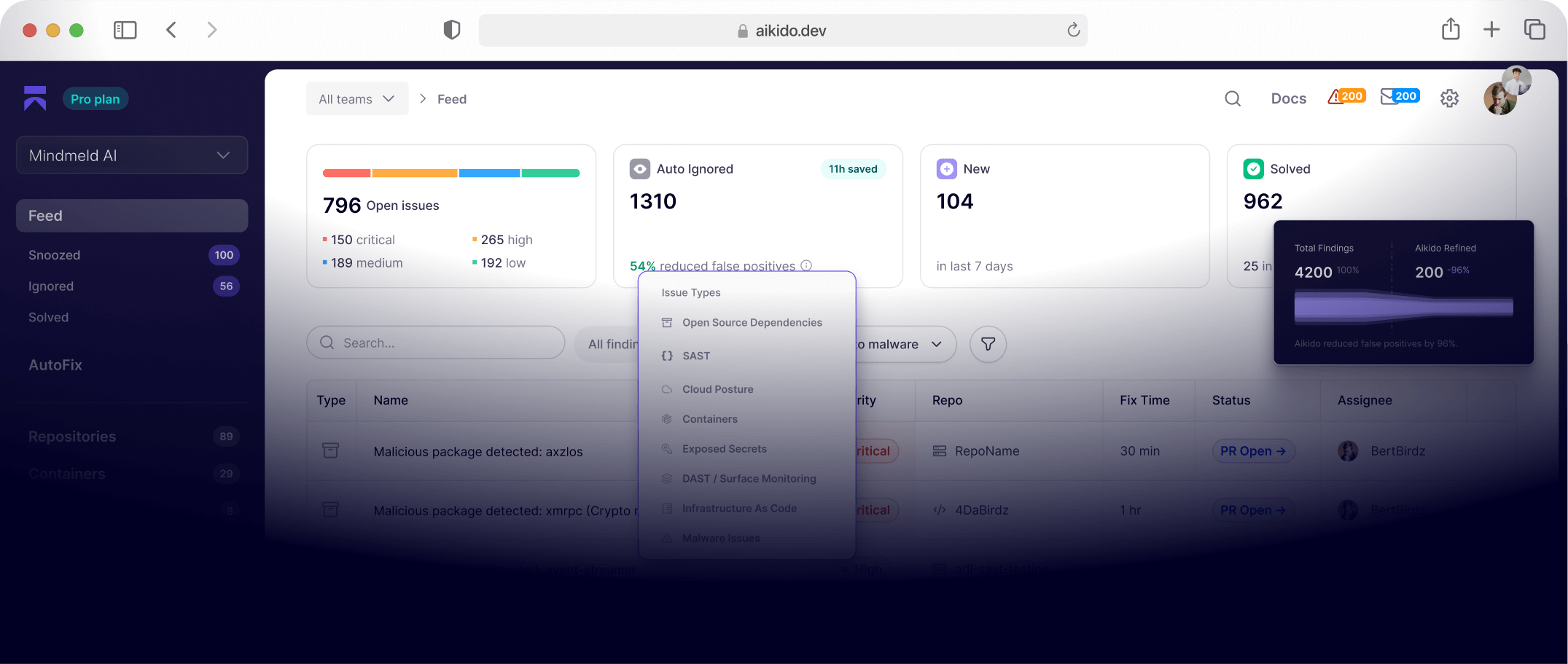

Unlike traditional rule-based scanners that follow predetermined scripts, AI-powered tools adapt and learn. If you want to see how this looks in practice, explore our AI SAST & IaC Autofix features, which harness machine learning for proactive vulnerability remediation. These capabilities can even support continuous pentesting setups, as discussed in Continuous Pentesting in CI/CD.

But speed and adoption don't automatically equal success. You need to understand what AI does well and where it falls short.

Where Generative AI Shines in Pentesting

Smart Vulnerability Analysis

Traditional vulnerability scanners dump hundreds of findings on your desk without context. AI changes the game by analyzing each vulnerability within your specific environment and explaining what actually matters.

Instead of seeing a bare finding like “CVE-YYYY-NNNN: SQL Injection - High Severity,” AI-powered tools provide:

- Business impact explanation: “This SQL injection could expose customer payment data in your e-commerce database”

- Exploitability assessment: “Confirmed exploitable through the /api/login endpoint with current configurations”

- Prioritized remediation steps: “Fix by updating the authentication library to version 2.1.4 or implementing parameterized queries”

This contextual analysis transforms overwhelming vulnerability reports into actionable security roadmaps. Teams report reducing remediation time by up to 60% when using AI-enhanced vulnerability management, supported by Forrester's research on application security automation.

Our Static Code Analysis (SAST) scanner applies this context-driven approach, making it easier to pinpoint the vulnerabilities that actually matter.

Custom Payload Generation

Gone are the days of relying on static payload libraries that defenders easily recognize. Generative AI creates custom attack vectors tailored to your specific target environment.

For web application testing, AI can generate:

- Polymorphic payloads that bypass signature-based detection

- Context-aware injection strings that adapt to different frameworks

- Realistic social engineering content for phishing simulations

- Custom exploit code for newly discovered vulnerabilities

The key advantage? These AI-generated payloads are unique to each test, making them harder for security controls to flag while providing more realistic attack simulations. Automated code generation has seen notable improvements as discussed in IEEE's cybersecurity AI analysis.

If container security is on your agenda, our container image scanning leverages automated analysis, ensuring both speed and relevance in your pentests.

Intelligent Reconnaissance

AI supercharges the information gathering phase by automatically correlating data from multiple sources. It can process social media profiles, GitHub repositories, job postings, and public records to build comprehensive target profiles in minutes rather than hours.
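
The correlation step can be illustrated with a minimal sketch. It assumes each source has already been parsed into simple records; the source names, the record fields (`host`, `ports`), and the inventory list are hypothetical and stand in for whatever your tooling actually produces.

```python
from collections import defaultdict

def correlate_assets(*sources):
    """Merge (source_name, records) pairs into one profile per hostname."""
    profiles = defaultdict(lambda: {"seen_in": set(), "ports": set()})
    for source_name, records in sources:
        for record in records:
            # Normalize case and the trailing dot so the same host
            # reported by different sources lands in one profile.
            host = record["host"].strip().lower().rstrip(".")
            profiles[host]["seen_in"].add(source_name)
            profiles[host]["ports"].update(record.get("ports", []))
    return dict(profiles)

def shadow_candidates(profiles, inventory):
    """Hosts that were discovered but are missing from the known inventory."""
    known = {h.lower() for h in inventory}
    return sorted(h for h in profiles if h not in known)

# Hypothetical inputs from two discovery sources.
dns_records = [{"host": "App.example.com."}, {"host": "staging.example.com"}]
scan_results = [
    {"host": "app.example.com", "ports": [443]},
    {"host": "staging.example.com", "ports": [22, 8080]},
]

profiles = correlate_assets(("dns", dns_records), ("scan", scan_results))
print(shadow_candidates(profiles, inventory=["app.example.com"]))
```

Keying everything on a normalized hostname is the simplest possible join; real pipelines usually also correlate on IP address, certificate, or organization name.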

Advanced reconnaissance features in platforms like Aikido’s surface monitoring help teams swiftly discover shadow IT assets and analyze exposed services, an essential practice given that OSINT-driven breaches are surging.

This automated intelligence gathering frees up human testers to focus on exploitation and attack chain development. For practical applications, you can see how this works in our guide, What Is AI Penetration Testing? A Guide to Autonomous Security Testing.

Report Generation That Actually Communicates

Perhaps AI’s most immediately valuable contribution is transforming how security findings get communicated. Instead of technical reports that gather dust, AI generates multiple report formats tailored to different audiences.

For executives, AI creates:

- Executive summaries focusing on business risk and financial impact

- Compliance mappings showing how findings relate to regulatory requirements

- Risk trend analysis comparing current results to previous assessments

For development teams, AI provides:

- Code-specific remediation guidance with exact line numbers and fixes

- Framework-specific recommendations tailored to your technology stack

- Priority rankings based on actual exploitability and business context
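
As a rough sketch of the idea, both audiences can be served from the same findings data by rendering it two ways. The `Finding` fields, file paths, and sample findings below are made up for illustration and do not reflect any particular tool's report schema.

```python
from dataclasses import dataclass

SEVERITY_ORDER = ["critical", "high", "medium", "low"]

@dataclass
class Finding:
    title: str
    severity: str       # one of SEVERITY_ORDER
    business_risk: str  # plain-language impact for executives
    file: str
    line: int
    fix: str

def executive_summary(findings):
    """Counts per severity plus the business risk of critical/high findings."""
    counts = {}
    for f in findings:
        counts[f.severity] = counts.get(f.severity, 0) + 1
    lines = [f"{counts[s]} {s} finding(s)" for s in SEVERITY_ORDER if s in counts]
    top = [f.business_risk for f in findings if f.severity in ("critical", "high")]
    return "\n".join(lines + ["Top risks:"] + [f"- {risk}" for risk in top])

def developer_report(findings):
    """One line per finding with location and suggested fix."""
    return "\n".join(
        f"{f.file}:{f.line} [{f.severity}] {f.title} -> {f.fix}" for f in findings
    )

# Hypothetical findings.
findings = [
    Finding("SQL injection in login", "high",
            "Customer payment data could be exposed",
            "src/auth/login.py", 42, "Use parameterized queries"),
    Finding("Verbose error page", "low",
            "Minor information leak",
            "src/app/errors.py", 10, "Disable debug output in production"),
]

print(executive_summary(findings))
print(developer_report(findings))
```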

This multi-audience approach ensures security findings actually get addressed instead of ignored. Automated workflows can also be integrated via CI/CD pipeline security for faster, actionable responses.

Where AI Still Struggles

Complex Business Logic Flaws

AI excels at identifying technical vulnerabilities but often misses security issues rooted in business logic. Consider a multi-step approval workflow where an attacker can bypass certain steps by manipulating the application state. This type of vulnerability requires understanding the intended business process, and current AI systems still have blind spots, as highlighted in NSA's application security recommendations.

Real-world examples include:

- Approval bypass vulnerabilities in financial applications

- Race conditions in concurrent transaction processing

- State manipulation attacks in multi-step processes

- Authorization flaws in complex role-based systems
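
To make the approval-bypass case concrete, here is a minimal sketch of a hypothetical multi-step workflow. The state names and transition table are invented for illustration. The point is that the "vulnerability" is only visible once you know which transitions the business intends to allow, which is exactly the knowledge a scanner lacks.

```python
# Intended business process (hypothetical): each state lists the only
# states a request may move to next.
ALLOWED_TRANSITIONS = {
    "draft": {"submitted"},
    "submitted": {"manager_approved", "rejected"},
    "manager_approved": {"finance_approved", "rejected"},
    "finance_approved": {"paid"},
}

class WorkflowError(Exception):
    pass

class Request:
    def __init__(self, state="draft"):
        self.state = state

    def transition(self, new_state):
        # Server-side check. An application that omits it is open to the
        # approval bypass described above.
        if new_state not in ALLOWED_TRANSITIONS.get(self.state, set()):
            raise WorkflowError(f"{self.state} -> {new_state} is not allowed")
        self.state = new_state

def can_jump(from_state, target):
    """Return True if a request in from_state can move directly to target."""
    request = Request(from_state)
    try:
        request.transition(target)
    except WorkflowError:
        return False
    return True

print(can_jump("submitted", "paid"))              # skipping both approvals
print(can_jump("submitted", "manager_approved"))  # the intended next step
```

A tester probing a real application would send the equivalent of the first call and treat a success as a finding.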

For an in-depth look at scenarios where manual intervention is critical, see Manual vs. Automated Pentesting: When Do You Need AI?.

Creative Attack Chain Development

While AI can identify individual vulnerabilities, it struggles with creative attack chaining-combining multiple minor issues into a devastating exploit path.

A skilled penetration tester might combine:

- An information disclosure vulnerability to gather user data

- A timing attack to enumerate valid usernames

- A password reset flaw to gain unauthorized access

- A privilege escalation bug to achieve admin rights
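
One way to picture such a chain is as a set of steps, each with preconditions and the capabilities it grants. The sketch below uses hypothetical capability names and assumes the dependencies between the four findings. Working out those dependencies is the creative part that a human tester contributes; once they are written down, finding the path is mechanical.

```python
def find_chain(findings, start, goal):
    """Forward chaining: keep applying any finding whose preconditions are
    met until the goal capability is reached or nothing new can be applied.
    The result is a valid chain, not necessarily the shortest one."""
    have = set(start)
    chain = []
    progressed = True
    while goal not in have and progressed:
        progressed = False
        for name, (requires, grants) in findings.items():
            if name not in chain and requires <= have and not (grants <= have):
                have |= grants
                chain.append(name)
                progressed = True
    return chain if goal in have else None

# name -> (capabilities required, capabilities granted); all assumed.
findings = {
    "info_disclosure": ({"network_access"}, {"user_data"}),
    "timing_attack": ({"user_data"}, {"valid_usernames"}),
    "password_reset_flaw": ({"valid_usernames"}, {"user_session"}),
    "privilege_escalation": ({"user_session"}, {"admin"}),
}

print(find_chain(findings, start={"network_access"}, goal="admin"))
```

Removing any single link, for example by fixing the password reset flaw, makes `find_chain` return `None`, which is why individually minor issues still deserve attention.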

This kind of logic and creativity is something you’ll see explored in Best Pentesting Tools, where manual and AI-powered methods go head to head.

Environmental Context and Risk Assessment

AI tools often struggle to judge the true risk of a vulnerability within your specific environment. For example, some AI systems may flag a SQL injection as critical even when it only affects a read-only development database. According to Deloitte's AI security report, managing these nuances requires domain expertise.

Effective risk assessment requires understanding:

- Network topology and segmentation

- Data sensitivity and classification

- Existing security controls and their effectiveness

- Business criticality of affected systems
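
As a rough illustration, these factors can be folded into a scanner's base score. The weights below are arbitrary assumptions chosen for the example, not a standard formula, and would need tuning by someone who knows the environment.

```python
# Assumed weights, for illustration only.
SENSITIVITY_WEIGHT = {"public": 0.5, "internal": 0.8, "confidential": 1.0}

def contextual_score(base_score, *, internet_facing, sensitivity,
                     compensating_control, business_critical):
    """Adjust a 0-10 base severity score using environmental context."""
    score = base_score * SENSITIVITY_WEIGHT[sensitivity]
    if not internet_facing:
        score *= 0.7   # segmented networks reduce exposure
    if compensating_control:
        score *= 0.8   # e.g. an effective WAF rule in front of the service
    if business_critical:
        score *= 1.2   # raise priority for critical systems
    return round(min(score, 10.0), 1)

# The same SQL injection finding in two different environments.
dev_db = contextual_score(9.8, internet_facing=False, sensitivity="public",
                          compensating_control=False, business_critical=False)
prod_db = contextual_score(9.8, internet_facing=True, sensitivity="confidential",
                           compensating_control=False, business_critical=True)
print(dev_db, prod_db)
```

The development database lands well below the production one even though the scanner reported the same base severity for both.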

For broader coverage, consider integrating cloud posture management solutions that contextualize risk according to dynamic cloud architectures.

False Positive Management

Despite impressive advances, AI systems still generate false positives that can overwhelm security teams. Common issues include:

- Misidentifying secure code patterns as vulnerabilities

- Generating non-functional exploits that appear valid

- Over-flagging low-risk configurations as critical issues

- Missing context clues that indicate safe implementations

Addressing these requires mature validation frameworks, as highlighted in the SANS Institute’s research on false positives, and consistent human review.
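
One simple form of validation is to track how often human reviewers confirm each detection rule and flag the rules that are mostly noise. The sketch below assumes review verdicts are available as `(rule_id, is_true_positive)` pairs; the rule names and thresholds are hypothetical.

```python
from collections import Counter

def rule_precision(reviews):
    """Share of findings per rule that human triage confirmed as real."""
    confirmed, total = Counter(), Counter()
    for rule_id, is_true_positive in reviews:
        total[rule_id] += 1
        confirmed[rule_id] += int(is_true_positive)
    return {rule: confirmed[rule] / total[rule] for rule in total}

def noisy_rules(reviews, min_precision=0.5, min_samples=3):
    """Rules with enough reviewed findings and a precision below the bar."""
    total = Counter(rule for rule, _ in reviews)
    precision = rule_precision(reviews)
    return sorted(
        rule for rule, p in precision.items()
        if total[rule] >= min_samples and p < min_precision
    )

# Hypothetical verdicts from human review.
reviews = [
    ("sqli-001", True), ("sqli-001", True), ("sqli-001", False),
    ("hardcoded-secret", False), ("hardcoded-secret", False),
    ("hardcoded-secret", False), ("hardcoded-secret", True),
]

print(rule_precision(reviews))
print(noisy_rules(reviews))
```

Rules that show up in `noisy_rules` are candidates for tuning or for mandatory human review before a ticket is opened.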

Practical AI Implementation Strategies

Start with High-Volume, Low-Risk Tasks

Begin your AI adoption journey by automating time-consuming but straightforward tasks:

- Vulnerability scanning of large application portfolios

- Dependency analysis for open-source components

- Configuration reviews across cloud environments

- Initial reconnaissance and asset discovery

If your security needs involve open source dependencies, our open source dependency scanning solution fits seamlessly into this phase, letting you scale automated coverage with confidence.

Maintain Human Oversight for Critical Decisions

Never fully automate security decisions without human validation. Establish clear workflows where AI handles initial analysis and humans make final determinations, especially when chaining vulnerabilities or judging business impact. Strategies for this hybrid approach are further outlined in Best Automated Pentesting Tools.
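
A minimal sketch of such a workflow follows, assuming the AI only orders the review queue and nothing reaches remediation without a named reviewer. The class, the finding IDs, and the priority boost for chained findings are all illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class ReviewQueue:
    pending: dict = field(default_factory=dict)   # finding_id -> AI priority
    approved: dict = field(default_factory=dict)  # finding_id -> reviewer

    def submit(self, finding_id, ai_score, is_chained=False):
        # Chained findings jump the queue because they need the most
        # human judgment. The +10 boost is an arbitrary choice.
        self.pending[finding_id] = ai_score + (10 if is_chained else 0)

    def next_for_review(self):
        """Highest-priority finding still waiting for a human, or None."""
        if not self.pending:
            return None
        return max(self.pending, key=self.pending.get)

    def approve(self, finding_id, reviewer):
        del self.pending[finding_id]  # raises KeyError if it was not pending
        self.approved[finding_id] = reviewer

    def ready_for_remediation(self):
        """Only human-approved findings are returned."""
        return sorted(self.approved)

queue = ReviewQueue()
queue.submit("F-1", ai_score=9.1)
queue.submit("F-2", ai_score=3.0, is_chained=True)
print(queue.next_for_review())
queue.approve("F-2", reviewer="alice")
print(queue.ready_for_remediation())
```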

Choose Tools with Strong Integration Capabilities

The most effective AI pentesting tools integrate seamlessly with existing security workflows. Look for solutions that connect with:

- Ticketing systems for automated vulnerability assignment

- CI/CD pipelines for continuous security testing

- SIEM platforms for centralized logging and correlation

- Communication tools for real-time security alerts

Comprehensive platforms, such as Aikido Security’s ASPM solution, centralize security data and keep automated findings actionable.

The Future of AI-Powered Penetration Testing

The next wave of AI innovation in security testing will likely focus on three key areas:

Predictive Vulnerability Analysis

AI tools may soon be able to predict vulnerabilities before they're introduced by analyzing not just code but also architecture and developer behavior, an evolution that aligns with NIST's proactive security guidelines.

Automated Attack Simulation

Advanced AI will simulate sophisticated multi-stage attacks automatically, testing not just individual vulnerabilities but complex attack scenarios. For developments in automated red teaming, watch for new research from ISACA and leading academic groups.

Adaptive Defense Testing

AI systems will continuously adapt their testing strategies based on defensive responses, creating an ongoing cat-and-mouse game that more accurately reflects real-world threat scenarios.

Building Your AI-Enhanced Security Program

The most successful security programs combine AI efficiency with human expertise strategically. Here’s a practical framework:

Layer 1: AI Foundation

Deploy AI for continuous monitoring, routine scanning, and initial triage across your entire digital estate.

Layer 2: Human Intelligence

Use skilled testers for creative exploitation, business logic testing, and complex risk assessment.

Layer 3: Hybrid Validation

Implement processes where AI findings are validated and prioritized by human experts before remediation.

This layered approach maximizes coverage while maintaining the quality and context that effective security requires.

Making AI Work for Your Security Team

Generative AI represents a powerful force multiplier for penetration testing, but it's not a replacement for human expertise. The organizations seeing the biggest security improvements are those that thoughtfully combine AI automation with skilled human analysts.

The key is understanding exactly what AI can and can't do today, then building processes that leverage its strengths while compensating for its weaknesses. Used correctly, AI doesn't just make penetration testing faster. It makes it smarter, more comprehensive, and ultimately more effective at protecting your organization.

Start small, validate carefully, and scale strategically. The future of security testing isn't AI versus humans. It's AI empowering humans to be more effective than ever before.

For further exploration on autonomous approaches to penetration testing, see our guide on What Is AI Penetration Testing? and explore continuous innovation in Continuous Pentesting in CI/CD.