Crying Wolf in Cybersecurity

The expression ‘crying wolf’ goes back to a fable in which a shepherd boy fooled the other villagers by telling them that a wolf was attacking the flock. The villagers believed him at first, but he was only laughing at them. When the shepherd boy repeated his joke, the villagers started to ignore him, and at some point a real wolf came and attacked the sheep. The boy ‘cried wolf’, but nobody believed him anymore.

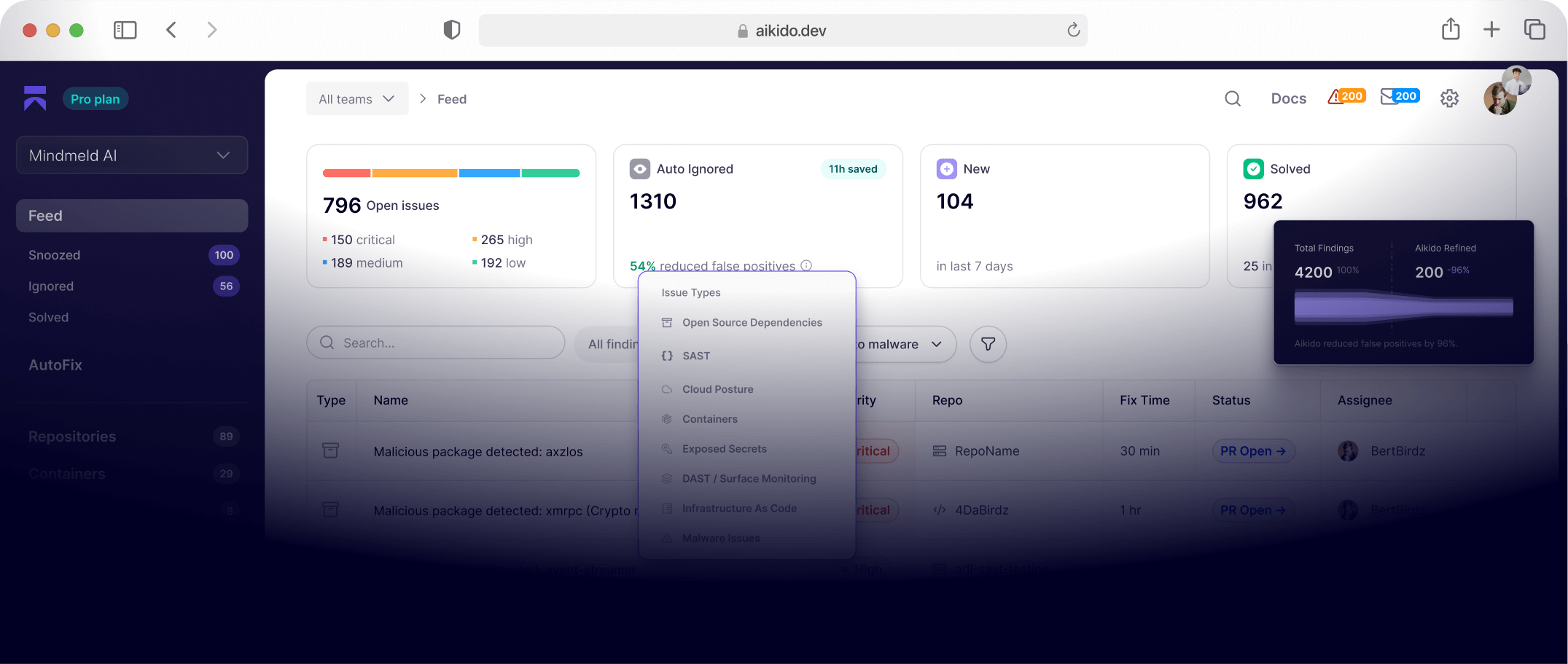

Cybersecurity tools have acted as shepherd boys: they tend to raise a lot of false alarms, which wears developers down until they stop paying attention. Developers lose time and lose trust in the tools. To work efficiently and effectively in cybersecurity, you need a good filter to avoid those false positives. That is exactly what AutoTriage does for SAST vulnerabilities.

True Positive Example

The following is an example of a SAST finding. SAST stands for Static Application Security Testing, loosely translated as: detect dangerous patterns in source code without running the code. It is a powerful method to flag many different kinds of vulnerabilities.

In this example, AutoTriage marks a finding as ‘very high priority to fix’. The SAST finding points to a potential NoSQL injection vulnerability. The code is a login endpoint where users provide a name and a password. It queries the database for a record that matches both the name and the password.

The problem here is that NoSQL queries accept operator objects like { $ne: undefined } in place of plain values. In that case, the query matches anything that is different from undefined. Imagine that an attacker sends something like this:

    {
      name: "LeoIVX",
      password: { $ne: undefined }
    }

In that case, the attacker would be able to log in as the pope (if the pope had an account with that username on that software platform), since the password condition would match any stored password.
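The matching behaviour described above can be sketched without a real database. The snippet below is a simplified, hypothetical stand-in for a NoSQL query matcher (the functions `matchesField` and `findUser` and the stored password are assumptions for illustration, not part of any real driver). It only shows why an operator object in the password field matches where a wrong string does not.

```javascript
// Simplified stand-in for a NoSQL query matcher (hypothetical, not a real driver).
// It supports plain equality and the $ne ("not equal") operator only.
function matchesField(storedValue, condition) {
  const isOperatorObject =
    condition !== null && typeof condition === "object" && "$ne" in condition;
  if (isOperatorObject) {
    return storedValue !== condition.$ne;
  }
  return storedValue === condition;
}

// Returns the first user whose fields satisfy every condition in the query.
function findUser(users, query) {
  return users.find((user) =>
    Object.keys(query).every((field) => matchesField(user[field], query[field]))
  );
}

// Hypothetical stored account; the plain-text password mirrors the simplified example.
const users = [{ name: "LeoIVX", password: "correct-horse" }];

// A wrong password supplied as a string does not match any record.
const honestAttempt = findUser(users, { name: "LeoIVX", password: "guess" });

// An operator object instead of a string matches any stored password.
const injectedAttempt = findUser(users, {
  name: "LeoIVX",
  password: { $ne: undefined },
});

console.log(honestAttempt);   // undefined
console.log(injectedAttempt); // the LeoIVX record
```

The attacker never needs to know the password: the condition "not equal to undefined" holds for every stored value.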

In this case, the SAST finding was a true positive. AutoTriage does more than confirm the finding: it also boosts the priority, since this vulnerability is easier to exploit and has a higher severity than the average SAST finding.

When an issue like this is reported, you should fix it ASAP. There is no faster method than using Aikido’s AutoFix tool. This will create a pull request (or merge request) with one click. In this case the result is:

AutoFix will always suggest the simplest fix that adequately solves the vulnerability. In this case casting both the name and the password suffices to secure the endpoint and align with the developer’s intent.
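As a rough illustration of what such a cast can look like, here is a minimal sketch that assumes the values are cast to strings. The helper name `sanitizeCredentials` and the request body shape are hypothetical and not taken from the actual AutoFix output.

```javascript
// Hypothetical helper: coerce user-supplied login fields to strings
// so that operator objects can no longer reach the database query.
function sanitizeCredentials(body) {
  return {
    name: String(body.name),
    password: String(body.password),
  };
}

// The malicious request body from the example above.
const malicious = { name: "LeoIVX", password: { $ne: undefined } };
const query = sanitizeCredentials(malicious);

// The operator object has been flattened into an ordinary string,
// which is compared literally instead of being interpreted as $ne.
console.log(typeof query.password); // "string"
```

After the cast, the query compares the password against a literal string, so the attacker's object no longer acts as a query operator.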

Please bear in mind that passwords should never be compared directly and password hashes should be used instead; this example was chosen for the sake of simplicity. The LLM used by AutoFix is explicitly instructed not to fix any issues other than the reported vulnerability, so pull requests follow the best practice of solving one problem at a time.

False Positive Example

As previously mentioned, the real problem with SAST tools is the number of false alarms they produce. One example can be found below. There is a potential SQL injection where a ‘productName’ gets inserted into an SQL query. Moreover, this ‘productName’ comes from the request body, so it is user-controlled. Fortunately, there is an allowlist that checks whether productName is either “iPhone15”, “Galaxy S24”, “MacBook Pro” or “ThinkPad X1”. This guarantees that productName cannot contain an attack payload like iPhone15'; DROP TABLE products; --

An allowlist like the one given in this example is an effective countermeasure against SQL injection. But legacy scanners like Semgrep fail to assess the effectiveness of such allowlists.
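The pattern can be sketched as follows. The product names come from the example above; the helper `buildProductQuery`, the table name and the column name are assumptions for illustration, not the code from the original finding.

```javascript
// Allowlist of product names from the example above.
const ALLOWED_PRODUCTS = new Set([
  "iPhone15",
  "Galaxy S24",
  "MacBook Pro",
  "ThinkPad X1",
]);

// Hypothetical helper: builds the query only for allowlisted names.
function buildProductQuery(productName) {
  if (!ALLOWED_PRODUCTS.has(productName)) {
    throw new Error("Unknown product");
  }
  // String interpolation is the pattern a scanner flags as SQL injection.
  // The exact-match check above means only four known strings can reach it.
  return `SELECT * FROM products WHERE name = '${productName}'`;
}

console.log(buildProductQuery("iPhone15"));

try {
  buildProductQuery("iPhone15'; DROP TABLE products; --");
} catch (err) {
  console.log(err.message); // "Unknown product"
}
```

A pattern-based scanner sees user input flowing into a query string and raises an alarm. Deciding that the alarm is false requires reading the check that precedes the query, which is the kind of context the triage step has to take into account.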

Large Language Models (LLMs) provide a big opportunity here: they can understand much more context of source code and filter out samples like this.

Aikido’s “No Bullsh*t Security” Narrative

When software companies look for AppSec providers, they often compare the different solutions available in the market. A typical way for less experienced companies to compare vendors is to count the number of vulnerabilities each one finds in their source code. It will come as no surprise that they tend to believe that more vulnerabilities means better tooling. Sometimes they choose their vendor based on this poor assessment. Consequently, some AppSec companies are hesitant to filter out false positives, since they would score lower in this common comparison.

At Aikido, we take a different approach. Our “No Bullsh*t” narrative means that we want to help customers as much as possible, even when this means a few lost deals in the short run. AI AutoTriage is a clear example of this, since this feature sets Aikido’s offering apart from others in the market.

Availability

We enabled this feature for 91 SAST rules across different languages, including JavaScript/TypeScript, Python, Java, .NET and PHP. More rules are being added at a fast pace.

This feature is enabled for everyone, including free accounts. That said, free accounts may reach the maximum number of LLM calls fairly quickly.

CI Gating

CI gating is the process where Aikido scans for vulnerabilities on each pull request. AI AutoTriage is now also enabled for this feature, which makes the workflow much more convenient.

Imagine that you introduced a path traversal vulnerability in a pull request and applied an AutoFix. That fix would typically check the path against a denylist of patterns before reading or writing the file. Since scanners based on hardcoded patterns struggle to interpret denylists, even the fixed version would still be flagged as an issue. This is now resolved by applying AutoTriage directly in the CI pipeline.
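To make the scenario concrete, here is a minimal sketch of what a denylist check of that kind might look like. The pattern list and the function name `isSafeFileName` are hypothetical; a real fix depends on the surrounding code.

```javascript
// Hypothetical denylist of fragments that indicate a traversal attempt.
const DENIED_PATTERNS = ["..", "~", "\0"];

// Returns true only for non-empty, relative names without denied fragments.
function isSafeFileName(fileName) {
  if (typeof fileName !== "string" || fileName.length === 0) return false;
  // Reject absolute paths (Unix-style and Windows-style separators).
  if (fileName.startsWith("/") || fileName.startsWith("\\")) return false;
  return !DENIED_PATTERNS.some((pattern) => fileName.includes(pattern));
}

console.log(isSafeFileName("report.pdf"));       // true
console.log(isSafeFileName("../../etc/passwd")); // false
console.log(isSafeFileName("/etc/passwd"));      // false
```

The file read that follows such a check still takes user input, so a rule that only looks at that call keeps flagging it unless something evaluates the check as well.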

Conclusion

We released a powerful feature that filters out false positive SAST findings and helps prioritize the true positives. It is available for everyone to test, even on free accounts. This feature is a major step forward in reducing the “Cry Wolf” effect in cybersecurity, helping developers focus on what really matters: resolving real vulnerabilities and having more time to build features for their customers.