Code reviews are foundational for delivering secure, reliable software, and they deliver the most value when done right. But it’s shockingly easy to slide into habits that hamper efficiency and undermine code quality. Drawing on real-world data and community best practices, let’s break down the most common code review mistakes and how to solve them.

For more on balancing speed and quality, check out Continuous Code Quality in CI/CD Pipelines and compare review strategies in Manual vs. Automated Code Review: When to Use Each.

Common Code Review Mistakes and How to Fix Them

#1. Not Having Clear Review Standards

Without clear review criteria, feedback is inconsistent and often subjective. The Software Engineering Institute found standard checklists reduce errors and speed up the review process.

How to Fix It:

- Create a concise review checklist focused on logic, maintainability, and security.

- Lean on established language style guides to set formatting expectations.

- Reference Common Code Review Mistakes (and How to Avoid Them) to keep teams aligned.

#2. Focusing Too Much on Style

It’s tempting to use reviews to check tabs and variable names. But research published by IEEE shows this focus often distracts from deeper issues, like security or logic bugs.

How to Fix It:

- Use automated linters (like ESLint or Prettier) for formatting.

- Keep human review time focused on functionality, security, and architecture.

- Explore how automation improves reviews in Using AI for Code Review: What It Can (and Can’t) Do Today.

#3. Missing Security Vulnerabilities

Security issues can be subtle. A recent Verizon report found that over 80% of breaches could be traced to overlooked code vulnerabilities.

How to Fix It:

- Train teams on common risks, using resources like the OWASP Top 10.

- Use automated tools such as Aikido Security's Secrets Detection and SAST to catch hidden flaws early.

- For tips on integrating security into reviews, read AI Code Review & Automated Code Review: The Complete Guide.
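To make the idea of automated secrets detection concrete, here is a minimal sketch of a pattern-based scan. It is not how Aikido Security or any other product works internally; the rule names, the `find_secrets` helper, and the three patterns are assumptions chosen for illustration, and real scanners ship far larger rule sets plus entropy checks and validation.

```python
import re

# Hypothetical, deliberately small rule set for illustration only.
SECRET_PATTERNS = {
    # AWS access key IDs start with "AKIA" followed by 16 uppercase alphanumerics.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-style private key headers.
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    # Assignments such as password = "..." with a quoted literal of 4+ characters.
    "hardcoded_password": re.compile(
        r"(?i)\b(?:password|passwd|secret)\s*=\s*['\"][^'\"]{4,}['\"]"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for every line matching a rule."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings


# Made-up sample input; the key below is a placeholder, not a real credential.
sample = (
    'db_host = "localhost"\n'
    'password = "hunter2!"\n'
    'key = "AKIAABCDEFGHIJKLMNOP"\n'
)
print(find_secrets(sample))
```

A check like this can run before a human ever opens the pull request, so reviewers spend their attention on logic and design rather than hunting for stray credentials.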

#4. Conducting Reviews Too Late

Reviewing only at the post-merge or pre-deployment phase multiplies the cost and pain of fixing mistakes. IBM’s Cost of a Data Breach report highlights how early intervention reduces fix costs by up to 15x.

How to Fix It:

- Begin reviews during pre-commit or pre-merge for faster feedback and fewer issues.

- Encourage frequent, smaller pull requests for manageable review cycles.

#5. Overwhelming Teams with Noise

Developer focus suffers when every minor warning becomes an alert. Forrester notes that alert fatigue severely reduces response rates to critical issues.

How to Fix It:

- Use tools (like Aikido Security) that prioritize meaningful issues and suppress low-value noise.

- Adjust alert thresholds and educate teams on triaging warnings effectively.
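As a rough illustration of threshold-based triage, the sketch below filters a list of findings down to the ones worth interrupting a developer for. The `Finding` type, the four severity labels, and the `triage` function are all hypothetical; your tooling will have its own schema and its own prioritization logic.

```python
from dataclasses import dataclass

# Assumed severity scale; adjust to match whatever your scanner reports.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    rule: str
    severity: str
    path: str


def triage(findings, min_severity="high", ignored_rules=frozenset()):
    """Drop ignored rules and anything below the threshold, most severe first."""
    threshold = SEVERITY_RANK[min_severity]
    kept = [
        f
        for f in findings
        if f.rule not in ignored_rules and SEVERITY_RANK[f.severity] >= threshold
    ]
    # sorted() is stable, so findings of equal severity keep their input order.
    return sorted(kept, key=lambda f: SEVERITY_RANK[f.severity], reverse=True)


# Made-up findings for demonstration.
findings = [
    Finding("unused-import", "low", "src/app.py"),
    Finding("sql-injection", "critical", "src/db.py"),
    Finding("weak-hash", "high", "src/auth.py"),
    Finding("todo-comment", "low", "src/api.py"),
]

for finding in triage(findings):
    print(finding.severity, finding.rule, finding.path)
```

Lowering `min_severity` for a periodic cleanup pass, rather than on every commit, is one way to keep the low-severity items visible without turning them into daily alerts.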

#6. Skipping Mentorship and Learning

Reviews are high-value teaching moments, but rapid or adversarial critiques shut down collaboration. Studies by the Linux Foundation emphasize the value of code review for ongoing developer learning.

How to Fix It:

- Provide constructive, clear feedback, especially for junior devs.

- Use actionable review comments to build shared understanding and upskill the team.

#7. Reviewing Massive Pull Requests

Large PRs overwhelm reviewers, making it easy to overlook problems and increasing the chance of bottlenecks. GitHub’s analysis shows that smaller PRs get higher-quality, faster feedback.

How to Fix It:

- Set maximum PR size guidelines (e.g., <400 lines of code).

- Scope each PR to a clear, single purpose.
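A size guideline is easier to follow when it is checked automatically. The sketch below totals added and deleted lines from the text that `git diff --numstat` prints (tab-separated added count, deleted count, and path, with `-` in place of counts for binary files). The function names and the sample diff are assumptions for illustration, and the 400-line default simply mirrors the guideline above.

```python
def changed_lines(numstat_output):
    """Sum added and deleted lines from `git diff --numstat` text output."""
    total = 0
    for line in numstat_output.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        added, deleted = parts[0], parts[1]
        if added == "-" or deleted == "-":
            continue  # binary files report "-" instead of line counts
        total += int(added) + int(deleted)
    return total


def within_size_guideline(numstat_output, max_lines=400):
    """True when the change stays under the configured line budget."""
    return changed_lines(numstat_output) < max_lines


# Made-up numstat output for demonstration.
sample_numstat = (
    "120\t30\tsrc/app.py\n"
    "-\t-\tdocs/logo.png\n"
    "300\t10\tsrc/api.py\n"
)
print(changed_lines(sample_numstat))
print(within_size_guideline(sample_numstat))
```

In a CI job you could feed this function the output of `git diff --numstat main...HEAD` (assuming your base branch is named `main`) and post a warning, rather than a hard failure, when the budget is exceeded.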

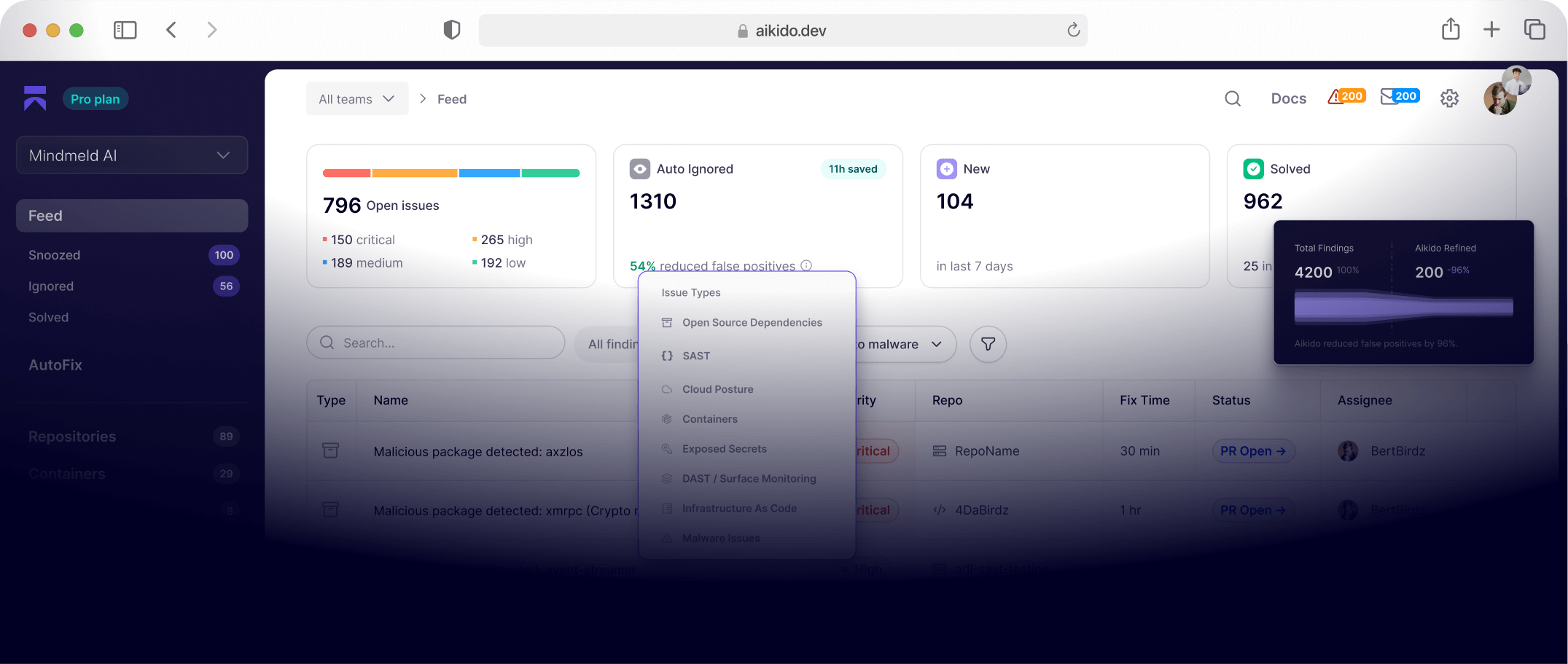

How Aikido Security Elevates Code Reviews

Aikido Security tackles precisely these pain points through its developer-first code review platform:

- Noise Reduction: Eliminates irrelevant alerts and false positives, so devs focus on fixes that matter.

- Actionable Insights: With features like Open Source Dependency Scanning and Cloud Posture Management (CSPM), teams get clear steps to remediate vulnerabilities.

- Seamless Integration: Works with your CI/CD ecosystem, including GitHub and GitLab, and supports compliance needs out of the box. For a practical workflow comparison, see Manual vs. Automated Code Review: When to Use Each.

- Automated Compliance Checks: Generates audit-ready reports for standards like SOC 2 and GDPR, reducing manual effort and risk.

Final Thoughts

Code reviews can be a springboard for developer growth, or a source of tech debt and frustration. By addressing these common mistakes and embracing tools purpose-built for clarity (like Aikido Security), your teams can reclaim review quality and efficiency.

Want more practical strategies? Read Best Code Review Tools for top picks, or check out Continuous Code Quality in CI/CD Pipelines for actionable CI/CD guidance.

Take the next step toward confident, productive code reviews. Try Aikido Security today.