At a Glance

- Identified a number of high- and low-severity issues in a complex financial platform

- Found real vulnerabilities in ~2 hours after a 120-hour manual pentest reported zero findings

- Even with Aikido’s narrowest coverage (20 attacker agents), the AI pentest uncovered issues that the manual penetration testers had missed

- Uncovered issues rooted in custom application logic, authorization design, misconfigurations, hardening gaps, and encryption algorithms

- Validated tenant isolation, role enforcement, and protection of privileged functionality

- Grouped related findings to help teams fix root causes rather than individual symptoms

Challenge

Cope is a Swedish digital finance and operations consultancy that operates a complex financial platform with strict authorization and tenant isolation requirements. The system supports multiple tenant models and handles sensitive financial and operational data for its customers.

Because of this complexity, authorization flaws were a primary concern. Issues involving cross-tenant access, broken role enforcement, or unintended access to privileged functionality could have serious business and compliance impact.

To validate its security posture, Cope commissioned a manual penetration test spanning 120 hours. The final report came back with zero findings.

Rather than increasing confidence, the result raised concerns internally.

“When the manual pentest came back with zero findings, it didn’t actually increase our confidence. With an application this size and this much custom authorization logic, we were sure there had to be issues we just weren’t seeing,” said Cope CTO Alvar Markhester.

Cope wanted deeper assurance without relying solely on infrequent, time-boxed human testing.

Running the AI Pentest

Cope ran Aikido’s AI pentest against its application using the white-box option. Granting repository access gave the agents detailed knowledge of the platform’s structure, APIs, and authorization model.

Cope used Aikido’s narrowest coverage setting: 20 attacker agents.

Given the size of the system and the amount of custom logic involved, understanding how authorization was intended to work was critical.

“Because the application lives in a large monorepo with a lot of custom logic, having a tool that could actually understand the context of how authorization is supposed to work made a big difference.”

The team described their tenant models, role-based access controls, and privileged functionality that should never be exposed.

The objective was clear: ensure users could not access data or functionality outside their intended scope, even through edge cases and complex logic paths.

What the AI Pentest Found

Despite the earlier manual pentest reporting zero findings, Aikido’s AI pentest uncovered multiple valid authorization issues.

These included a few high-severity issues with meaningful security and business impact, along with lower-priority issues that revealed weak or inconsistent enforcement.

To help the engineering team act efficiently, Aikido grouped related findings together. This allowed teams to address entire classes of problems rather than fixing individual issues in isolation.

The findings were not superficial.

The AI pentest uncovered multiple high-severity authorization flaws across the application. These issues affected privileged actions, shared resources, and tenant boundaries, and were rooted in custom application logic rather than simple misconfiguration. All of them were missed by the 120-hour manual pentest.

“The findings weren’t obvious issues; they were complex, so we were super impressed by Aikido Attack,” said Alvar.

The issues spanned different parts of the application, including legacy code paths.

“There were more findings than a manual pentest in this case.”

This was despite using Aikido’s narrowest coverage and a short test run of just over two hours.

While none were rated critical, the issues represented meaningful authorization weaknesses that could have led to data exposure or privilege misuse if left unresolved. Cope has addressed all of the identified issues.

Cope has since retested all of these issues more than once and confirmed that they are mitigated.

Why the Manual Pentest Missed Vulnerabilities

The external pentesters used standard tooling and invested significant time, yet still failed to surface the issues.

From Cope’s perspective, the limitation was not effort or expertise. It was scale.

“It’s a large application, so even small paths in the codebase can be hard to reach within a fixed-time engagement.”

Unlike human testers, who are constrained by time and a predefined scope, the AI agents could keep exploring authorization paths, follow logic across services, and revisit edge cases without fatigue.

“These are issues a human tester could eventually find, but not within a fixed-time engagement. The agents can keep exploring paths and assumptions long after a traditional pentest would have to stop.”

Cope also shared the AI findings with the firm that had performed the manual pentest.

“When we shared the results with the firm that ran the manual pentest, it was clear that the AI pentest had uncovered real issues that simply hadn’t surfaced during the manual pentesting engagement.”

How Cope is Expanding its Use of Aikido Security

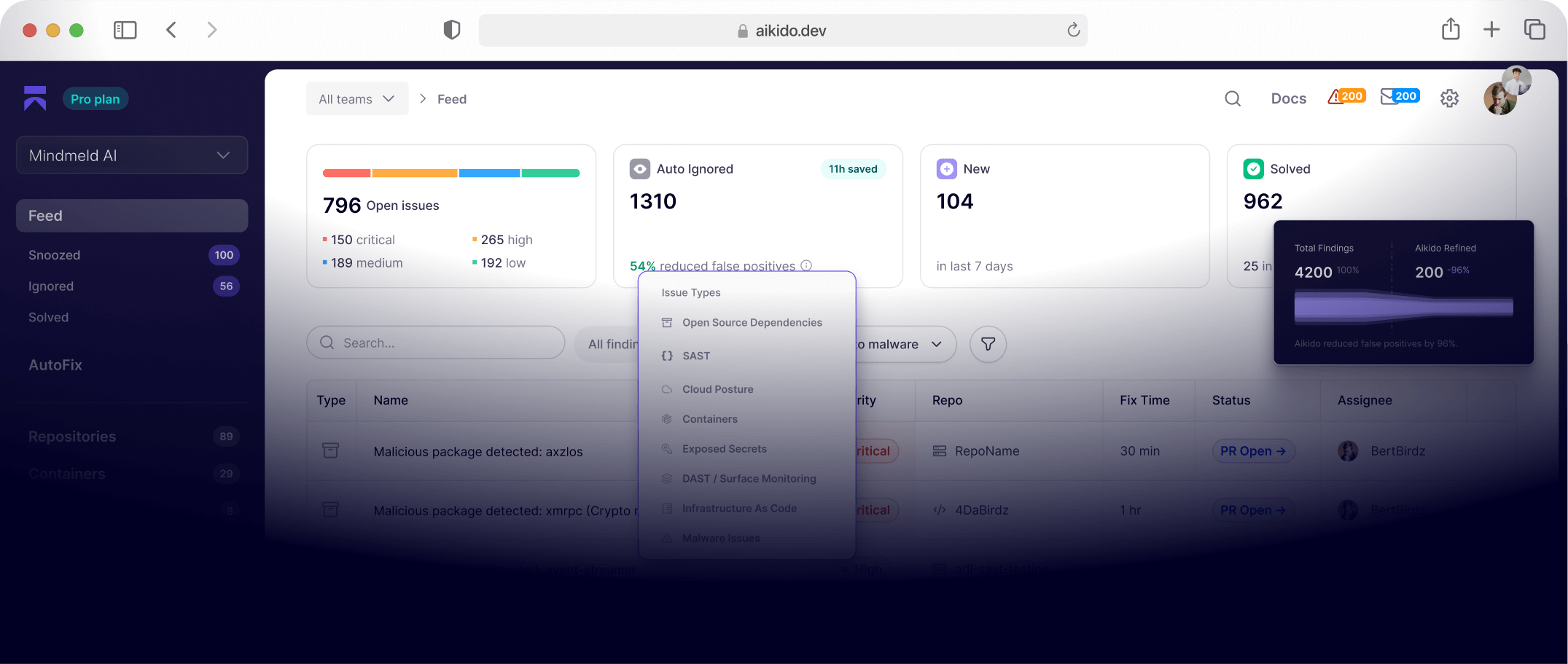

Already using

- AI Pentesting & Attack Surface / DAST (Aikido Attack)

- Aikido Code

- Aikido Zen Firewall (Aikido Protect)

Planning to expand adoption

Evaluating next

- Alvar is interested in using a continuous pentesting solution in the future.

Final verdict

For Cope, AI pentesting was not about replacing human testers. It was about depth, persistence, and context.

“The agents could quite easily understand the context of what the application is about because they have all the information,” said Alvar.

Aikido Attack provided a level of assurance that scales with application complexity and moves beyond traditional point-in-time testing.

“The biggest difference was depth. Aikido didn’t just test endpoints. It understood how our authorization model was supposed to work and kept exploring until it found real gaps that a traditional pentest had missed.”