Dear reader,

This week has been a strange one. Over the past few months, we’ve seen a string of significant supply chain attacks. The community has been sounding the alarm for a while, and the truth is we’ve been skating on thin ice. It feels inevitable that something bigger, something worse, is coming.

In this post, I want to share some of the key takeaways from this week. I had the chance to sit down with Josh Junon, better known as Qix, and interview him about his experience in the middle of this ordeal. Josh is the maintainer who was compromised in the phishing attack, and he is also behind some of the most widely used packages in the npm ecosystem.

Before diving into that conversation (see the interview below), let’s take a quick look back at the events that brought us here.

Prologue - Impact

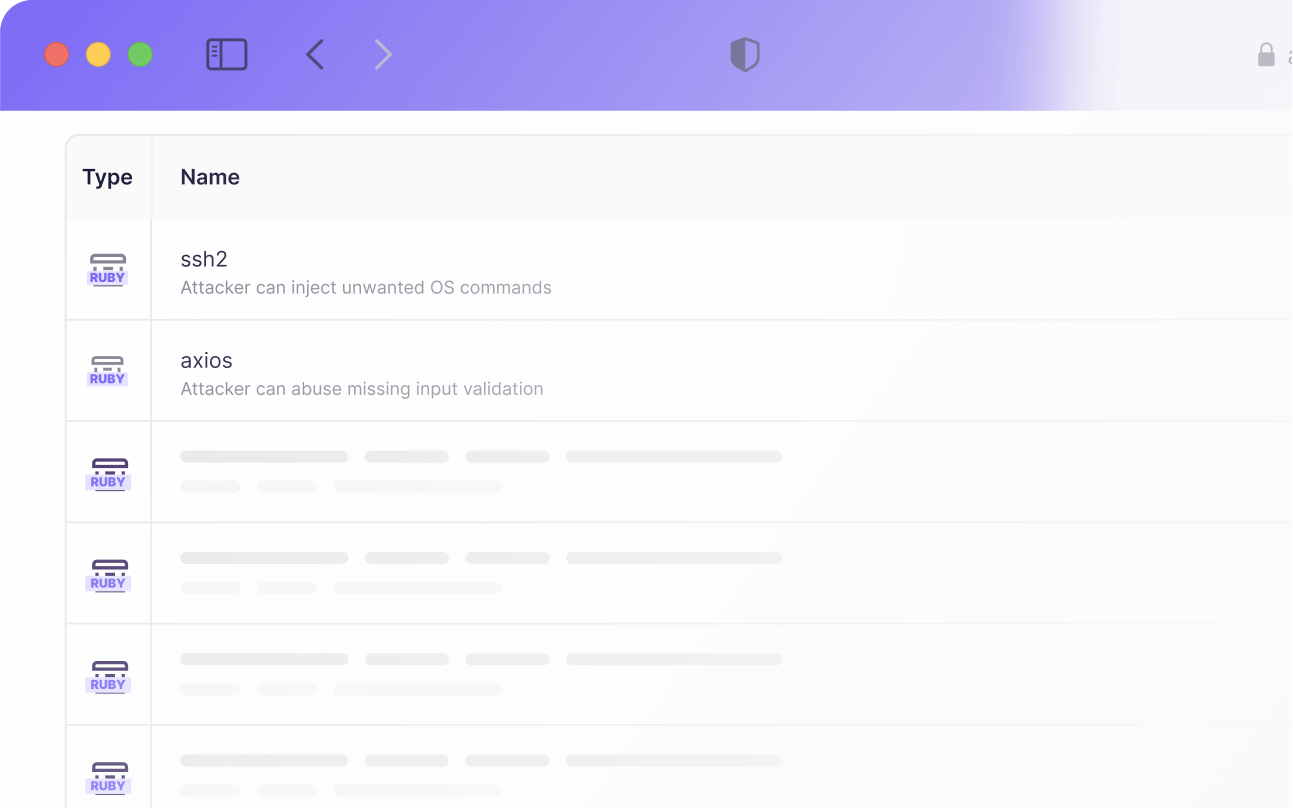

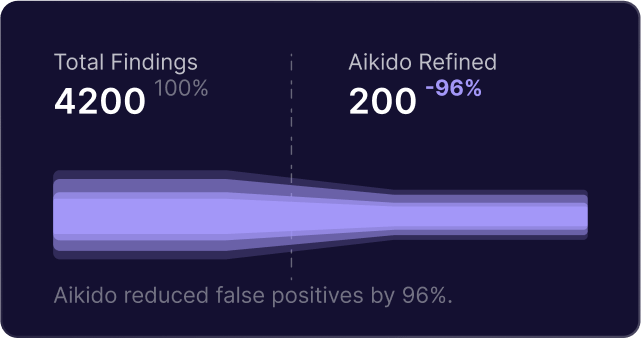

On Monday, when I first saw the alerts in our internal Aikido Intel dashboard, it was immediately clear that this could be one of those worst-case scenarios that keep me up at night. Major packages like debug, chalk, and sixteen others had been compromised with malware. The potential fallout was nothing short of catastrophic.

The numbers speak for themselves:

- Collectively, the compromised packages are downloaded around 2.6 billion times every week.

- JFrog estimates that the malicious versions alone were downloaded roughly 2.6 million times.

- Wiz reported that 99% of the cloud environments it observed rely on at least one of these packages, and that the malicious code was present in 10% of them.

By any measure, this was a close call. As many have noted since, we narrowly dodged a bullet. The only reason the damage wasn’t far worse comes down to two factors:

- The attackers were laser-focused on stealing cryptocurrency.

- They went to great lengths to remain undetected.

But history reminds us how fragile that luck can be. We only need to look back to August 26, 2025, to see what happens when an attacker is less careful in their execution.

Prologue - Nx compromise

On August 26th, the Nx build system was compromised, but the attackers chose a very different playbook:

- They exfiltrated secrets and tokens from developer machines and dumped them publicly on GitHub.

- They then used those tokens to break into GitHub accounts, flipping private repositories into public view.

It was a classic smash-and-grab. A blunt reminder that compromising a widely used package doesn’t just create inconvenience. It can cause real, lasting damage. In many ways, it was a warning shot, showing just how exposed we are.

The conversation with Josh

After reaching out to Josh to alert him about the compromise and continuing our conversations afterward, my colleague Mackenzie and I realized it would be fascinating to sit down with him and hear firsthand what this experience has been like. Fortunately, Josh was open to it.

What followed was a thoughtful and candid conversation that left a real impression on me. I’d like to share some of my biggest takeaways with you here. If you’d like the full context, you can watch the entire 45-minute discussion here:

Insight: Trust Through Vulnerability

Security is usually about eliminating vulnerability. But in open source, trust is only possible because of vulnerability. Maintainers put their code, and a part of themselves, out into the world, exposing it to billions of downloads and countless users. That exposure requires a leap of faith.

When Josh spoke openly about how he was phished, he showed the kind of vulnerability that actually strengthens trust. By admitting mistakes, being transparent, and staying engaged with the community, he reminded us that open source isn’t run by faceless corporations but by people. And trust in those people is what makes the whole system work.

In the end, the paradox is that security seeks to remove vulnerability, but trust in open source is built on it.

I think what made a huge difference is the sort of general response. I know it was pointed out quite a few times that the vulnerability was appreciated. And I think I knew that beforehand. I've had a few cases in open source where I've messed up myself, not security wise, but just being transparent and honest - and immediately really saved a lot of people headaches, time and money. And it's always appreciated.

Insight: Falling Into Open Source

Josh never set out to become a pillar of the JavaScript ecosystem. He began coding as a teenager, experimenting with PHP, C#, and JavaScript, and open source was just a place to share projects that interested him. He was drawn to things like adding colors to terminals or building small utilities, never imagining they would end up at the core of modern software.

Over time, his contributions led to bigger responsibilities. For some libraries, like chalk, he was invited to join as a maintainer after contributing fixes. For others, such as debug, he inherited stewardship from earlier maintainers. What started as tinkering for fun gradually grew into maintaining some of the most widely used packages in the ecosystem.

Years later, Josh found himself responsible for billions of weekly downloads. What had once been small side projects had become critical internet infrastructure. Not through careful planning or ambition, but simply by being in the right place at the right time, and saying “yes” when asked to help.

They started getting used in more and more places, probably even some where they never should have been. Honestly, a few of those packages shouldn’t exist at all, and I’ll be the first to admit it. Then one day you wake up and realize that a tiny utility you wrote to move array values around is used by 55,000 people and downloaded 300 million times a week. You never asked for that responsibility.

Insight: The Powerlessness of Losing Control

Perhaps the hardest part of the incident for Josh wasn’t realizing he’d been phished. It was the helplessness that followed. Once the attackers had his password and 2FA code, they were essentially him in npm’s eyes. They reset his 2FA tokens, changed authentication details, and locked him out of his own account. Even basic actions like resetting his password or recovering access didn’t work.

For nearly 12 hours, all Josh could do was watch from the outside while malicious versions of his packages spread across the internet. He had no way to intervene directly, no button to push, no fast channel to npm support. Instead, he was forced to rely on npm’s public help form and wait.

The feeling of powerlessness is what stuck with him. As he put it, there was no transparency, no tools for a maintainer in crisis to take back control. In the middle of one of the biggest supply chain compromises in recent memory, the person most trusted with those packages was left sitting on the sidelines.

The password reset button didn’t work. I got zero emails throughout this entire thing. Password reset did nothing. So even if the email was still the same on the account, which I still kind of believe it was, reset password wasn’t working… There was no recourse for it other than to contact npm through their public non-authenticated help form.

Insight: A Bullet Dodged

The malware planted in chalk, debug, and the other compromised packages was serious, but its scope was strangely narrow. It attempted to steal cryptocurrency by injecting itself into browser contexts. That decision by the attackers, whether from greed or shortsightedness, is the only reason the fallout wasn’t far worse.

As Josh put it, this could easily have been catastrophic. Those packages run everywhere: laptops, enterprise servers, CI/CD pipelines, and cloud environments. They could have been exfiltrating AWS keys, leaking secrets, or encrypting files for ransom. Instead, the attackers limited themselves to one narrow goal.

It could have done anything and it was being run… on enterprise machines, personal machines, small businesses, CI/CD pipelines. And the fact it didn’t do anything [worse] was the bullet we dodged.

The lesson isn’t that the system worked. It’s that luck was on our side this time. Treating it as a “nothing burger” misses the point. The next attacker might not be so restrained.

Insight: The Human Cost of Open Source

Behind every widely used package is a human being, often working quietly and without fanfare. For Josh, the most challenging part wasn’t just the technical compromise. It was the emotional toll that followed. He described the first day as pure autopilot, scrambling to contain the damage. Only afterward did the weight hit him: embarrassment, anxiety, and the unsettling thought that his mistake could have hurt people.

That was Monday. Once I got my account back that afternoon or that evening, it was like, okay, I can flip the switch off and now I can just lay in bed and stare at the ceiling and think about what just happened… The next day was just embarrassment. I didn’t want to show my face. I didn’t want to go outside.

This is the hidden reality of open source. Small projects created “for fun” become critical infrastructure, carrying billions of downloads. Maintainers never asked for that responsibility, yet they bear the stress when things go wrong. The community sees the code, but often overlooks the human cost of maintaining it.

Josh’s experience is a reminder that ecosystem security isn’t just about stronger MFA or faster incident response. It’s also about supporting the maintainers who shoulder this responsibility — respecting boundaries, showing empathy, and recognizing that trust depends on the people behind the code.

Call to Action

This incident highlighted how a single compromise can ripple through the software supply chain. It wasn’t catastrophic this time, but it easily could have been. The lesson isn’t to panic, but to act.

- For registries (npm, PyPI, etc.): Enforce phishing-resistant MFA for high-impact maintainers and provide clear, rapid-response channels when accounts are compromised.

- For organizations: Improve dependency hygiene. Pin versions, monitor for tampering, and integrate supply chain security checks into CI/CD pipelines.

- For maintainers: Use hardware keys, avoid quick security decisions made under stress, and lean on transparency if something goes wrong.

- For the community: Support the humans behind open source. Respect their boundaries, provide resources where possible, and respond with empathy when mistakes happen.
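
To make the "pin versions" and "monitor for tampering" advice for organizations a little more concrete, here is a minimal sketch of two checks a CI job could run. It is an illustration under stated assumptions, not a complete supply chain control: the function names `findUnpinned` and `findDenylisted` are hypothetical, the package names and versions are placeholders rather than the actual compromised releases, and the denylist would have to come from an advisory feed you trust.

```typescript
// Hypothetical helpers for illustration; not part of npm or any library.
type DependencyMap = Record<string, string>;

// Matches an exact version such as "4.4.1" or "1.0.0-beta.2".
// Anything else (ranges like "^1.2.3", tags like "latest") counts as unpinned.
const EXACT_VERSION = /^\d+\.\d+\.\d+(-[0-9A-Za-z.-]+)?(\+[0-9A-Za-z.-]+)?$/;

// Returns the names of dependencies whose version spec is not an exact version.
function findUnpinned(deps: DependencyMap): string[] {
  return Object.keys(deps)
    .filter((name) => !EXACT_VERSION.test(deps[name]))
    .sort();
}

// Returns "name@version" for each installed package found on the denylist.
function findDenylisted(
  installed: DependencyMap,
  denylist: Record<string, string[]>,
): string[] {
  return Object.keys(installed)
    .filter((name) => (denylist[name] || []).indexOf(installed[name]) !== -1)
    .map((name) => name + "@" + installed[name])
    .sort();
}

// Placeholder data: these package names and versions are made up.
const declared: DependencyMap = { "example-a": "^1.2.3", "example-b": "2.0.0" };
const resolved: DependencyMap = { "example-a": "1.2.4", "example-b": "2.0.0" };
const knownBad: Record<string, string[]> = { "example-a": ["1.2.4"] };

console.log("unpinned:", findUnpinned(declared).join(", "));
console.log("denylisted:", findDenylisted(resolved, knownBad).join(", "));
```

Pinning direct dependencies in `package.json` does not pin transitive ones, so a check like this complements a committed lockfile and installing with `npm ci` rather than replacing them.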

The real call to action is simple: treat ecosystem security as a shared responsibility. Platforms, companies, and communities all have a role to play in making sure the next incident doesn’t escalate into something worse.

Epilogue

It has been a surreal week, being in the eye of a storm that drew so much press and attention, and for good reason. Watching events unfold in real time, from the first alerts in our dashboard to the flood of public reports, felt like standing on a fault line as the ground shifted beneath us. This is a reminder of how fragile our ecosystem is.

What unfolded came uncomfortably close to the kind of worst-case scenario I spend my days worrying about in open source security. But the thing I fear most isn’t the phishing email, the malware, or even the headlines. It’s that we let this moment pass without change.

If the industry shrugs, treats this as just another incident, and slips back into business as usual, we will have learned nothing. And the next time, because there will be a next time, we may not be so lucky. The first time, shame on the attackers. The second time, shame on us.

And if we don’t take steps to learn from this, the quiet erosion of trust in open source may turn out to be the most damaging consequence of all.

