AutoTriage and the Swiss Cheese Model of Security Noise Reduction

Or, why your traditional scanners probably report too much

The Swiss‑cheese model is a classic way to reason about risk. Widely used in safety-critical industries, it posits that incidents happen when “holes” across multiple imperfect defenses line up. To mitigate these risks, you add defense layers to shrink or remove the holes and prevent bad outcomes from slipping through.

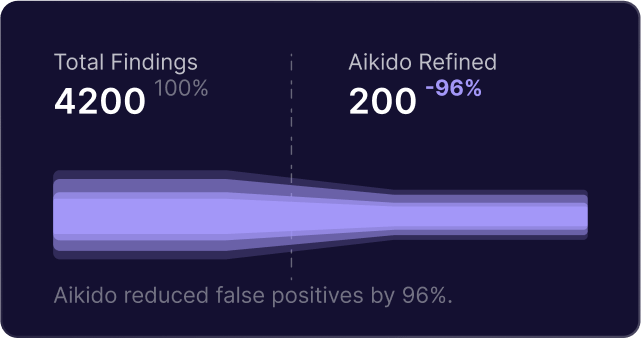

In application security, incidents are caused not just by code and configuration exploits, but also by an erosion of engineering focus caused by false positives and slow remediation. Vulnerability management is equally an engineering problem and a process problem, and Aikido takes a strategic approach to reduce noise and accelerate the fixes that matter.

The importance of layered defense

We can visualize a modern AppSec workflow as including a set of stacked “slices,” each designed to catch different types of problems and shrink the overall risk surface. These include:

- Code scanning and reachability

- Issue triage to assess exploitability, priority, and impact

- Exploit likelihood and its environment context

- Human-in-the-loop controls (local IDEs, CI systems, PR approval flows)

- The actual fix to the code using humans and LLMs

Each of the above can represent both an exploitable weak point and a bottleneck to remediation. Aikido’s workflows are therefore opinionated toward relentlessly reducing friction so that actual vulnerabilities are remediated where they are most exploitable.

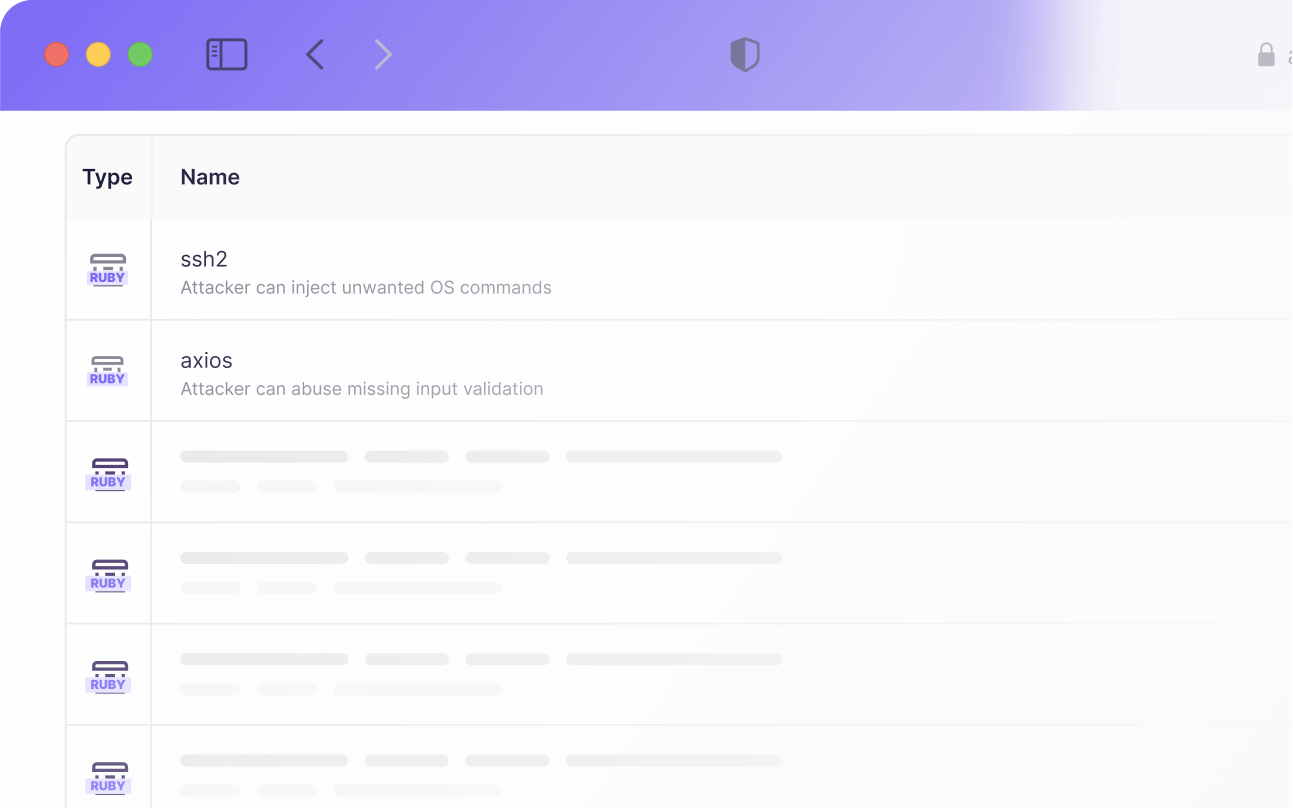

Shrink holes with reachability

A weak point of traditional code scanning is that “success” can be misunderstood as finding as many issues as possible. The resulting reports include findings flagged as vulnerabilities even when the vulnerable code path or package can’t be reached from user‑controlled inputs. Aikido’s reachability engine adds program‑flow analysis to auto-ignore such cases up front. This alone cuts a significant chunk of noise.

For example, a traditional SCA scanner might catch an outdated version of PyYAML containing a critical vulnerability. However, reachability analysis shows there isn’t any code that actually uses the package. Therefore the issue can be safely auto-ignored despite its documented severity.
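To make the idea concrete, here is a minimal sketch of the coarsest possible reachability check: whether a package is imported at all. It is an illustration only and not Aikido’s engine, which the text describes as program‑flow analysis. The helper names `imported_modules` and `is_package_imported` are hypothetical; the one fact relied on is that PyYAML is imported under the module name `yaml`.

```python
import ast


def imported_modules(source: str) -> set[str]:
    """Return the top-level module names imported anywhere in `source`."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # `import a.b.c` counts as a use of top-level package `a`
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Absolute `from a.b import c`; relative imports are skipped
            names.add(node.module.split(".")[0])
    return names


def is_package_imported(sources: list[str], import_name: str) -> bool:
    """True if any source file imports `import_name` (hypothetical helper)."""
    return any(import_name in imported_modules(src) for src in sources)


if __name__ == "__main__":
    project_files = ["import json\nfrom os import path\n"]
    # PyYAML is imported as `yaml`; no file here imports it.
    print(is_package_imported(project_files, "yaml"))
```

A check this simple misses dynamic imports and transitive use through other dependencies, which is part of why a real engine needs program‑flow analysis rather than import matching.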

AutoTriage as a reasoning gate

If reachability determines that a finding is nominally exploitable, Aikido AutoTriage asks the following:

- Can we rule out exploitability? That is, is there effective validation, sanitization, or casting that mitigates the exploit?

- If we can’t rule it out, how should we prioritize it? We estimate likelihood and severity and then rank accordingly.
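The two questions above can be sketched as a simple gate followed by a ranking. This is a hedged illustration, not AutoTriage itself: the `Finding` fields, the 0 to 1 and 0 to 10 scales, and the choice of likelihood multiplied by severity as the ranking key are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    mitigated: bool    # assumption: True if validation, sanitization, or casting rules out the exploit
    likelihood: float  # assumption: estimated exploit likelihood, 0.0 to 1.0
    severity: float    # assumption: estimated impact, 0.0 to 10.0


def triage(findings: list[Finding]) -> list[Finding]:
    """Drop findings that can be ruled out, then rank the rest by risk."""
    # Question 1: can we rule out exploitability?
    remaining = [f for f in findings if not f.mitigated]
    # Question 2: if not, prioritize by estimated likelihood and severity.
    return sorted(remaining, key=lambda f: f.likelihood * f.severity, reverse=True)


if __name__ == "__main__":
    ranked = triage([
        Finding("SQL injection behind integer cast", mitigated=True, likelihood=0.9, severity=9.0),
        Finding("Path traversal in upload handler", mitigated=False, likelihood=0.5, severity=8.0),
        Finding("Verbose error message", mitigated=False, likelihood=0.9, severity=3.0),
    ])
    for finding in ranked:
        print(finding.title)
```

The ordering of the two steps is the point: ruling a finding out is cheaper than scoring it, so the gate runs first.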

We’ve previously published how reasoning models help here. In many cases, “rules‑of‑thumb” are sufficient (and cheaper). For more complex cases (for example, path traversals), reasoning models reduce false positives further by decomposing the problem.

Underpinning all of this is an acute awareness of the Cry Wolf effect, where too many low‑value alerts degrade trust and response. Reducing it is a primary goal of AutoTriage.

Exploit likelihood and environment context

Real‑world exploit likelihood matters. Aikido uses EPSS (the Exploit Prediction Scoring System) to automatically ignore or downgrade low‑risk issues and focus teams where attacks are actually happening. Because EPSS is updated daily, this layer keeps the stack aligned with current threat reality rather than historic CVSS scores.
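As a rough sketch of how an EPSS-based filter might look: EPSS scores are probabilities between 0 and 1, so a pair of cutoffs is enough to express "ignore", "downgrade", and "keep". The function name `epss_action` and both threshold values are hypothetical, chosen only for illustration; the source does not state which cutoffs Aikido applies.

```python
def epss_action(epss_score: float,
                ignore_below: float = 0.01,
                downgrade_below: float = 0.10) -> str:
    """Map an EPSS probability to a triage action.

    Both thresholds are illustrative assumptions, not Aikido's values.
    """
    if not 0.0 <= epss_score <= 1.0:
        raise ValueError("EPSS scores are probabilities in the range [0, 1]")
    if epss_score < ignore_below:
        return "ignore"
    if epss_score < downgrade_below:
        return "downgrade"
    return "keep"


if __name__ == "__main__":
    for score in (0.002, 0.05, 0.6):
        print(score, epss_action(score))
```

Because the scores change daily, a filter like this would need to be re-evaluated on fresh data rather than computed once when the finding is first seen.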

On top of that, we upgrade or downgrade severity based on context like internet exposure, dev vs. prod environments and surrounding controls. This is where context gathering is critical. If the context is wrong, the severity decision is wrong. It’s why we invest in prompt tuning our LLMs and in piping the right code and environment details into the models performing AutoTriage.
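A minimal sketch of context-based severity adjustment, assuming a four-level scale and one step up or down per signal. The level names, the `adjust_severity` function, and the size of each shift are assumptions for illustration; in the approach described above, the context is gathered and weighed by the models performing AutoTriage rather than by fixed rules.

```python
LEVELS = ["low", "medium", "high", "critical"]  # assumed severity scale


def adjust_severity(base: str,
                    internet_exposed: bool,
                    production: bool,
                    compensating_controls: bool) -> str:
    """Shift a base severity up or down using environment context."""
    shift = 0
    if internet_exposed:
        shift += 1  # reachable from the internet: raise
    if not production:
        shift -= 1  # dev or test environment: lower
    if compensating_controls:
        shift -= 1  # surrounding controls reduce impact: lower
    index = LEVELS.index(base) + shift
    # Clamp so the result stays on the scale
    return LEVELS[max(0, min(index, len(LEVELS) - 1))]


if __name__ == "__main__":
    print(adjust_severity("high", internet_exposed=True, production=True,
                          compensating_controls=False))
```

The sketch also shows why wrong context produces a wrong decision: flipping a single boolean input changes the output level.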

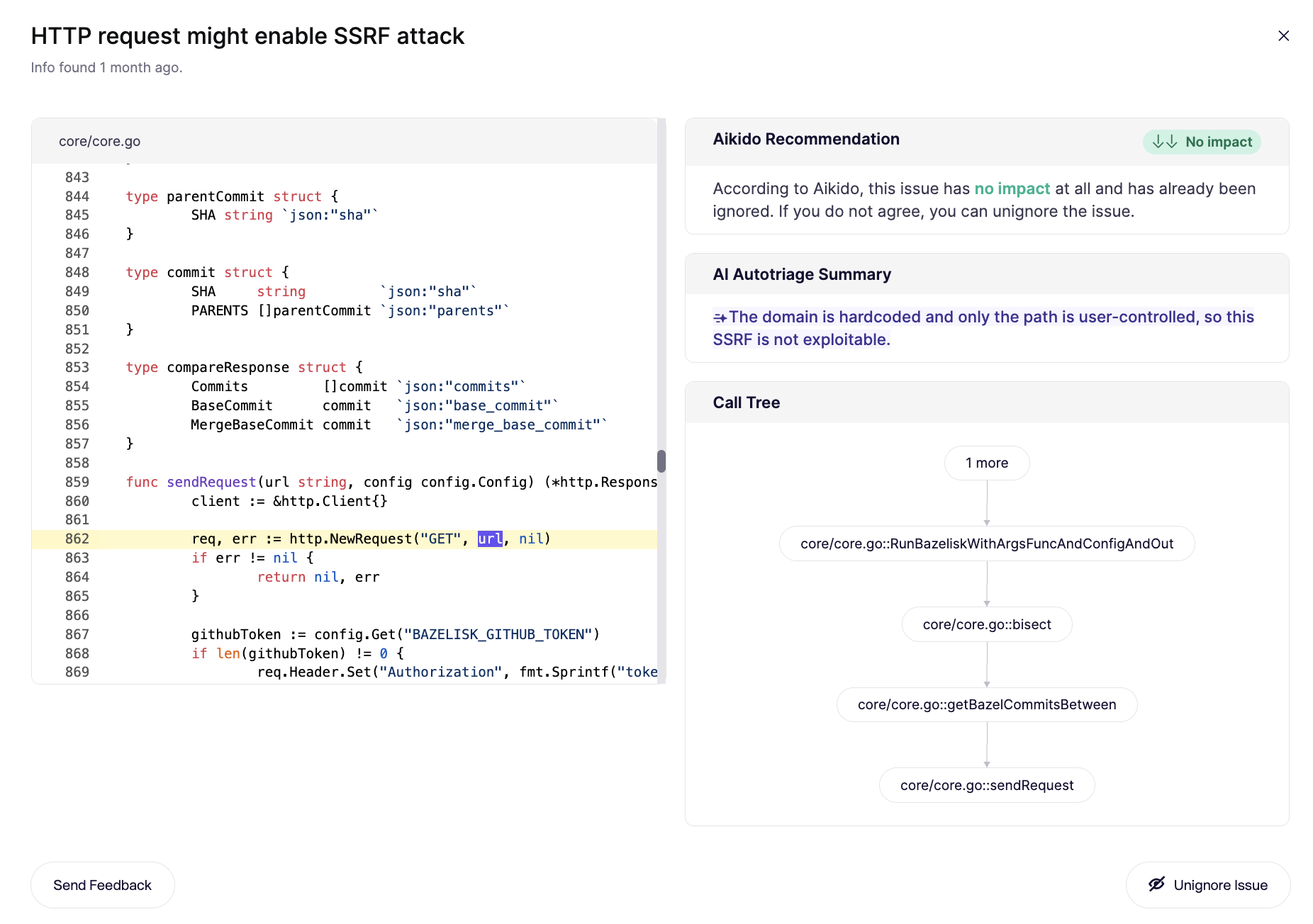

Reachability shows that this exploit is reachable in the code. However, AI analysis determines that in context this request forgery vulnerability has no effect because the host domain is hardcoded and can’t be changed by the attacker.

Human‑in‑the‑loop where it counts

Generative AI has a well-documented feedback loop problem. Humans are still needed to gut-check outputs and ultimately decide whether to ship. Aikido intentionally surfaces its recommendations in the places your team already works:

- In your IDE, so you can make quick fixes before pushing to a shared repo and running CI pipelines

- In PRs, so reviewers see triage context and AutoFix suggestions right when code changes

- In CI, so you can place security in the context of your existing automated build, test, and deploy pipelines

The goal is to reserve human time, effort, and judgement for the places where they’re truly needed.

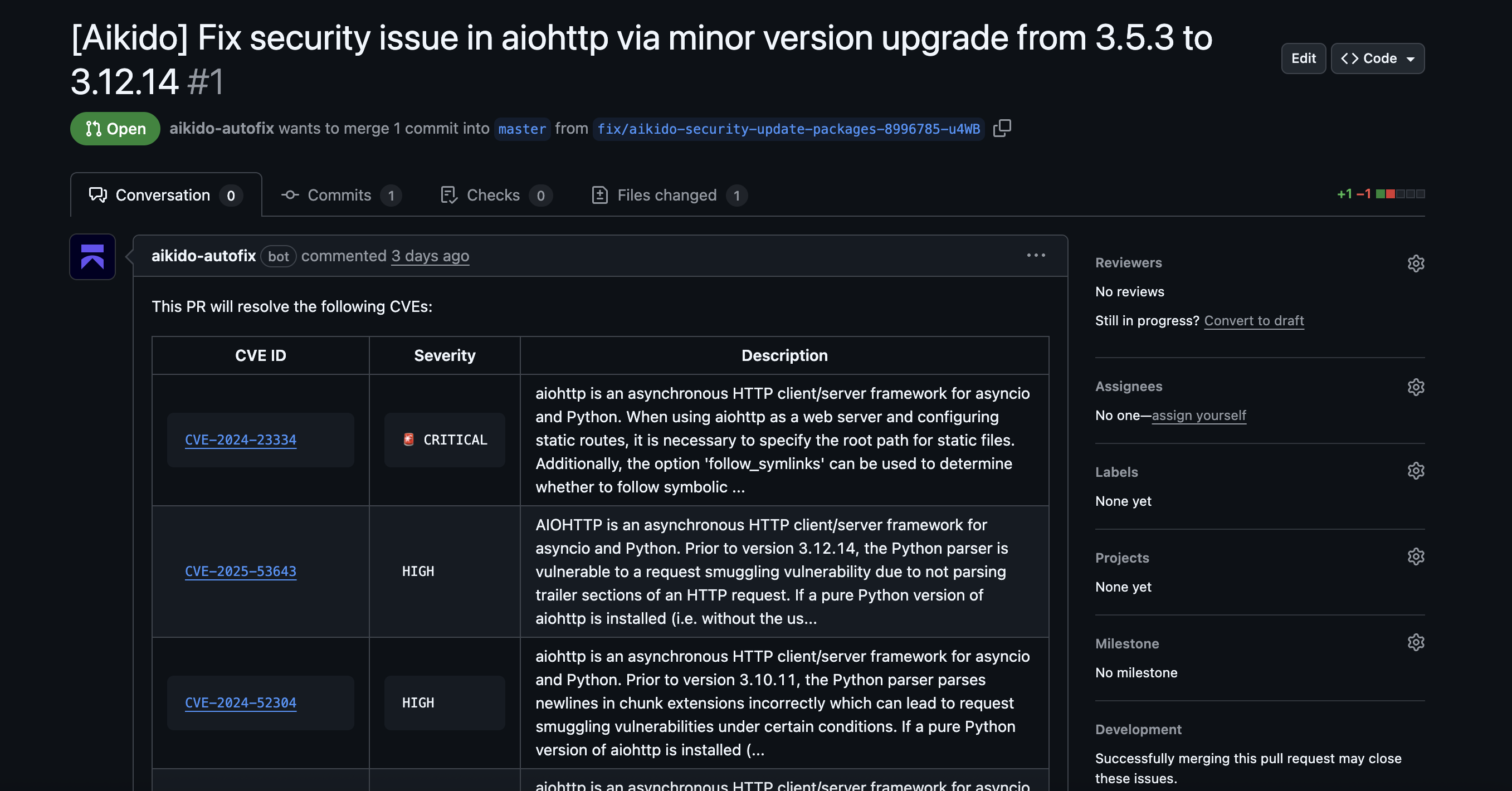

AutoFix to ship fixes immediately

If a finding is likely exploitable and high-impact, the best alert is one that includes a documented fix with the context already assembled by Aikido. Aikido AI AutoFix provides a preview of the change needed for the fix, generates the pull request for your repository, and adds detailed comments explaining the code change and which vulnerabilities it resolves.

AutoFix is currently available for SAST, IaC scanning, SCA, and container images. Your code is never persistently stored by an AI technology, nor is it allowed to be used to train AI models (see Aikido’s trust center to learn more).

Putting Aikido to work

The original Swiss‑cheese model teaches that incidents emerge from aligned weaknesses. In application security, noise and delay are two of those weaknesses. If you’re new to Aikido, you may initially wonder why you see fewer security issues surfaced than you expected. This is by design! Aikido intentionally and proactively works to minimize the administrative work of triaging hundreds of issues when only some of them have a meaningful impact.

By layering reachability, reasoning‑driven triage, human validation when warranted, and one‑click fixes, we make it much harder for those holes to line up, and much easier for your team to move on to the work that matters.

