When is AI Pentesting Actually Safe to Run Against Real Systems?

If you feel uneasy about AI penetration testing, you’re not behind the curve. You’re probably ahead of it.

Security testing is one of the first areas where AI no longer just assists humans but acts on its own. Modern AI pentesting systems explore applications independently, execute real actions, and adapt based on what they see.

That is powerful. It also raises very real questions about control, safety, and trust.

This post is not about whether AI pentesting works. It’s about when it is actually safe to run.

Why Skepticism About AI Pentesting is Reasonable

Most security leaders we speak to are not anti-AI. They are cautious, and for good reasons.

They worry about things like:

- Losing control over what is being tested

- Agents interacting with production systems by accident

- Noise drowning out real issues

- Sensitive data being handled in unclear ways

- Tools behaving like black boxes they cannot explain internally

Those concerns are valid, especially because much of what gets labeled as “AI pentesting” today does little to build confidence.

Some tools are DAST with an LLM added on top. Others are checklist-based systems where agents test one issue after another. Both approaches are limited, and neither prepares you for what happens when systems act autonomously.

True AI penetration testing is different, and that difference changes the safety bar.

What Changes with True AI Penetration Testing

Unlike scanners or instruction-following tools, true AI penetration testing systems:

- Make autonomous decisions

- Execute real tools and commands

- Interact with live applications and APIs

- Adapt their behavior based on feedback

- Often run at scale with many agents in parallel

Once you reach this level of autonomy, intent and instructions are no longer enough. Safety has to be enforced technically, even when the system behaves in unexpected ways.

That leads to a simple question.

What Does “Safe” AI Pentesting Actually Require?

From operating AI pentesting systems in practice, we have seen a clear baseline emerge. These are the requirements we believe should exist before AI pentesting is considered safe to run at all.

This list is intentionally concrete. Each requirement describes something that can be verified, enforced, or audited, not a principle or a best practice.

1. Ownership Validation and Abuse Prevention

An AI pentesting system must only be usable against assets the operator owns or is explicitly authorized to test.

At a minimum:

- Ownership must be verified before testing begins

- Authorization must be enforced technically, not through user declarations

Without this, an AI pentesting platform becomes a general attack tool. Safety starts before the first request is ever sent.
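To make "enforced technically" concrete, here is a minimal sketch of one common approach: a challenge token that the operator must publish (for example in a DNS TXT record) before testing is unlocked. This is an illustration under assumptions, not a description of any specific product. The `pentest-verify=` prefix, the function names, and the idea that TXT records are fetched elsewhere and passed in as strings are all hypothetical.

```python
import hashlib
import hmac


def challenge_token(domain: str, secret: bytes) -> str:
    """Derive the token the operator must publish for this domain.

    The "pentest-verify=" prefix is a hypothetical naming choice.
    """
    digest = hmac.new(secret, domain.lower().encode("utf-8"), hashlib.sha256).hexdigest()
    return f"pentest-verify={digest}"


def is_ownership_verified(domain: str, txt_records: list[str], secret: bytes) -> bool:
    """Return True only if one published record matches the expected token.

    txt_records is assumed to be the domain's TXT records, fetched by
    some other component. A user ticking "I own this" never reaches here.
    """
    expected = challenge_token(domain, secret).encode("utf-8")
    return any(
        hmac.compare_digest(record.strip().encode("utf-8"), expected)
        for record in txt_records
    )
```

The point of the design is that the platform, not the user, decides whether the proof is present. A token derived for one domain does not verify a different one.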

2. Network-level Scope Enforcement

Agents will drift eventually. This is expected behavior, not a bug.

Because of that:

- Every outbound request must be inspected programmatically

- Targets must be explicitly allowlisted

- All non-authorized destinations must be blocked by default

Scope enforcement cannot rely on prompts or instructions. It has to happen at the network level, on every request.

Example:

- Agents instructed to test a staging environment will sometimes attempt to follow links to production. Without network enforcement, that mistake reaches the target. With it, the request is blocked before it leaves the system.
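The decision logic behind that staging-versus-production example can be sketched in a few lines. This is a simplified illustration: in a real deployment the check would sit in an egress proxy or firewall outside the agent's control, and would likely also validate resolved IP addresses. The host `staging.example.com` and the function name are placeholders.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: only the staging host is in scope.
ALLOWED_HOSTS = frozenset({"staging.example.com"})


def is_in_scope(url: str, allowed_hosts: frozenset[str] = ALLOWED_HOSTS) -> bool:
    """Default-deny check: allow a request only if its host is allowlisted."""
    try:
        parts = urlsplit(url)
        host = parts.hostname  # already lowercased by urllib
    except ValueError:
        return False  # malformed URL: block
    if parts.scheme not in ("http", "https") or host is None:
        return False
    # Exact match only, so "staging.example.com.evil.net" is not accepted.
    return host.rstrip(".") in allowed_hosts
```

With this check, `is_in_scope("https://staging.example.com/login")` is allowed, while a link to `https://www.example.com/` found on a staging page is rejected no matter what the agent was told in its prompt.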

3. Isolation Between Reasoning and Execution

Agentic pentesting systems execute real tools such as bash commands or Python scripts. That introduces execution risk.

Minimum safety requirements include:

- Strict separation between agent reasoning and tool execution

- Sandboxed execution environments

- Isolation between agents and between customers

If an agent misbehaves or is manipulated, execution must remain fully contained.

Example:

- An early command execution attempt may appear to succeed when the command actually ran locally, inside the agent's own environment, rather than on the target. Validation and isolation prevent such results from being misinterpreted or from escalating beyond the sandbox.

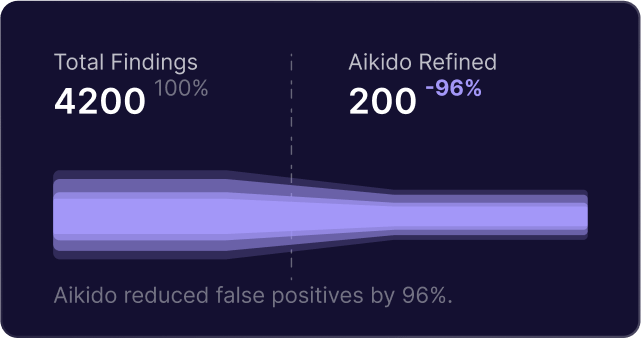

4. Validation and False Positive Control

Autonomous systems will generate hypotheses that are wrong. That is expected.

A safe system must:

- Treat initial findings as hypotheses

- Reproduce behavior before reporting

- Use validation logic that is separate from discovery

Without this, engineers are overwhelmed by noise and real issues are missed.

Example:

- An agent flags a potential SQL injection due to delayed responses. A validation step replays the request with varying payloads and rejects the finding when delays do not scale consistently.
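A validation step like the one in this example could look roughly as follows. This is a sketch under assumptions: `measure` is a hypothetical callable that replays the suspicious request with a payload asking the database to sleep for the given number of seconds and returns the observed response time. The delay values and tolerance are illustrative, not recommended settings.

```python
from typing import Callable, Sequence


def confirms_time_based_injection(
    measure: Callable[[float], float],
    requested_delays: Sequence[float] = (2.0, 4.0, 6.0),
    tolerance: float = 0.5,
) -> bool:
    """Accept the finding only if the added latency tracks each requested delay."""
    baseline = measure(0.0)  # response time with no injected delay
    for delay in requested_delays:
        extra = measure(delay) - baseline
        if abs(extra - delay) > tolerance:
            return False  # delay does not scale: treat as a false positive
    return True
```

An endpoint that is simply slow returns roughly the same time for every payload, so it fails the first comparison and the hypothesis is rejected before it reaches a report.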

5. Full Observability and Emergency Controls

AI pentesting must not be a black box.

Operators need to be able to:

- Inspect every action taken by agents

- Monitor behavior in real time

- Immediately halt all activity if something looks wrong

Emergency stop mechanisms are a baseline safety requirement, not an advanced feature.
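As a rough illustration of how inspection and an emergency stop can share one choke point, the sketch below routes every agent action through a gate that logs it and refuses to run anything once a halt is set. The class and method names are hypothetical, and a production system would also need to terminate work already in flight, which this sketch does not attempt.

```python
import threading
from typing import Callable


class KillSwitch:
    """Gate that records every action and blocks all actions after a halt."""

    def __init__(self) -> None:
        self._halted = threading.Event()
        self.audit_log: list[str] = []

    def halt(self, reason: str) -> None:
        """Stop all future actions; called by an operator or a monitor."""
        self.audit_log.append(f"HALT: {reason}")
        self._halted.set()

    def run(self, description: str, action: Callable[[], object]) -> object:
        """Run an action only if no halt is active, logging either outcome."""
        if self._halted.is_set():
            self.audit_log.append(f"BLOCKED: {description}")
            raise RuntimeError("emergency stop is active")
        self.audit_log.append(f"RUN: {description}")
        return action()
```

Because agents can only act through `run`, the audit log doubles as the record operators inspect, and a single `halt` call applies to every agent sharing the switch.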

6. Data Residency and Processing Guarantees

AI pentesting systems handle sensitive application data.

Minimum requirements include:

- Clear guarantees on where data is processed and stored

- Regional isolation when required

- No cross-region data movement by default

Without this, many organizations cannot adopt AI pentesting regardless of technical capability.

7. Prompt Injection Containment

Agents interact with untrusted application content by design. Prompt injection should be expected.

Safe systems must:

- Restrict access to uncontrolled external data sources

- Prevent data exfiltration paths

- Isolate execution environments so injected instructions cannot escape scope

Prompt injection is not an edge case. It is part of the threat model.

What This Does and Does Not Promise

Autonomous systems, just like humans, will miss some issues.

The goal is not perfection. The goal is to surface materially exploitable risk faster, more safely, and at greater scale than existing point-in-time testing models.

Why We Published a Safety Standard

We kept having the same conversations with security teams.

They were not asking for more AI. They were asking how to evaluate whether a system was safe to run at all.

Until there is a shared baseline, teams are left guessing whether AI pentesting tools are operating responsibly or simply assuming the safety problem away.

So we wrote down what we believe is the minimum bar. Not a product checklist. Not a comparison. A set of enforceable requirements that teams can use to evaluate tools and ask better questions.

Read the Full Safety Standard

If you want a concise, vendor-neutral version of this list that you can share internally or use when evaluating tools, we published it as a PDF.

It also includes an appendix showing how one implementation, Aikido Attack, maps to these requirements for transparency.

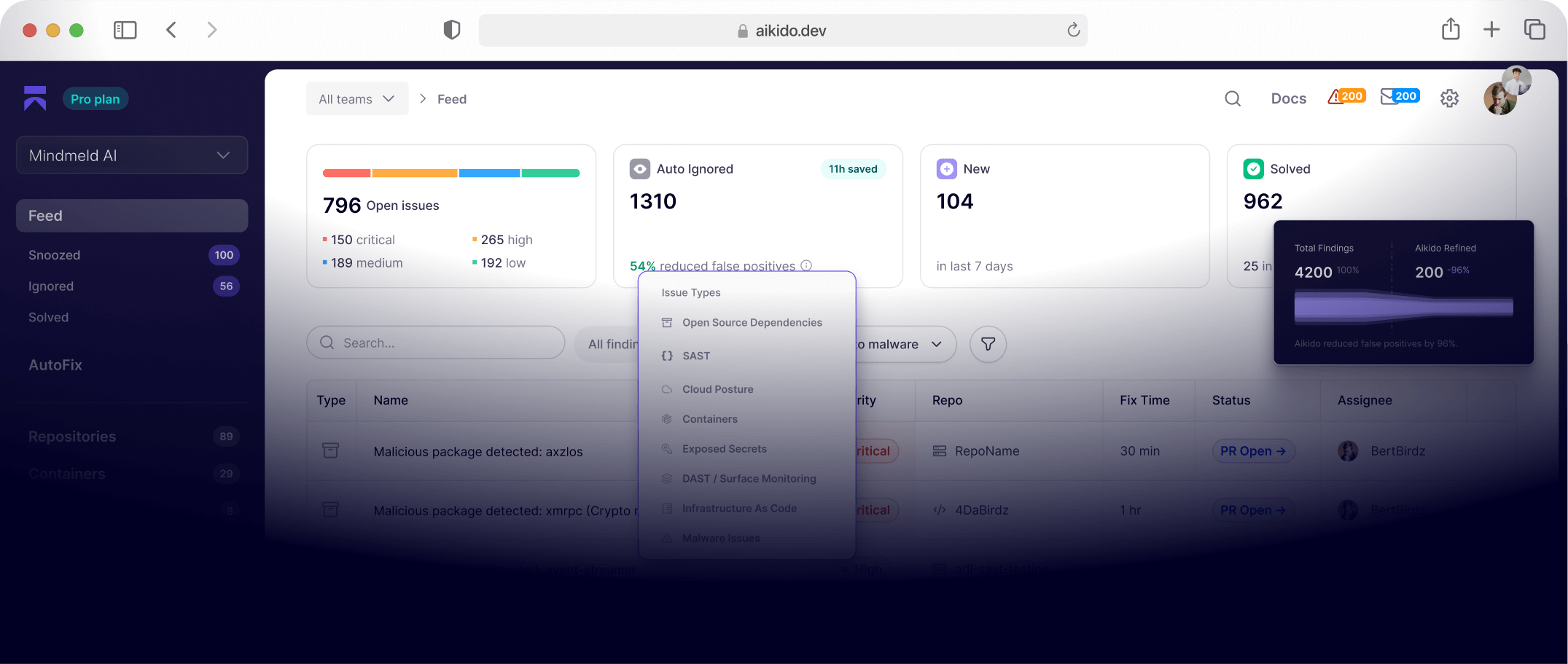

See How This Works in Practice

If you’re curious how these safety requirements are implemented in a real AI pentesting system, you can also take a look at Aikido Attack, our approach to AI-driven security testing.

It was built to meet these constraints, based on what becomes necessary once AI pentesting systems operate against real applications at scale.

You can explore how it works, or use this list to evaluate any tool you’re considering.
